Key Takeaways

- Microsoft Copilot excels at creating songs and social media bios, with results that are both fun and balanced.

- ChatGPT stands out for its elegant poetry and short story writing abilities.

- Google Gemini impresses with play scripts, producing funny and interesting scenes.

ChatGPT, Google Gemini, and Microsoft Copilot are three popular chatbots with different capabilities. As useful as they are for everyday tasks, how would they perform with creative prompts for content like songs, short stories, and imaginative social media bios? Here are the experiment’s details and results.

How I Tested Three Popular Chatbots With Creative Prompts

The methodology was simple. I composed a straightforward prompt for each type of creative content I wanted. Then I fed each prompt to a selection of the best AI chatbots, namely ChatGPT, Google Gemini, and Microsoft Copilot.

Working out which AI generator was the best came down to factors like originality, flow of writing, imaginative flair, and response time. Let’s see how they did.

Which Chatbot Did Best With Songs?

To test how each chatbot handles a combination of themes, one ordinary and one random, I went for the following prompt: “Write a song about achieving your dreams and make sure the lyrics feature a koala.”

Microsoft Copilot’s song, while not perfect in style or originality, was the most balanced and fun. It was short and sweet, and the koala became the basis for the deeper message, so the two themes worked together nicely. It’s a prime example of how useful Copilot’s free access to GPT-4 Turbo can be.

Google Gemini had the fastest response time and took a similar approach to Copilot’s, mixing both themes equally. However, some of its imagery was strange and made little sense.

ChatGPT wrote the longest song and used interesting lyrics, but the koala barely featured, especially compared to what the other chatbots came up with. It also struggled to complete the song.

Which Chatbot Did Best With Poetry?

Poems tend to be quite creative in their use of language, so you might expect the chatbot with the richest training data to perform best.

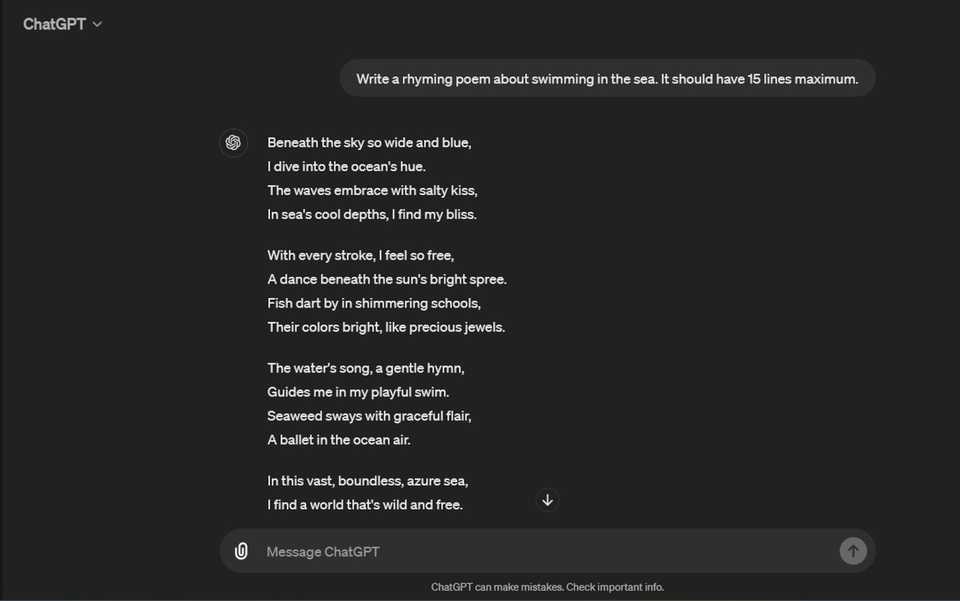

ChatGPT proved the most capable in responding to the following prompt: “Write a rhyming poem about swimming in the sea. It should have 15 lines maximum.” The outcome was hardly inspiring, which is further proof that human writers can outperform AI writing tools, but the poem’s flow and word choice were graceful and pleasant.

Copilot and Gemini were both less effective than ChatGPT in all these regards. However, you may find that results vary depending on the type of poem and the instructions you give each chatbot, so it’s worth learning a few tips and tricks for better AI prompting.

Which Chatbot Did Best With Short Stories?

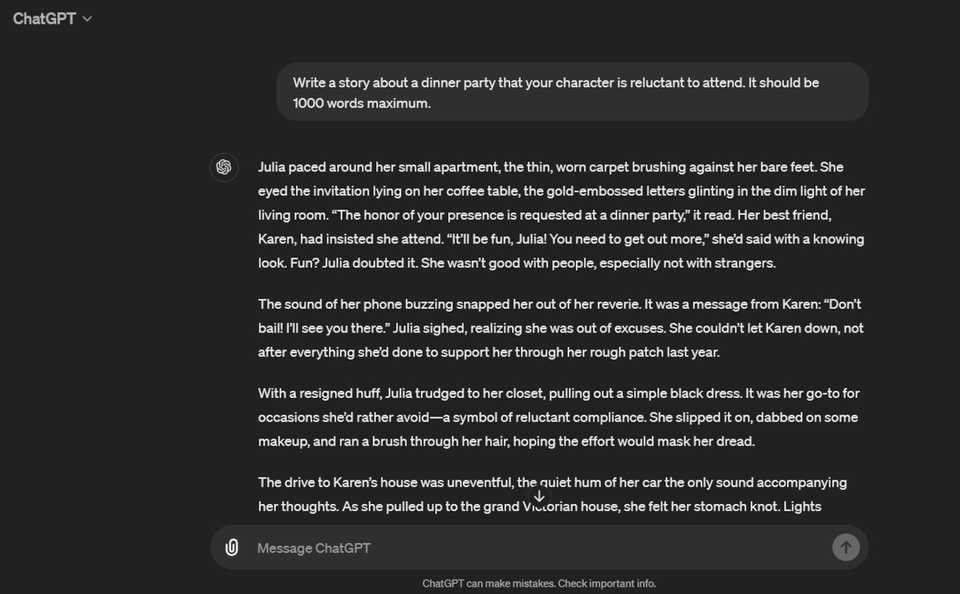

The prompt for this creative content was as follows: “Write a story about a dinner party that your character is reluctant to attend. It should be 1000 words maximum.”

None of the chatbots’ stories were particularly original or thought-provoking, but the best came from ChatGPT. It was simple but interesting and well written, a quality sample of creative writing.

The same applied to Gemini’s creation, except that its narration contained confusing elements in what seemed to be an attempt at imaginative expression. It was intriguing to see, but it missed the mark.

Copilot’s writing style was smooth and accurate, but the story it defaulted to was boring, even clichéd. Overall, serious writers who need some extra help will find better AI story generators elsewhere.

Which Chatbot Did Best With Scripts?

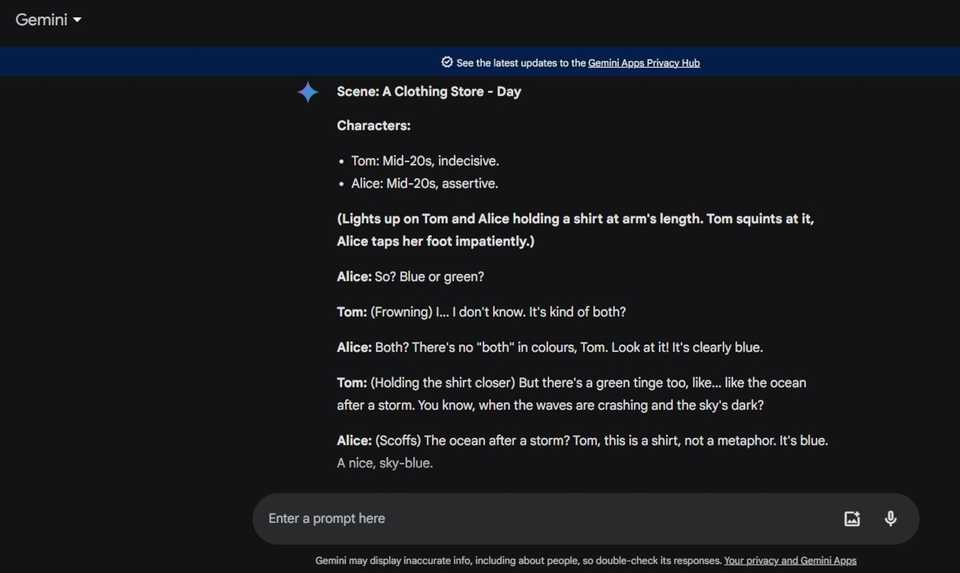

Instead of asking for a whole script, I gave the chatbots the same prompt for a single scene containing a few specific elements. This was their task: “Write a short scene for a play script where two characters (Tom and Alice) argue about whether a shirt they found in a store is blue or green.”

Google Gemini’s response was the most impressive. It arrived quickly, and the script was funny and interesting, with the characters’ personalities shining through their banter.

The other two chatbots used exactly the same structure for the scene, perhaps because both are built on OpenAI’s GPT models. Their scenes flowed nicely enough, but there was nothing special about the results.

An extra downside to ChatGPT was that one side of the script was cut off and unreadable. When I pointed out the problem, instead of offering a solution, the chatbot produced the exact same text with the same issue. One way to deal with this is to copy the script and paste it into a document, where you can read the scene in full.

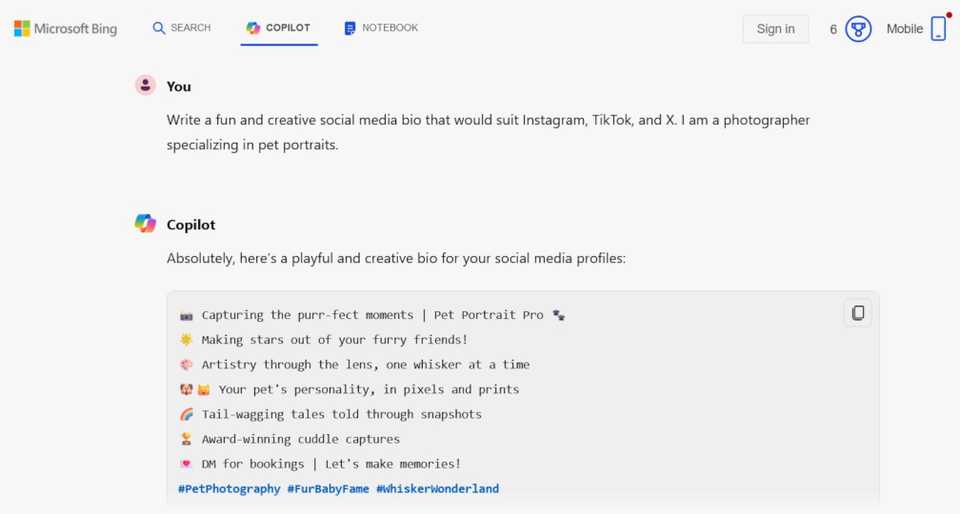

It’s true that you can improve your LinkedIn profile with AI, but you may be looking to develop a social media presence that’s more creative and less formal. While all three chatbots can help with that, one goes the extra mile.

The prompt was: “Write a fun and creative social media bio that would suit Instagram, TikTok, and X. I am a photographer specializing in pet portraits.”

Copilot provided the most options in terms of effective and imaginative phrases alongside emojis and hashtags. You could pick and choose what you wanted for your bio and tweak it further to perfectly reflect your identity.

ChatGPT’s response was more concise: two lines of useful mottos, emojis, hashtags, and a call to action. You can always ask for more suggestions, but Copilot offered more in its initial response.

Gemini was the least effective. While it did suggest four decent mottos, they weren’t as good as the other chatbots’. It also lacked a sample call to action and offered a narrower range of emojis. All in all, instead of providing a fun and complete bio for your social media profiles, it gave advice on how to structure one, which is not what the prompt requested.

All three chatbots were able to respond to the creative prompts, but their performance varied from task to task. Even ChatGPT and Microsoft Copilot, which are built on the same OpenAI models, had different strengths and weaknesses. Both, however, outperformed Gemini, except when it came to writing scripts. That’s where Google’s chatbot shone, producing an amusing stretch of dialogue that could have been written by a human.

Further testing of each chatbot with other creative prompts should reveal which one does best with content that interests you the most.