The United States is reportedly seeking to curb sexually abusive deepfakes generated by artificial intelligence by calling on companies to voluntarily help shut down the abusive use of the technology.

On Thursday, in the absence of legislation on the issue, the White House asked corporations to cooperate voluntarily.

Officials hope that by committing to a set of specific measures, the private sector can curb the creation, distribution, and monetization of such nonconsensual AI images, including explicit images of children.

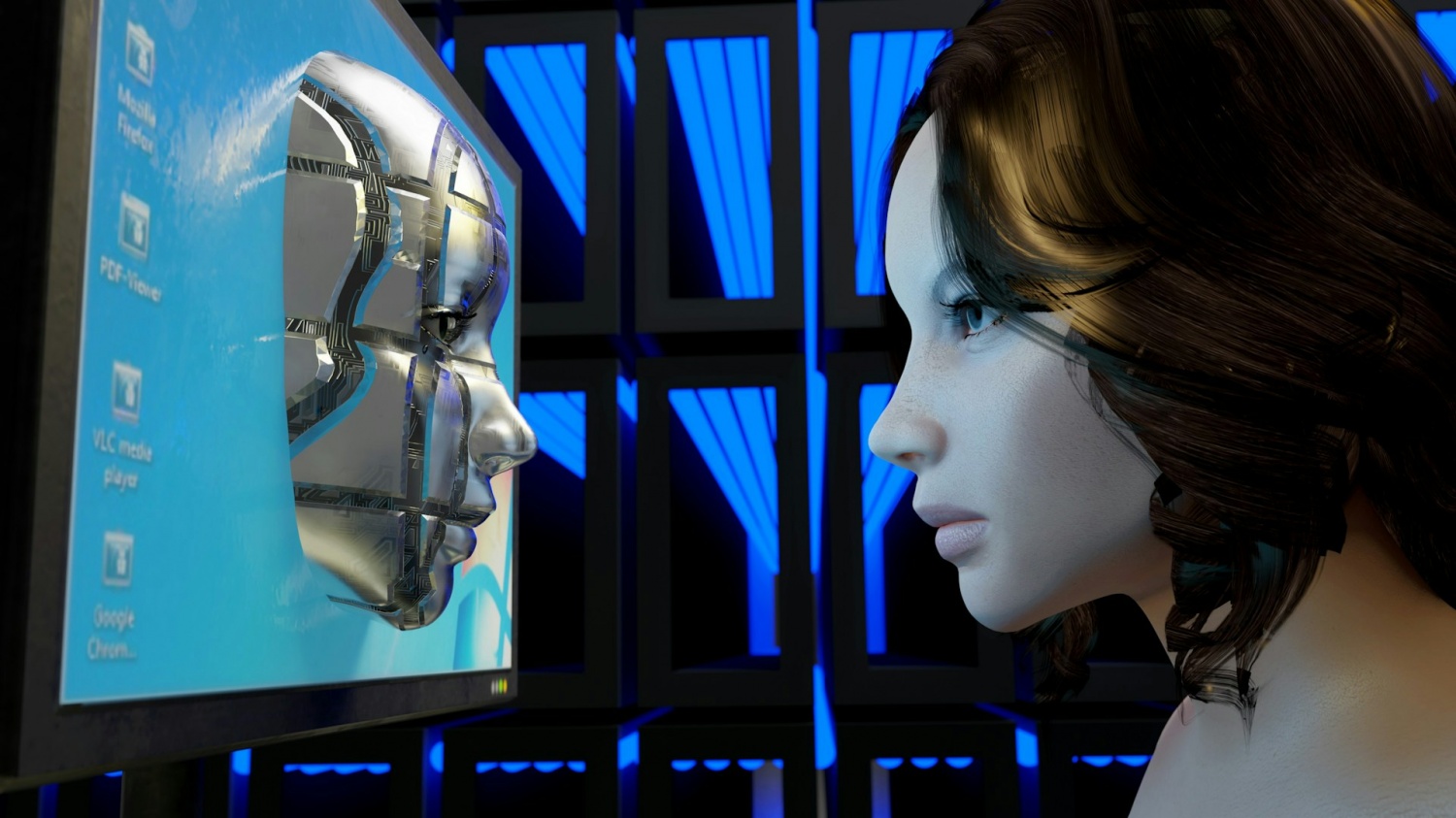

(Photo: Andres Siimon from Unsplash) Deepfakes are already dangerous because they can mislead people, and when paired with a banking trojan, they do even more harm to victims.

The White House also advised tech companies to restrict web services and apps that claim to let users create or alter sexual images of people without their consent.

Likewise, cloud service providers could bar explicit deepfake websites and apps from using their services.

New generative AI tools make it easy to turn someone's likeness into a sexually explicit deepfake and to share those realistic images on social media or in chat rooms. Whether they are children or celebrities, victims have few ways to stop it.

Citing reports, the White House says image-based sexual abuse is currently one of the fastest-growing harmful uses of AI.

Malicious actors can also use AI to spread real nonconsensual images at alarming speed and scale.


Officials Behind the Call

The announcement was written by two White House officials: Arati Prabhakar, director of the Office of Science and Technology Policy, and Jennifer Klein, head of the Gender Policy Council.

In a phone interview, Prabhakar said the White House hopes that companies connected to the rise in image-based sexual abuse will act quickly and decisively to stop it.

AI Deepfake Cases

Concerns about AI-generated sexual imagery are being raised around the world. In April of last year, students in London called for AI education that focuses on preventing fake nudes, not just exam cheating.

London police opened an investigation after fake nude images of students were created and circulated. One student who says she was targeted with AI-generated nude images of herself said she feels ashamed of her friends' actions, even though she does not know who was responsible.

The student called the situation "super frustrating": they want people to believe the images are not of them, yet even knowing that the images are fake, it is still embarrassing. The teenager added that although teachers forbid students from using AI to cheat on tests or assignments, they rarely address the more devious and creative ways it can be misused.

In Tasmania, Australia, a man recently became the first person in that jurisdiction to be charged with child abuse offenses involving AI-generated material.

The 48-year-old was convicted after investigators discovered hundreds of illegal AI-generated videos that he had downloaded, uploaded, and possessed.

Following his arrest, the Gravelly Beach man pleaded guilty on March 26, 2024, to accessing and possessing child abuse material.



ⓒ 2024 TECHTIMES.com All rights reserved. Do not reproduce without permission.