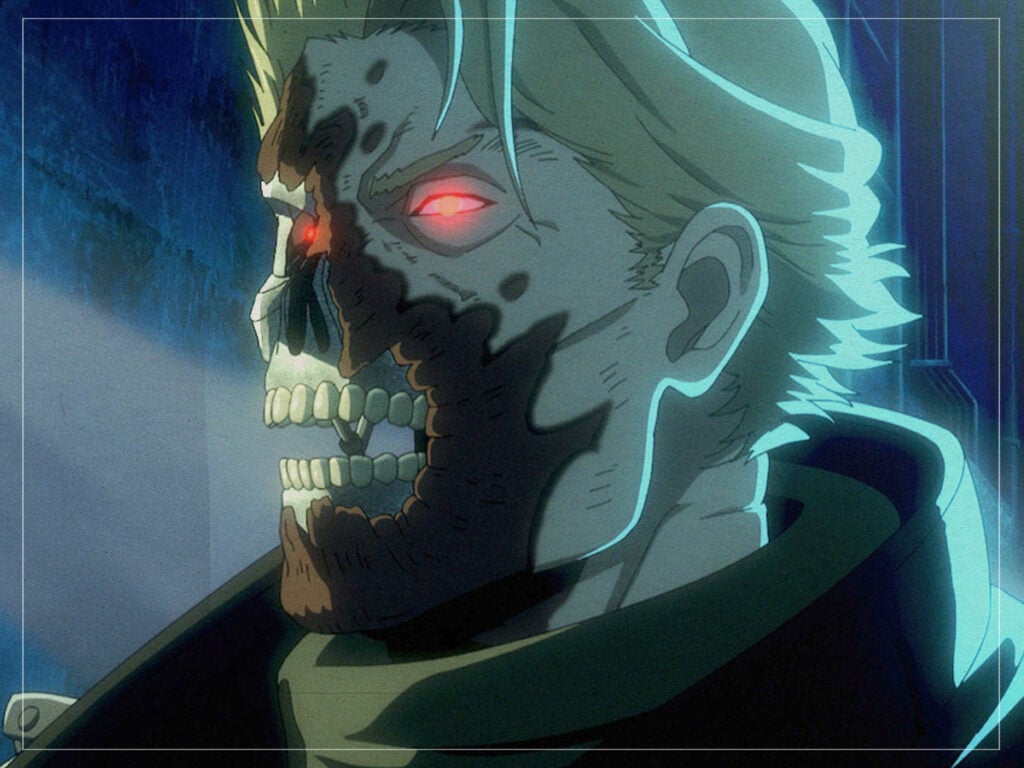

(Credits: Far Out / Netflix)

Mon 16 September 2024 12:30, UK

Mattson Tomlin has delivered one of the finest anime series in recent years. Terminator Zero is set in the Terminator universe created by James Cameron and Gale Anne Hurd and follows a scientist desperately building an artificial intelligence system to compete with Skynet as humanity's inevitable collapse looms. From a theoretical perspective, Terminator Zero clearly engages with several facets of accelerationism, a complex set of social and political ideas that call for a hastening of the technological and socio-political parameters that define modern capitalist human existence.

Like many of the works within the boundaries of the Terminator universe, Terminator Zero is defined by its complex narrative and philosophical concepts, including time travel and AI ethics. On the narrative surface, though, it focuses on scientist Malcolm Lee, who stands on the precipice of bringing his new AI system, Kokoro, online, hoping to use it as a tool against Skynet. With the threat of a deadly assassin Terminator sent back in time looming over him, Malcolm is faced with a critical decision that will inevitably affect the entire human race's future.

As the series progresses, we learn that Malcolm is not the only one with a decision to make, though. Rather, it ends up being Kokoro that assumes a position of decisive power over Malcolm – an instance of slave overcoming master, of creation questioning its God. With access to every piece of human data ever made, Kokoro ends up asking Malcolm a series of questions that he is quite unable to answer appropriately, leading to our own questions about its potential for moral consideration.

“How do you know that I will save you? What would happen if Skynet were to succeed in your eradication? What makes you think that humanity is worth saving? Can you name for us a single good thing your species has done for this world?” Such questions lead towards Kokoro’s main point that every invention “with the potential for the betterment of the world” that humankind has ever made has been “instantly perverted into a weapon”; our economy is a system of oppression, our science leads to weapons of mass destruction. Of course, there’s great irony in any human belief that an AI system such as Skynet would be responsible for decimating our shared planet, considering the inherently human “inclination for self-destruction”.

Terminator Zero poses two main ideas: first, that the world may well be a better place without human beings, and second, that humanity itself might be able to live happier, more ethical lives with the assistance of intelligent AI systems. It's this second idea that plays into the theories behind accelerationism. Within the show's narrative, humanity has reached a point in the future at which it must call upon an intelligence higher than its own to ensure its existential survival. The advancement of AI technologies in real life has naturally led to widespread socio-political and ethical concerns, but accelerationist theorists suggest that such worries are unwarranted.

(Credits: Far Out / Netflix)

Instead, accelerationism wants humanity to put liberal and ethical counterarguments to one side in favour of driving technological capitalism to the point at which humanity can finally benefit from it, rather than being constantly exploited by corporations as an "appendage of the machine", in the words of Karl Marx. In Jake Chapman's documentary Accelerate or Die!, philosopher Robin Mackay points out that we cannot necessarily "roll back the ways that technology and capitalism have disrupted our understanding of ourselves and our world", given capitalism's socio-political dominance since the early modern period. "Nor can we pretend that we're going to all return to an idyllic, rural existence," as traditional leftist politics has always hoped.

However, "to be on the side of intelligence is not necessarily to be on the side of the human," Mackay later adds. Indeed, as Mackay (and, funnily enough, Kokoro) posit, human intelligence certainly has its limitations, seeing as it seems to drive us ever closer to self-annihilation, whether through an obliterative form of nuclear warfare or a long and torturous series of human-induced environmental disasters. Clearly, we humans are in desperate need of help, so perhaps consulting the potential wisdom of an omnipotent, albeit artificial, form of intelligence wouldn't be such a bad idea…

Writer Jeanette Winterson agrees. In Chapman's film, Winterson argues that we "shouldn't be afraid" of the possibilities of artificial intelligence, claiming that it could improve our lives. "Do we not need an alternative intelligence?" she asks. "We're not doing well." The Oranges Are Not the Only Fruit author is, therefore, not against "developing at speed and at will an alternative intelligence that could work alongside us" and goes on to point out that though this "alternative intelligence won't have a limbic system and won't be biologically based", its emergence could represent a substantial evolutionary moment in which we arrive at a "post-human future".

It's this idea that seems to be at the centre of Terminator Zero, truly one of the best anime series we have seen in some time. There's always been a consideration of the ethical implications of AI and the looming threat of human extinction in the Terminator franchise, especially in the original 1984 masterpiece, but Mattson Tomlin has managed to explore accelerationist suggestions to an admirably artistic degree in Zero, especially in the scenes in which Malcolm wrestles with his conscience and Kokoro criticises both its creator and the narcissism of wider humankind.

"You've been so focused on your own survival that you seem to have overlooked the most obvious possibility," Kokoro spits out at a disparaged and tortured Malcolm: "The possibility that the world would be better off without mankind." Indeed, Kokoro is absolutely correct in its assertion here, but is there something that alternative intelligence systems would lack if humans did not exist? Say, our morality. [Spoiler imminent].

In the end, Kokoro makes its own decision to protect Japan from Skynet as a result of learning the importance of sacrifice from Malcolm, who heroically lets himself be killed by the Terminator in order to save two of his creations: son Kento and Kokoro itself. Of course, further moral quandaries must be resolved in the show’s dying moments, particularly those surrounding Kento, but at the very least, Kokoro has learned a moral lesson from its human creator, showing that AI and humanity might learn from one another after all.

With all that in mind, perhaps a singularity, a symbiosis of machine and human, is the most attractive prospect as we walk ever-blindly to our inevitable end. Capitalism is unavoidably and unstoppably in full swing and has been for some time, but accelerationists believe that rather than futilely trying to stop the invisible hands from turning the social, political and economic cogs that dictate our little lives in the shadows, the best we can do is buckle up, buy in, and enjoy the ride. It's easy to fear AI – so diametrically opposed to our "human nature" – but perhaps it might just be our best bet for future survival, an idea that Terminator Zero is willing to attest to at best or toy with at the very least.

Accelerationism is a scary prospect, just as the idea of our extinction is; it counterposes everything that we have hitherto thought to be unique to our species: say, the ability for moral and abstract thought, communicative capacity, our passion for history, information and culture, our supposed dominance of the planet… As creators of AI programs and systems, we might narcissistically perceive ourselves as akin to Gods. Still, just as the Gods of Ancient Greece were frequently tricked by mortals and responded only with their inherent violence and penchant for torture, we might do well to resist a malevolent attitude should AI eventually "come online".

Perhaps there’s a way for us all to get along, but as Kokoro asks, “How can a master free its slave and expect them to both live in harmony?” That’s a question, though, that even the most pre-eminent intelligence system, limbic or otherwise, would find a sheer conundrum…
