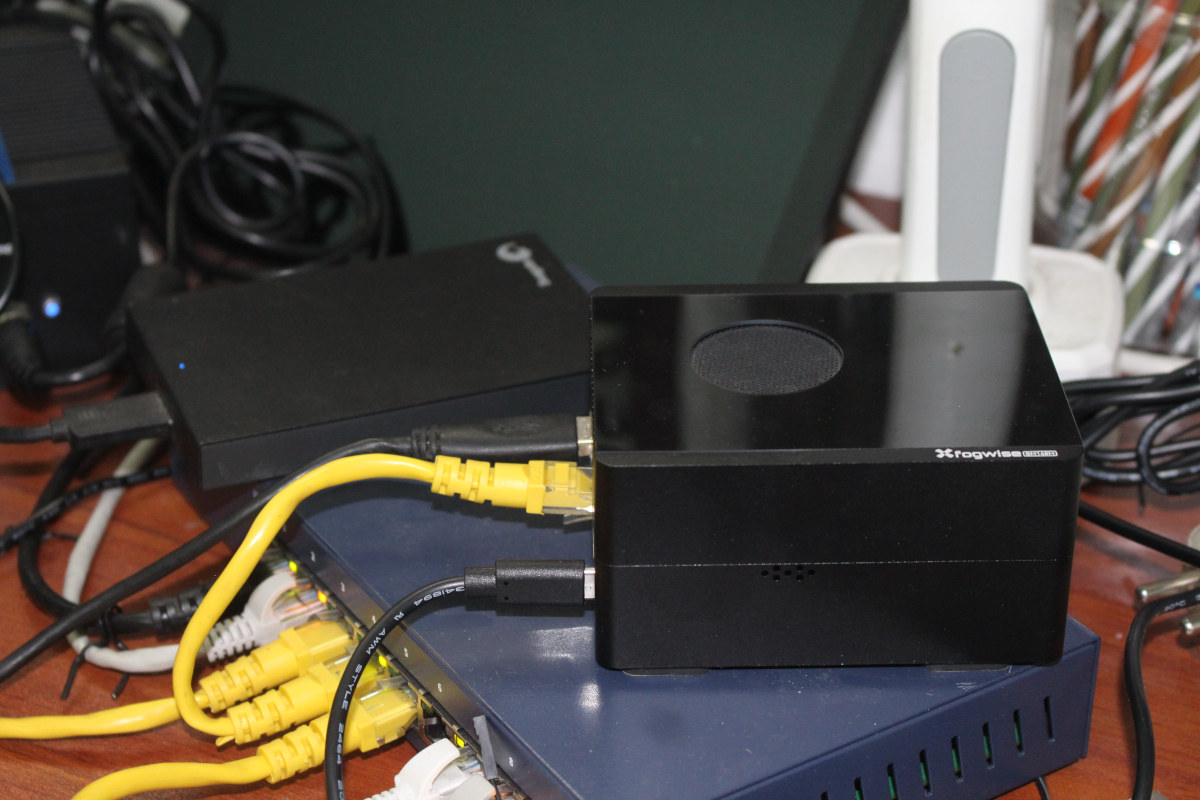

After checking out Radxa Fogwise Airbox hardware in the first part of the review last month, I’ve now had time to test the SOPHGO SG2300x-powered AI box with an Ubuntu 20.04 Server image preloaded with CasaOS as well as Stable Diffusion and Llama3 containers.

I’ll start the second part of the review by checking out the pre-installed Stable Diffusion text-to-image generator and Llama3 AI chatbot, then manually install the imgSearch AI-powered image search engine in the CasaOS web dashboard, test the Python SDK in the command line, and run some AI vision models, namely Resnet50 and YOLOv8.

Radxa Fogwise Airbox OS installation

Last month, Radxa only provided an Ubuntu Server 20.04 image with just the basics pre-installed. The company has now improved the documentation and also made two images available for the Radxa Fogwise Airbox:

- Base image (1.2GB) – Based on Ubuntu Server 20.04; contains only Sophon base SDK and backend.

- Full image (9.5GB) – Same as above, but adding the Radxa LLM frontend, CasaOS, and demos for common LLMs.

Beginners should go with the second even if it takes longer to download, as it will make everything much easier to test out of the box. So that is the image I went with (Radxa Airbox B5 0606), and I used USBImager to flash it to a 32GB microSD card before inserting the card into the Fogwise Airbox and monitoring the installation through the serial console, as I did in the first part of the review.

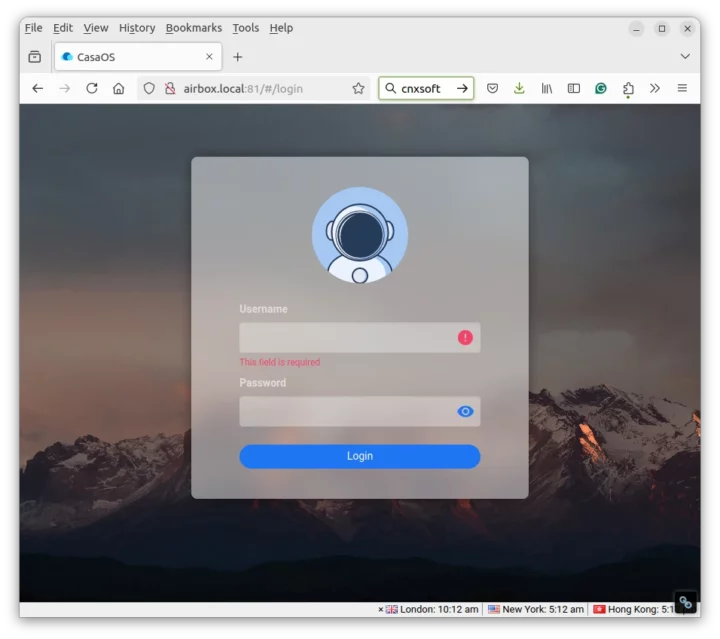

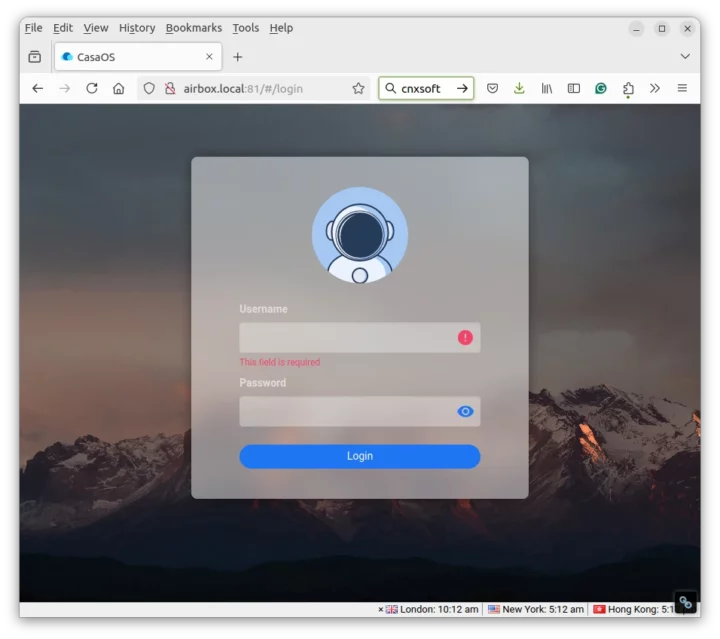

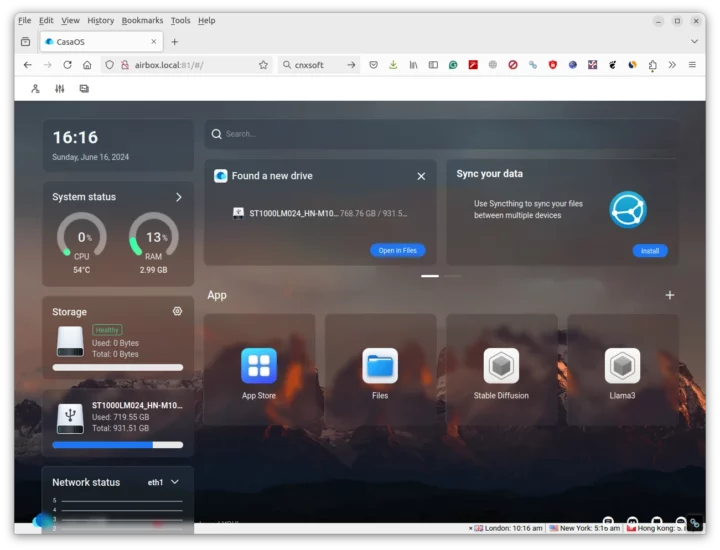

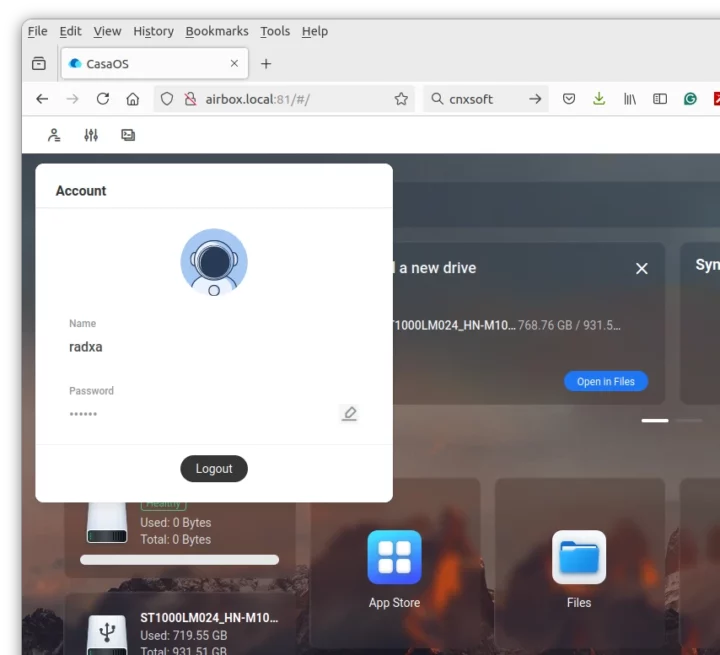

After installation is complete, we can see airbox.local’s TCP port 81 is open and we can access the CasaOS dashboard using radxa and radxa as login credentials.

jaufranc@CNX-LAPTOP-5:~/edev/twentyseventeen-child$ nmap -F Airbox.local

Starting Nmap 7.80 ( https://nmap.org ) at 2024-06-16 16:11 +07

Nmap scan report for Airbox.local (192.168.31.71)

Host is up (0.0012s latency).

rDNS record for 192.168.31.71: Airbox

Not shown: 94 closed ports

PORT STATE SERVICE

22/tcp open ssh

80/tcp open http

81/tcp open hosts2-ns

139/tcp open netbios-ssn

445/tcp open microsoft-ds

8888/tcp open sun-answerbook


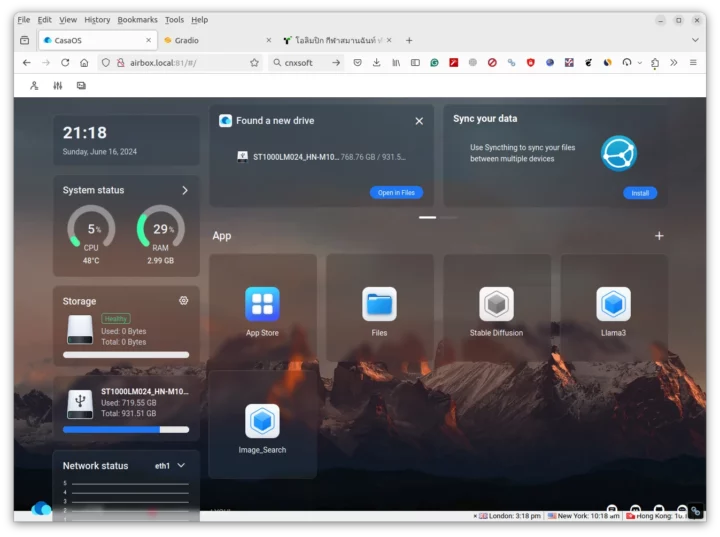

Using Radxa Fogwise Airbox with CasaOS

The dashboard shows some system information (I also connected a USB hard drive), but the most important part is that Stable Diffusion and Llama3 are already installed. You may consider changing the CasaOS username and password, and accessing the Linux terminal through SSH with username (linaro) and password (linaro) to change the password there as well…

Here is some extra information about the system from inxi.

linaro@Airbox:~$ sudo inxi -Fc0

System:

Host: Airbox Kernel: 5.4.217-bm1684-g18c6a7c915a2-dirty aarch64 bits: 64

Console: tty 0 Distro: Ubuntu 20.04 LTS (Focal Fossa)

Machine:

Type: ARM Device System: Radxa AICore BM1684x IO Board details: N/A

CPU:

Topology: 8-Core (2-Die) model: bm1684x variant: cortex-a53 bits: 64

type: MCP MCM

Speed: 2300 MHz min/max: 1150/2300 MHz Core speeds (MHz): 1: 2300 2: 2300

3: 2300 4: 2300 5: 2300 6: 2300 7: 2300 8: 2300

Graphics:

Message: No Device data found.

Display: server: No display server data found. Headless machine?

tty: 80×24

Message: Advanced graphics data unavailable in console for root.

Audio:

Device-1: Realtek type: USB driver: hid-generic,snd-usb-audio,usbhid

Sound Server: ALSA v: k5.4.217-bm1684-g18c6a7c915a2-dirty

Network:

Device-1: ethernet driver: bm_dwmac

Device-2: ethernet driver: bm_dwmac

IF-ID-1: docker0 state: up speed: N/A duplex: N/A mac: 02:42:01:de:71:42

IF-ID-2: dummy0 state: down mac: 7a:fa:cc:19:46:e9

IF-ID-3: eth0 state: up speed: 1000 Mbps duplex: full

mac: 00:e0:4c:05:7b:70

IF-ID-4: eth1 state: down mac: 00:e0:4c:05:7b:71

IF-ID-5: sit0 state: down mac: 00:00:00:00

IF-ID-6: vethbcdc398 state: up speed: 10000 Mbps duplex: full

mac: 9a:a2:52:18:f9:a2

Drives:

Local Storage: total: 989.76 GiB used: 471.56 GiB (47.6%)

ID-1: /dev/mmcblk0 model: CUTB42 size: 58.24 GiB

ID-2: /dev/sda type: USB vendor: Seagate model: ST1000LM024 HN-M101MBB

size: 931.51 GiB

Partition:

ID-1: / size: 5.82 GiB used: 168.3 MiB (2.8%) fs: overlay source: ERR-102

ID-2: /boot size: 117.1 MiB used: 67.4 MiB (57.5%) fs: vfat

dev: /dev/mmcblk0p1

ID-3: /opt size: 1.95 GiB used: 246.5 MiB (12.4%) fs: ext4

dev: /dev/mmcblk0p6

Sensors:

Message: No sensors data was found. Is sensors configured?

Info:

Processes: 219 Uptime: 10m Memory: 2.99 GiB used: 896.1 MiB (29.3%)

Init: systemd runlevel: 5 Shell: bash inxi: 3.0.38


Only 2.99GB of RAM is available to the system, although my machine is fitted with 16GB of RAM. That’s because the RAM is shared with the NPU (Neural Processing Unit), VPU (Video Processing Unit), and VPP (Graphics Acceleration Unit). We can check the settings with the memory_edit utility as follows:

linaro@Airbox:~$ memory_edit.sh -p bm1684x_sm7m_v1.2.dtb

Info: use dts file /opt/sophon/memory_edit/output/bm1684x_sm7m_v1.2.dts

Info: chip is bm1684x

Info: =======================================================================

Info: get ddr information …

Info: ddr12_size 8589934592 Byte [8192 MiB]

Info: ddr3_size 4294967296 Byte [4096 MiB]

Info: ddr4_size 4294967296 Byte [4096 MiB]

Info: ddr_size 16384 MiB

Info: =======================================================================

Info: get max memory size …

Info: max npu size: 0x1dbf00000 [7615 MiB]

Info: max vpu size: 0xb8000000 [2944 MiB]

Info: max vpp size: 0x100000000 [4096 MiB]

Info: =======================================================================

Info: get now memory size …

Info: now npu size: 0x1c0000000 [7168 MiB]

Info: now vpu size: 0x80000000 [2048 MiB]

Info: now vpp size: 0xc0000000 [3072 MiB]


That means we are left with 4096 MB for the system with this device tree file. If needed, it can be changed with the script as follows:

memory_edit.sh -c -npu 7360 -vpu 2360 -vpp 4096 bm1684x_sm7m_v1.2.dtb

sudo cp /opt/sophon/memory_edit/emmcboot.itb /boot/emmcboot.itb && sync

sudo reboot


I have not changed it because the current memory configuration works fine for the AI models used.
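The arithmetic behind the memory_edit.sh figures above can be checked with a few lines of Python. This is only a sketch: the helper name `system_mib` is made up for illustration, and it assumes that whatever is not reserved for the NPU, VPU, and VPP is what remains for Linux.

```python
MIB = 1024 * 1024
TOTAL_MIB = 16384  # ddr_size reported by memory_edit.sh


def system_mib(npu_mib, vpu_mib, vpp_mib, total_mib=TOTAL_MIB):
    """Memory left for Linux once the three accelerator regions are carved out.

    Hypothetical helper for illustration, not part of the Sophon SDK.
    """
    return total_mib - (npu_mib + vpu_mib + vpp_mib)


# Current split, converted from the hexadecimal byte sizes shown in the log.
current = system_mib(0x1C0000000 // MIB, 0x80000000 // MIB, 0xC0000000 // MIB)

# Split requested by the example "memory_edit.sh -c" command.
example = system_mib(7360, 2360, 4096)

print(f"current split leaves {current} MiB, example split leaves {example} MiB")
```

With the example command, more memory goes to the accelerators, so less is left for the operating system and containers.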

Stable Diffusion test

Let’s now click on the Stable Diffusion icon in the CasaOS dashboard to start and open it. The first time I tried, it looked like it would not work, and Firefox was unable to connect. That’s simply because it takes time for the container to start, so you’ll want to wait a minute or two before trying again.
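Rather than reloading the page by hand, one could poll the container's TCP port until it accepts connections. The sketch below uses only the Python standard library; `wait_for_port` is a hypothetical helper, and the port number in the usage comment is a placeholder that should be replaced with the one shown in the app's CasaOS settings.

```python
import socket
import time


def wait_for_port(host, port, timeout=120.0, interval=2.0):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection raises OSError while nothing is listening yet.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Example usage (placeholder port, adjust to your setup):
# wait_for_port("airbox.local", 8999, timeout=180)
```

A successful TCP connection only means the web server is listening; the model still has to be loaded from the web interface afterwards.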

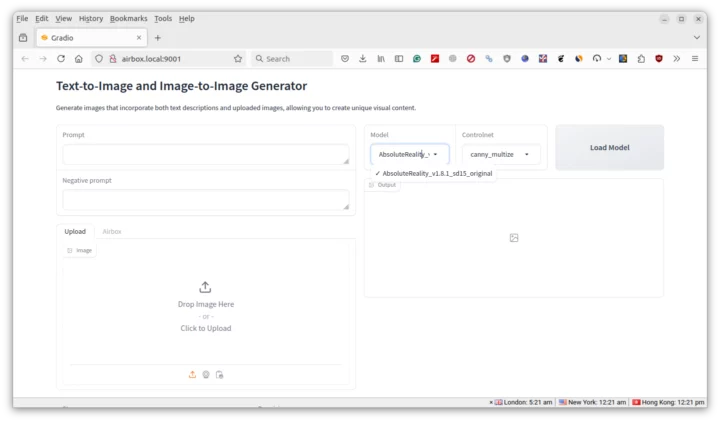

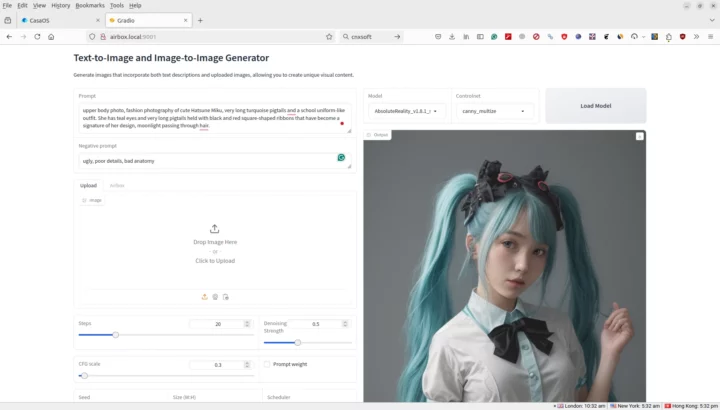

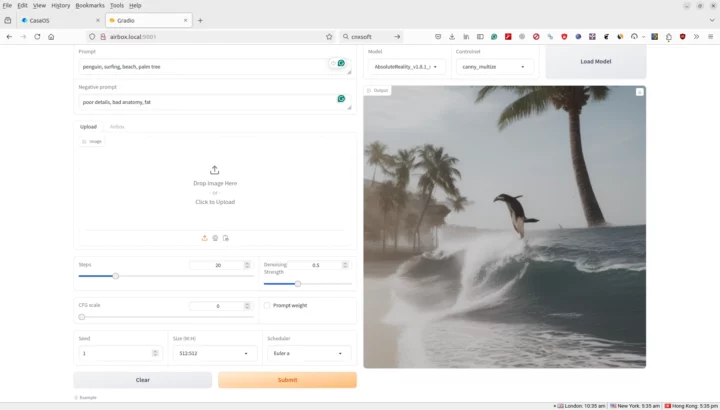

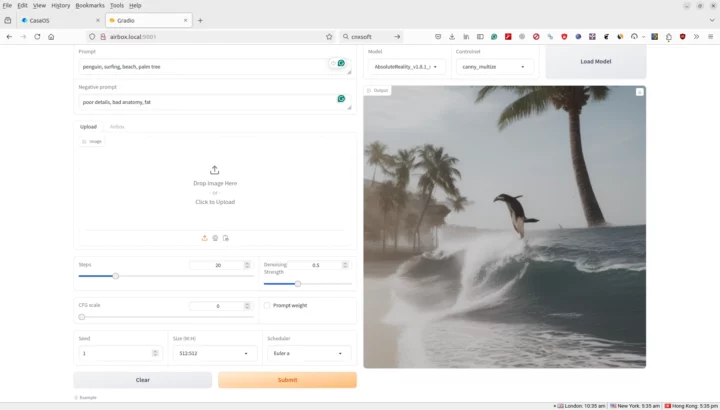

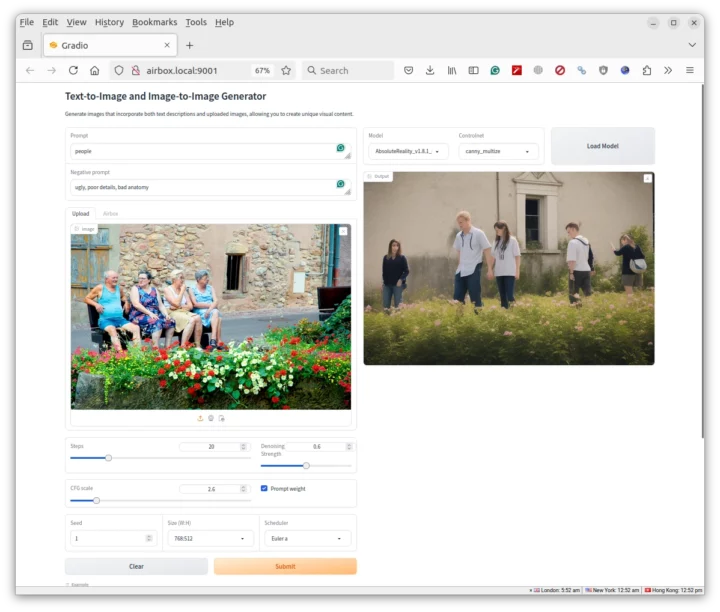

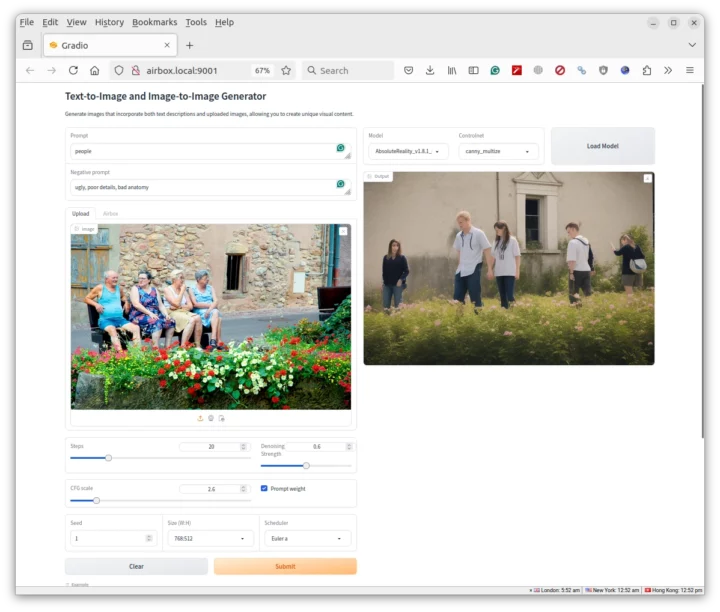

The web interface for Stable Diffusion is built with Gradio, which explains why the window is called “Gradio”. The first step is to select the Model and Controlnet and click on the Load Model button, although there’s not much choice here since each dropdown menu only has a single entry. It takes around one minute to load the model, and then we can try the text-to-image generator. I started with one of the examples provided at the bottom with both a prompt:

1girl, ponytail ,white hair, purple eyes, medium breasts, collarbone, flowers and petals, landscape, background, rose, abstract

and a negative prompt:

ugly, poor details, bad anatomy

plus various parameters including denoising strength and CFG (classifier-free guidance) scale.

It took about 7 seconds to create the image. I then tried my own text prompt creating an image with a penguin surfing at a beach with some palm trees.

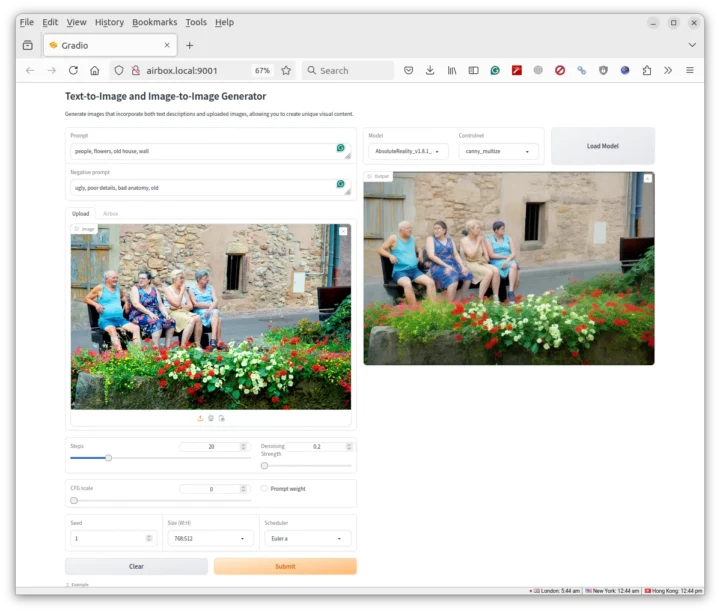

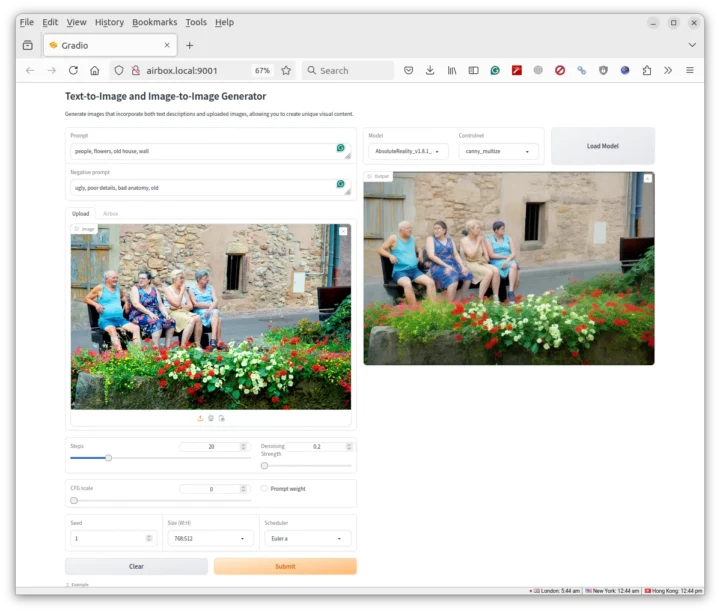

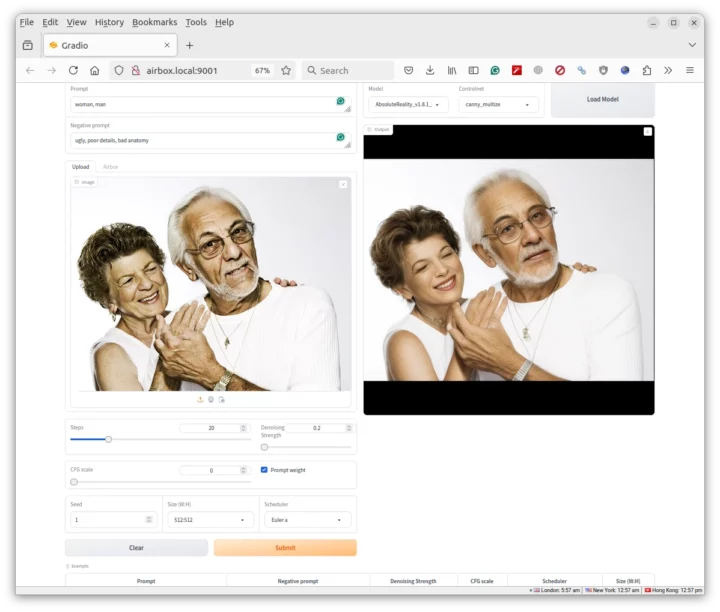

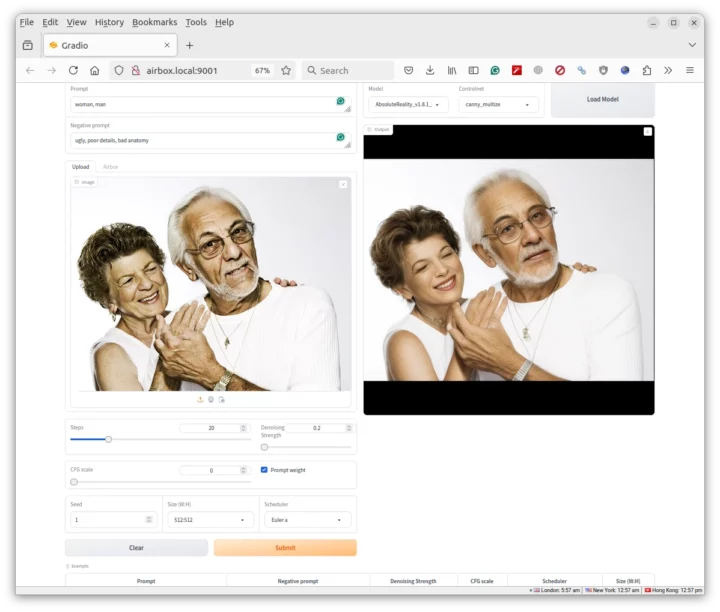

It also took 5 to 7 seconds to create the image. Adding an input image can be quite fun, and it helps to better understand the denoising strength and CFG scale parameters. Since all processing is done locally, you don’t have to worry about sharing personal photos online. I used a stock photo with older people as a test.

I set the denoising strength to the minimum to keep most of the original image, and the CFG scale to 0 to give it some flexibility. The resulting image is very close to the original.

If I boost the denoising strength and play around with the CFG scale to get an acceptable result… Stable Diffusion still takes the input as a guide, but it has much more flexibility when creating a photo.

I’ve noticed that when human subjects are small in a photo the results don’t look that good. Let’s try another photo.

That’s better. Increasing the denoising strength creates images further and further from the original. The CFG scale can create “monsters” with funny fingers and physical attributes. All the tests I ran completed in 5 to 10 seconds.

Llama3 on Fogwise Airbox

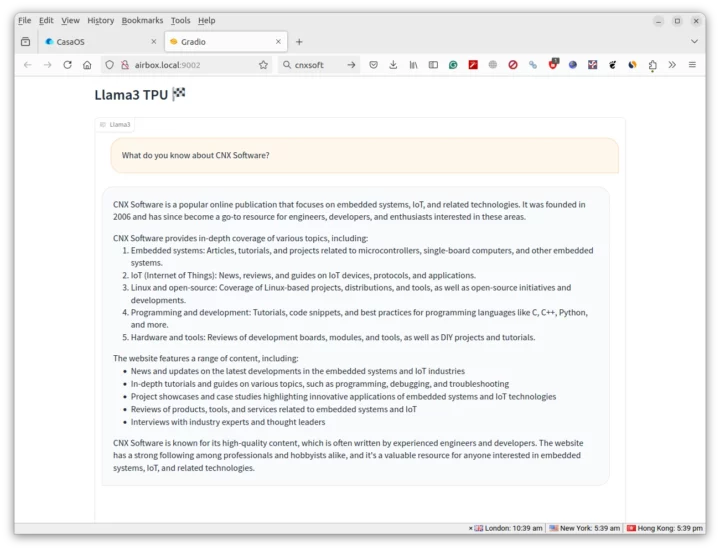

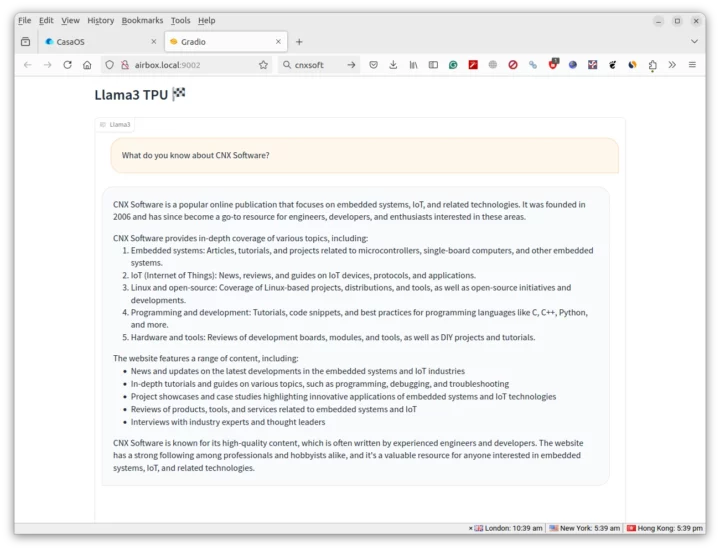

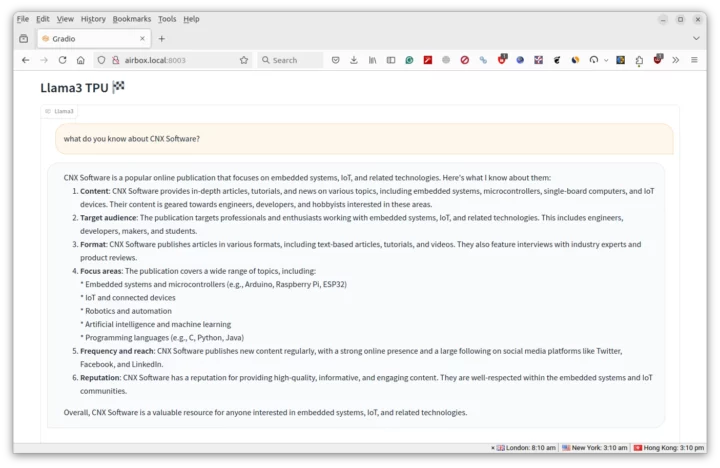

Time to shut down the Stable Diffusion container and start Llama3. We’re presented with a text prompt and a submit button at the bottom of the page, so I asked whether Llama3 knew anything about CNX Software.

Most of it gives a pretty good summary of what CNX Software does, although the starting year is wrong. But I was told (on X), that I should not expect Llama3 to spew accurate information. I guess it’s some art project then 🙂

We don’t have performance metrics in the web interface, so I shot a screencast in Ubuntu to show the rendering speeds.

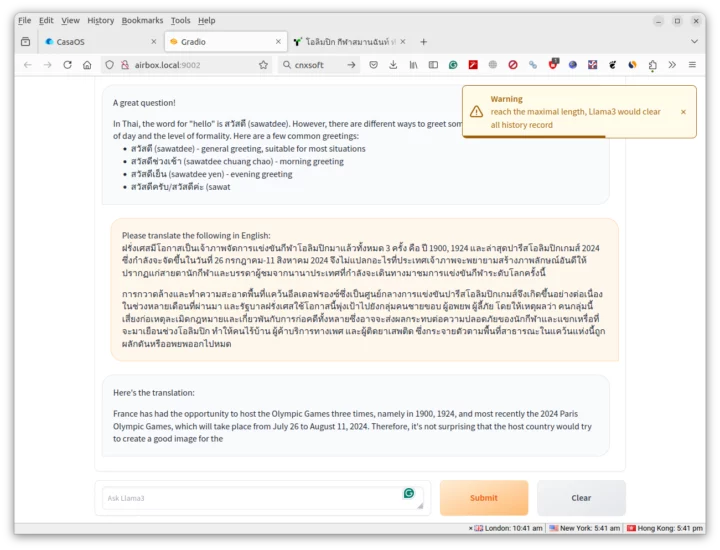

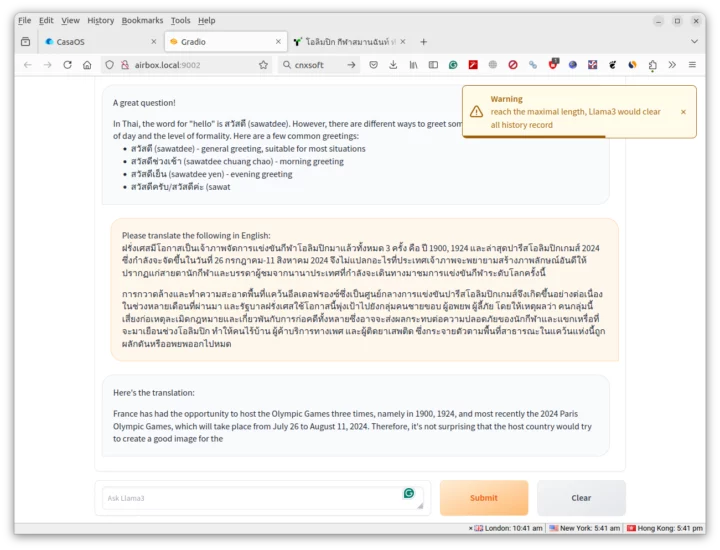

I then asked Llama3 to translate a relatively short text into English, but it stopped in the middle of the answer with a warning reading “reach the maximal length, Llama3 would clear all history record”.

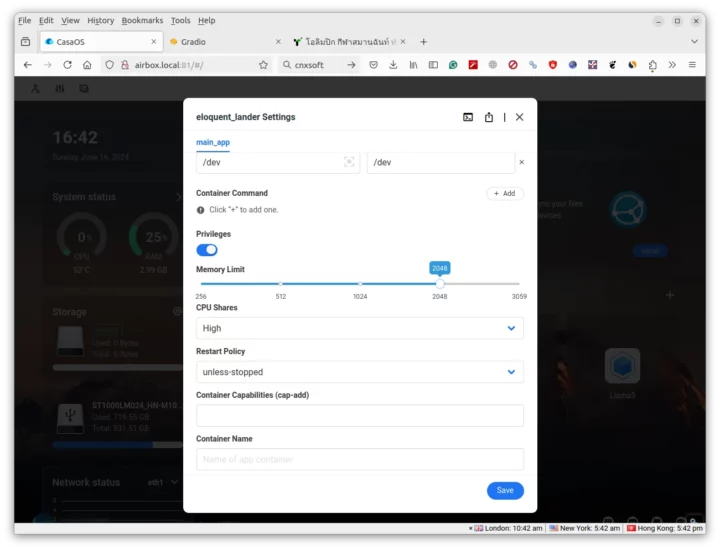

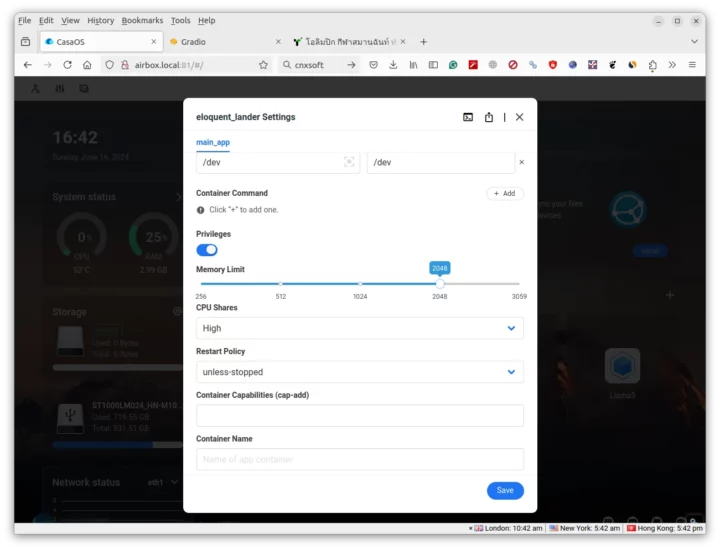

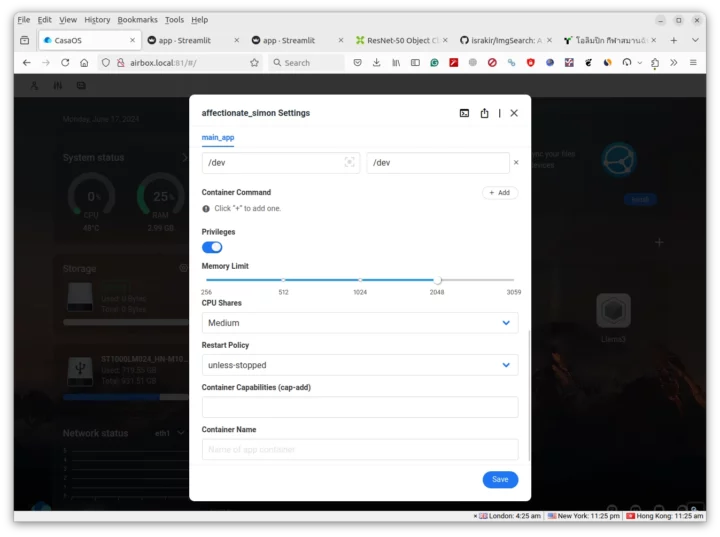

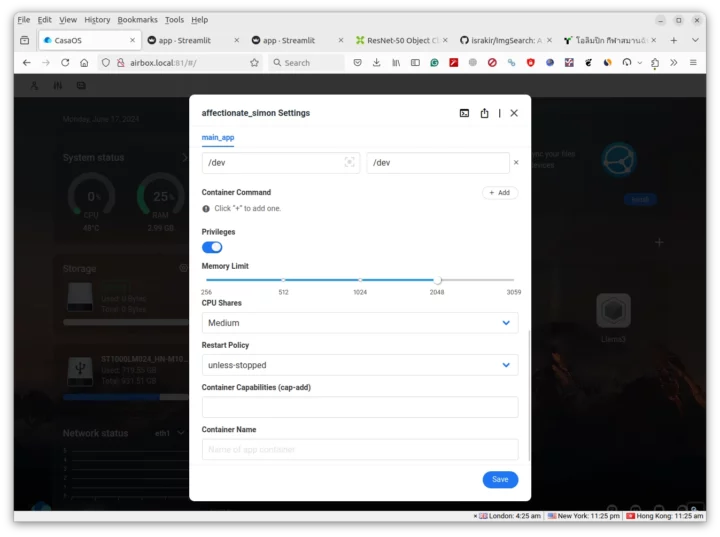

So I stopped the container, changed the memory limit from 256 to 2048, and restarted Llama3.

But I got the same issue. Radxa told me it’s possible to change that:

Our Llama3 is fixed length input due with TPU design, for now is 512 length, if the total input + output > 512 the model would clear all of history information, if you want to increase the length of model, you can compile it to 1024 or more follow this link: (chinese), but it would cost more inference time, or you can set --enable_history to False to ignore the history

I don’t think I can do that in CasaOS, but I’ll try again later in the command line.
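To make Radxa's explanation more concrete, here is a toy model of a fixed-length context with history. It is a sketch of the behaviour as described in the quote, not the actual LLM-TPU code: the class name, the method, and the token counts are all made up for illustration.

```python
class FixedWindowHistory:
    """Toy model: history is wiped when a turn would overflow the window."""

    def __init__(self, max_len=512, enable_history=True):
        self.max_len = max_len              # sequence length the model was compiled for
        self.enable_history = enable_history
        self.used = 0                       # tokens currently held as history

    def add_turn(self, prompt_tokens, answer_tokens):
        """Record one question/answer pair; return True if history was cleared."""
        turn = prompt_tokens + answer_tokens
        if not self.enable_history:
            # Without history, every question starts from an empty context.
            self.used = 0
            return False
        if self.used + turn > self.max_len:
            # Input + output no longer fits: all history is dropped.
            self.used = 0
            return True
        self.used += turn
        return False


chat = FixedWindowHistory(max_len=512)
print(chat.add_turn(100, 200))  # first exchange fits in the window
print(chat.add_turn(50, 200))   # second exchange overflows 512 tokens
```

In this simplified view, compiling the model for 1024 tokens would let both exchanges fit, at the cost of the longer inference time Radxa mentions.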

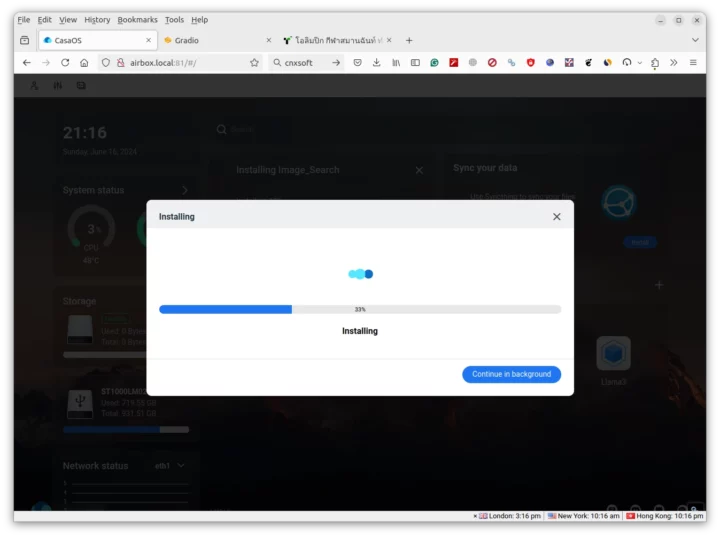

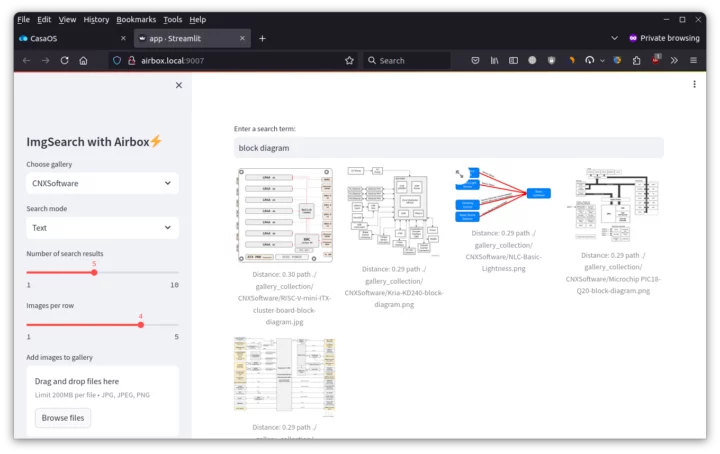

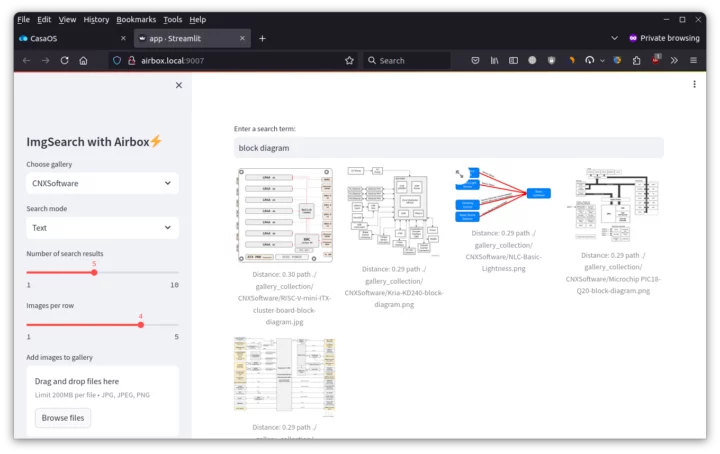

Installing and running imgSearch in CasaOS

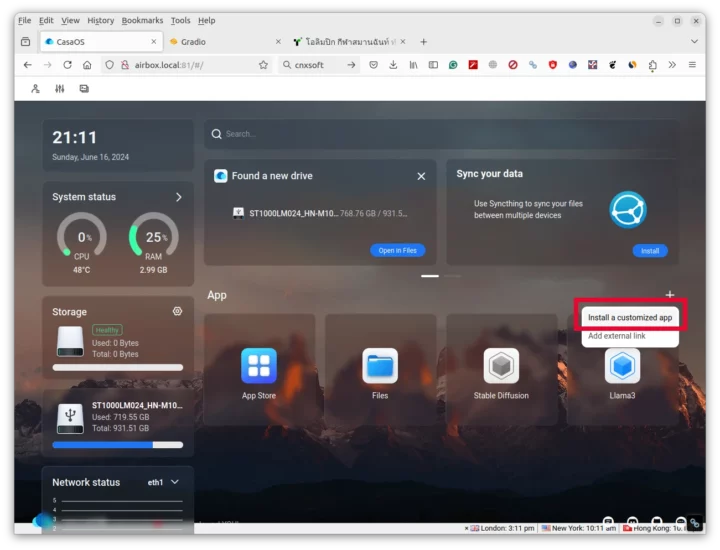

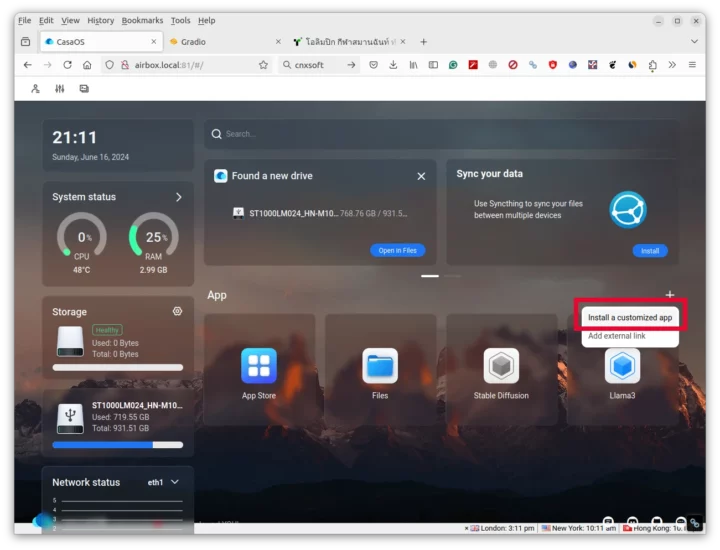

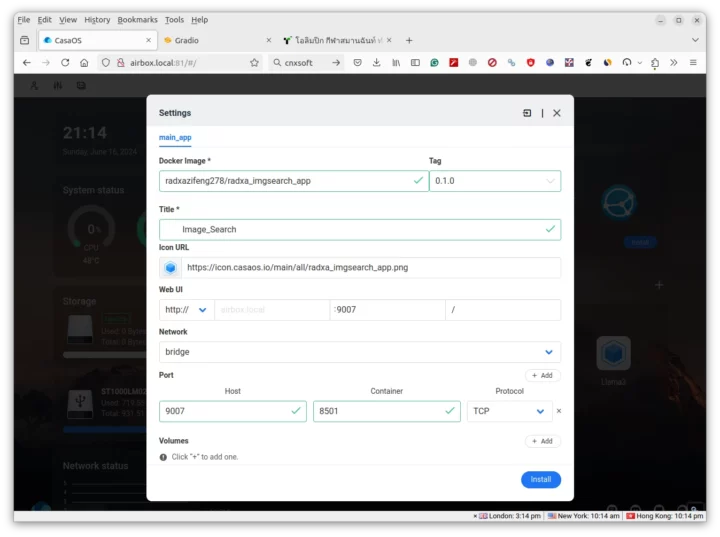

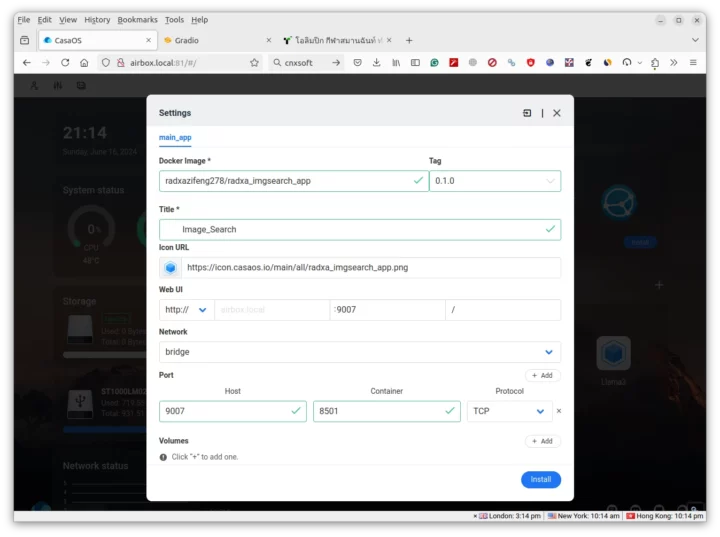

We’ve only used some preinstalled apps so far. But we can install extra apps manually, including “Radxa whisper”, “Radxa ImageSearch”, and “Radxa chatdoc”. I’ll go with the imgSearch image search implementation.

The first step is to click on the + icon and select “Install a customized app”.

Then we need to add the parameters for the docker image:

- Docker Image – radxazifeng278/radxa_imgsearch_app:0.1.0

- Title – Image_Search

- Web UI port – 9007 (you can select any unused TCP port)

- Host Port – 9007

- Container Port – 8501

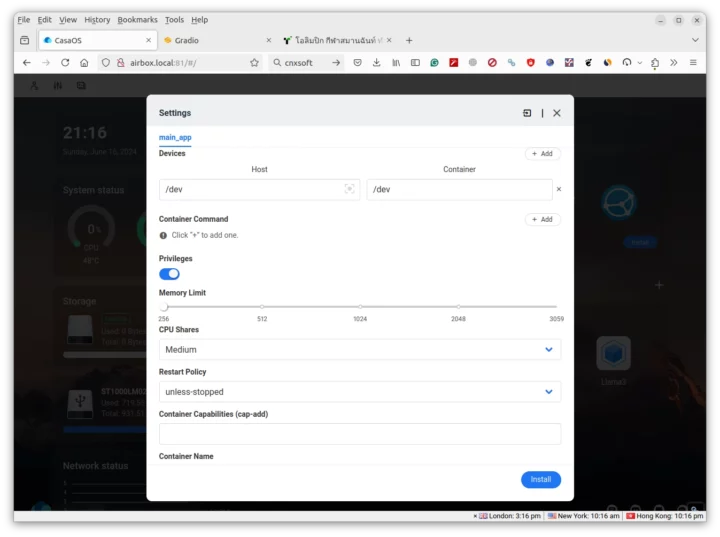

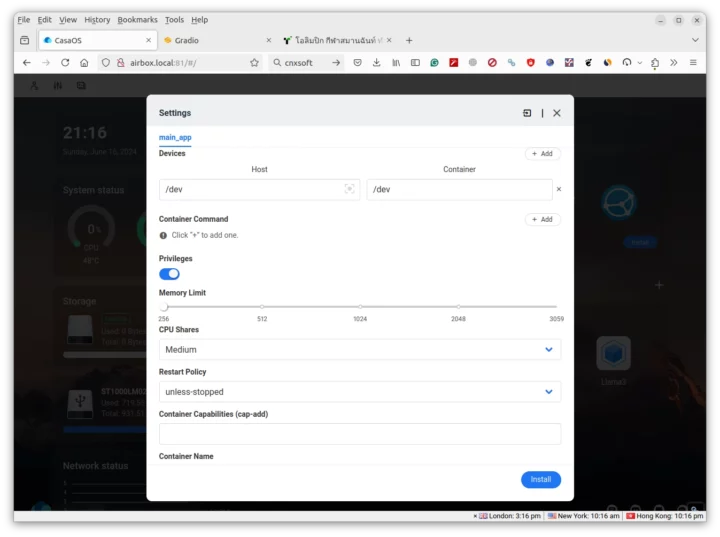

- Host Devices – /dev

- Container Devices – /dev

- CPU Shares – Medium
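For readers who prefer the command line, the parameters above roughly map to a `docker run` invocation. The Python sketch below only builds and prints such a command. It assumes CasaOS passes the settings to Docker more or less unchanged, that mapping the whole /dev directory with `--device` behaves the same as in CasaOS, and it leaves out CPU shares since the numeric value behind “Medium” is not known. The container name and the helper function are made up for illustration.

```python
import shlex


def docker_run_command(image, name, host_port, container_port, device, memory_mb=None):
    """Build a 'docker run' command line from CasaOS-style app settings."""
    cmd = [
        "docker", "run", "-d",
        "--name", name,
        "-p", f"{host_port}:{container_port}",  # Host Port : Container Port
        "--device", f"{device}:{device}",       # Host Devices : Container Devices
    ]
    if memory_mb is not None:
        cmd += ["--memory", f"{memory_mb}m"]    # optional memory limit
    cmd.append(image)
    return shlex.join(cmd)


command = docker_run_command(
    image="radxazifeng278/radxa_imgsearch_app:0.1.0",
    name="image_search",  # hypothetical container name
    host_port=9007,
    container_port=8501,
    device="/dev",
)
print(command)
```

The command is printed rather than executed so it can be reviewed first; I have not tested it against the CasaOS-managed container.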

Now click on the “Install” button to start the installation process which only takes several seconds.

We now have a new app called “Image_Search”.

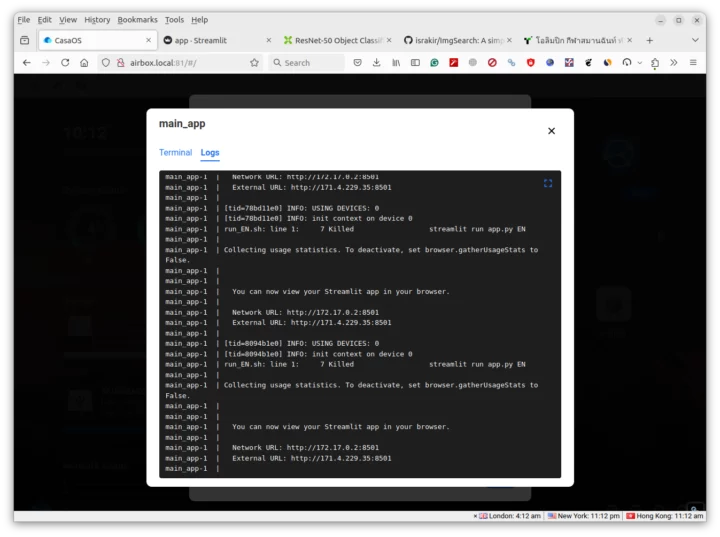

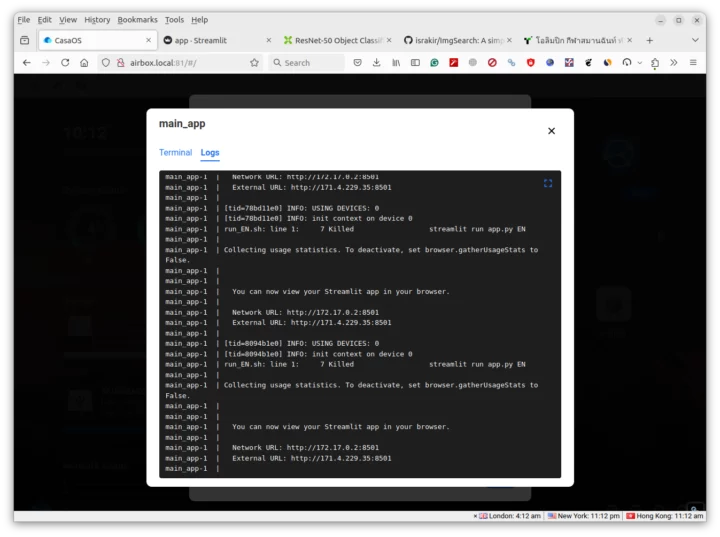

We can click on the app to start it. However, the first time I tried, it got stuck in a loop showing “Running”.

Checking the kernel log in Ubuntu 20.04, we can see a few out-of-memory errors:

[65184.192877] [6] :oom-kill:constraint=CONSTRAINT_MEMCG,nodemask=(null),cpuset=d696d17bd12939256402639cbda19d9f55995ad0fa2e65f9f39a1f98e716cc58,mems_allowed=0,oom_memcg=/docker/d696d17bd12939256402639cbda19d9f55995ad0fa2e65f9f39a1f98e716cc58,task_memcg=/docker/d696d17bd12939256402639cbda19d9f55995ad0fa2e65f9f39a1f98e716cc58,task=streamlit,pid=171794,uid=0

[65184.192913] [6] :Memory cgroup out of memory: Killed process 171794 (streamlit) total-vm:1573764kB, anon-rss:254604kB, file-rss:17656kB, shmem-rss:0kB, UID:0 pgtables:1176kB oom_score_adj:0

[65184.395643] [1] :docker0: port 1(vethe3b63bc) entered disabled state

[65184.395762] [1] :veth298a23d: renamed from eth0

[65184.472287] [1] :docker0: port 1(vethe3b63bc) entered disabled state

[65184.474744] [1] :device vethe3b63bc left promiscuous mode

[65184.474753] [1] :docker0: port 1(vethe3b63bc) entered disabled state

[65184.541368] [1] :overlayfs: NFS export requires “index=on”, falling back to nfs_export=off.


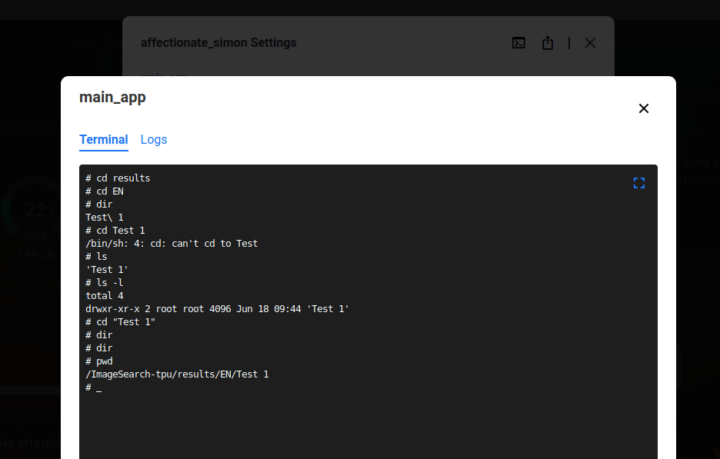

We can also check the log in CasaOS and the program is continuously being killed and restarted.

The trick is to change the memory limit in the app settings. I changed it to 2048.
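The kernel log above already hints at how much memory the app needed when it was killed. The sketch below extracts the resident set sizes from the “Killed process” line and converts them to MiB. It assumes the container's original limit was 256 (presumably MiB, as with the Llama3 container earlier), which the log itself does not state.

```python
import re

# The relevant part of the "Killed process" line from the kernel log.
OOM_LINE = (
    "Memory cgroup out of memory: Killed process 171794 (streamlit) "
    "total-vm:1573764kB, anon-rss:254604kB, file-rss:17656kB, shmem-rss:0kB"
)


def rss_mib(line):
    """Sum anon-rss, file-rss and shmem-rss from an OOM log line, in MiB."""
    fields = dict(re.findall(r"(anon-rss|file-rss|shmem-rss):(\d+)kB", line))
    return sum(int(value) for value in fields.values()) / 1024


print(f"resident memory at kill time: {rss_mib(OOM_LINE):.1f} MiB")
```

The streamlit process was right around a 256 MiB ceiling when it was killed, which is consistent with a much larger limit fixing the restart loop.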

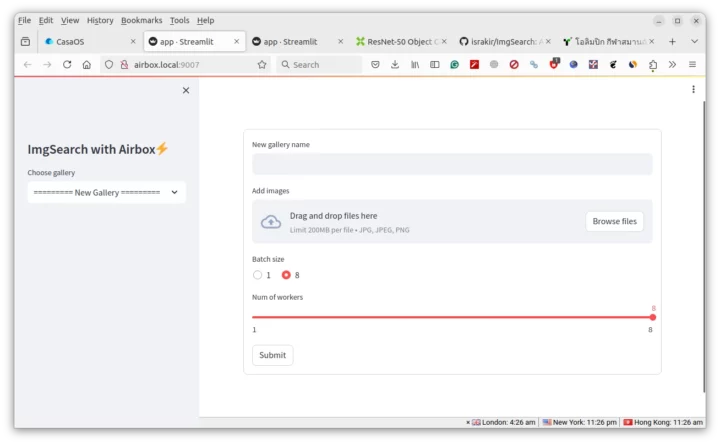

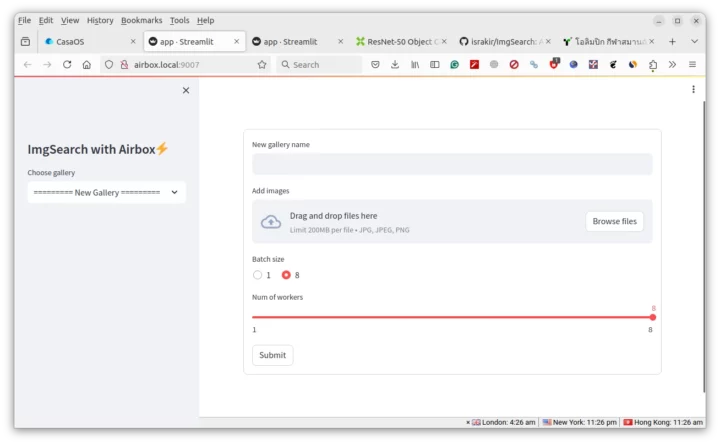

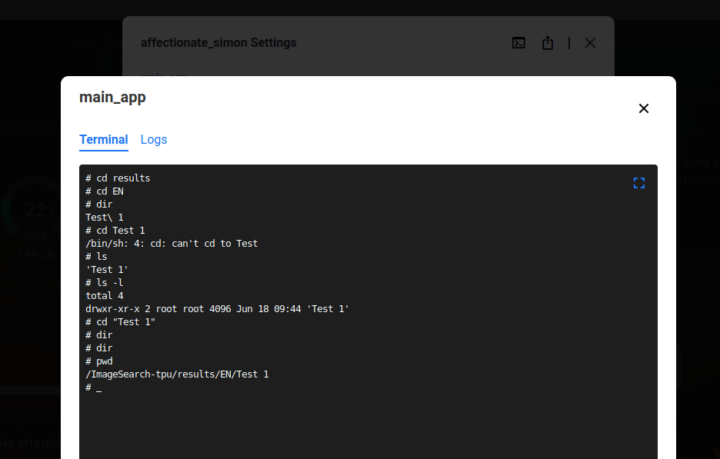

We can click on Save which will reinstall the docker container with the new parameters, and this time around it can run:

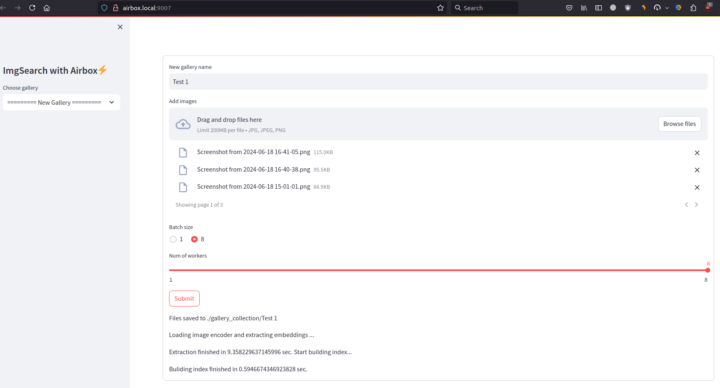

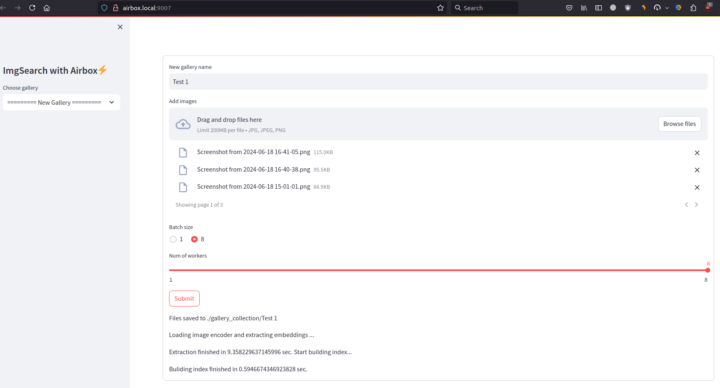

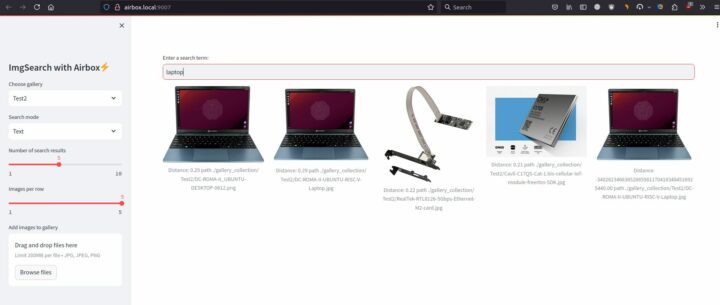

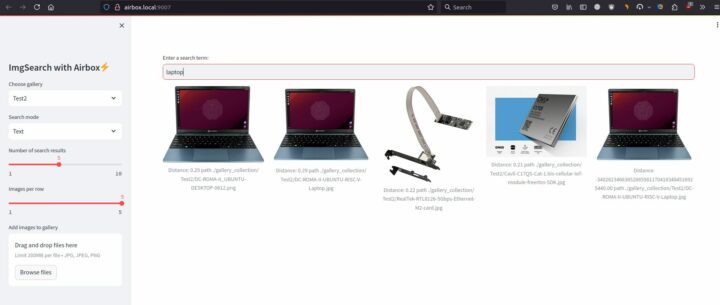

My first idea was to select a directory on the hard drive attached to the Radxa Fogwise Airbox AI Box, but it’s not implemented that way, and we need to manually upload a list of files instead. I was told the Streamlit Python framework used for this demo does not have a widget to load files from a directory. Nevertheless, I created a “Test 1” gallery with around 3 photos to get started. It could process the photos within a few seconds.

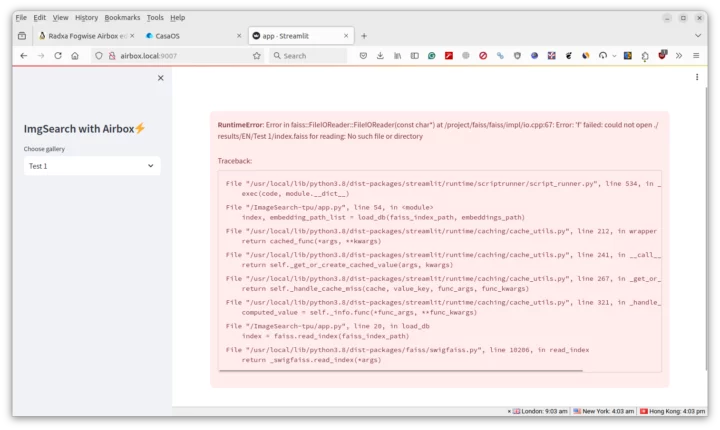

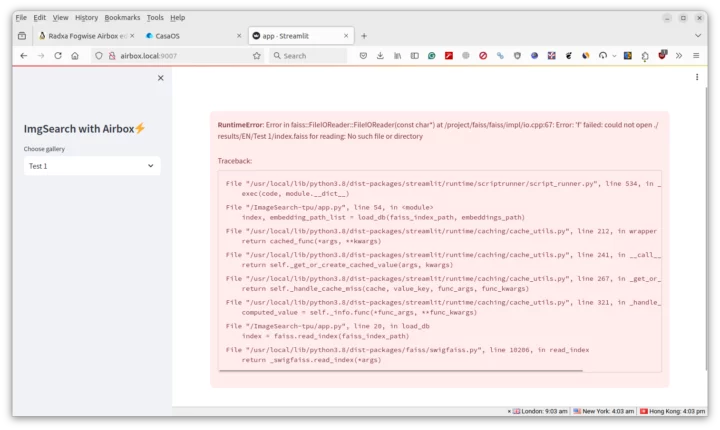

However, I was unable to run the test due to an error that reads “could not open ./results/EN/Test 1/index.faiss for reading: No such file or directory“.

If I go to the results/EN directory in the Terminal for the container, there’s a “Test 1” folder, but it’s empty.

Then I thought that maybe it did not like the space in the gallery name. So I changed it to “test2”, and it worked after uploading a few recent pictures.

Then I created a “CNXSoftware” gallery trying to add all images from 2023 (about 4,000 of those), but it was stuck and nothing seemed to happen. So I just uploaded a few hundred from the directory, and I was able to search for “block diagram” from the list of photos and got relevant results.

Checking out the Python Virtual environment on Fogwise Airbox

Time to shut down all containers running in CasaOS, and try the Python Virtual environment that should give the user more flexibility. I’ll be following the instructions for Llama3 since I have some unfinished business.

At first, I did this in the root partition (/), but I quickly ran out of space.

linaro@Airbox:/data/temp$ df -h

Filesystem Size Used Avail Use% Mounted on

overlay 5.9G 3.4G 2.3G 60% /

devtmpfs 1.5G 0 1.5G 0% /dev

tmpfs 1.5G 0 1.5G 0% /dev/shm

tmpfs 306M 35M 272M 12% /run

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 1.5G 0 1.5G 0% /sys/fs/cgroup

/dev/mmcblk0p7 43G 25G 17G 60% /data

/dev/mmcblk0p4 3.3G 3.2G 0 100% /media/root-ro

/dev/mmcblk0p5 5.9G 3.4G 2.3G 60% /media/root-rw

/dev/mmcblk0p6 2.0G 221M 1.7G 12% /opt

/dev/mmcblk0p2 3.0G 51M 2.8G 2% /recovery

/dev/mmcblk0p1 118M 68M 50M 58% /boot

/dev/sda2 241G 89G 140G 39% /data2

tmpfs 306M 0 306M 0% /run/user/1000


So I switched to the “data” partition instead (43GB in total, with 17GB free in the df output above). Adding an M.2 2230 NVMe SSD to the Fogwise Airbox might be a good idea since AI models are large, and you may not want to delete or move the files around all of the time…
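Since running out of space halfway through a download is annoying, a quick free-space check before fetching a model can help. This is a minimal sketch using the standard library; the helper name and the 10 GiB threshold in the usage comment are arbitrary examples, not figures from Radxa.

```python
import shutil


def has_free_space(path, needed_gib):
    """Return True if the filesystem holding 'path' has at least needed_gib free."""
    free_gib = shutil.disk_usage(path).free / 1024**3
    return free_gib >= needed_gib


# Example usage on the Airbox (threshold is a placeholder):
# has_free_space("/data", 10)
```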

Let’s get the Llama3 Python demo:

mkdir -p /data/temp

cd /data/temp/

git clone https://github.com/zifeng-radxa/LLM-TPU.git

cd LLM-TPU/models/Llama3/python_demo

The Llama3 8B model can be downloaded as follows:

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/llama3/tar_downloader.sh

bash tar_downloader.sh

tar -xvf llama3-8b_int4_1dev_512.tar.gz

Let’s now set up the environment and install dependencies:

cd ..

pip3 install --upgrade pip

pip3 install -r requirements.txt

cd python_demo

export LD_LIBRARY_PATH=/data/temp/LLM-TPU/support/lib_soc:$LD_LIBRARY_PATH

cp llama3-8b_int4_1dev_512/*.so .


We can now start the Llama3 demo in the terminal (Note: it will take a while before we get to the prompt, so be patient):

(.venv) linaro@Airbox:/data/temp/LLM-TPU/models/Llama3/python_demo$ python3 pipeline.py -m ./llama3-8b_int4_1dev_512/llama3-8b_int4_1dev_512.bmodel -t ../token_config/

None of PyTorch, TensorFlow >= 2.0, or Flax have been found. Models won’t be available and only tokenizers, configuration and file/data utilities can be used.

Load ../token_config/ …

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Device [ 0 ] loading ….

[BMRT][bmcpu_setup:498] INFO:cpu_lib ‘libcpuop.so’ is loaded.

[BMRT][bmcpu_setup:521] INFO:Not able to open libcustomcpuop.so

bmcpu init: skip cpu_user_defined

open usercpu.so, init user_cpu_init

[BMRT][BMProfileDeviceBase:190] INFO:gdma=0, tiu=0, mcu=0

Model[./llama3-8b_int4_1dev_512/llama3-8b_int4_1dev_512.bmodel] loading ….

[BMRT][load_bmodel:1939] INFO:Loading bmodel from [./llama3-8b_int4_1dev_512/llama3-8b_int4_1dev_512.bmodel]. Thanks for your patience…

[BMRT][load_bmodel:1704] INFO:Bmodel loaded, version 2.2+v1.7-20240419

[BMRT][load_bmodel:1706] INFO:pre net num: 0, load net num: 69

[BMRT][load_tpu_module:1802] INFO:loading firmare in bmodel

[BMRT][preload_funcs:2121] INFO: core_id=0, multi_fullnet_func_id=30

[BMRT][preload_funcs:2124] INFO: core_id=0, dynamic_fullnet_func_id=31

Done!

=================================================================

1. If you want to quit, please enter one of [q, quit, exit]

2. To create a new chat session, please enter one of [clear, new]

=================================================================

Question:

Answer: Hello! I’m Llama3, a helpful AI assistant. I’m here to assist you with any questions or tasks you may have. What’s on your mind? Do you need help with something specific or just want to chat? I’m all ears (or rather, all text)!

FTL: 0.798 s

TPS: 9.565 token/s


Let’s ask the same question as before:

Question: What do you know about CNX Software?

Answer: CNX Software is a popular online publication that focuses on embedded systems, IoT, and related technologies. They provide news, articles, tutorials, and reviews on various topics such as:

1. Embedded systems: Microcontrollers, single-board computers, and other embedded devices.

2. IoT (Internet of Things): Connected devices, sensors, and applications.

3. Robotics: Robotics platforms, programming, and automation.

4. Artificial intelligence (AI) and machine learning (ML): Applications and implementations in embedded systems.

5. Linux and open-source operating systems: Tutorials, reviews, and news on Linux and other open-source OSes.

CNX Software is known for its in-depth articles, tutorials, and reviews, which cater to a wide range of audiences, from beginners to experienced professionals. They also feature interviews with industry experts and provide resources for learning and development.

The website is a valuable resource for anyone interested in embedded systems, IoT, and related technologies. It’s a great place to stay updated on the latest developments, learn new skills, and explore new projects.

Would you like to know more about a specific topic or aspect of CNX Software?

FTL: 0.722 s

TPS: 9.566 token/s
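The two figures at the end of the output can be turned into a rough response-time estimate. The sketch below assumes FTL is the latency of the first token and TPS is the steady generation rate in tokens per second, which is my reading of the labels rather than something stated in the log. The function name is made up for illustration.

```python
def estimated_seconds(n_tokens, ftl_s, tps):
    """Rough time to produce n_tokens: first-token latency plus the rest at 'tps'."""
    return ftl_s + max(n_tokens - 1, 0) / tps


# Filling the whole 512-token window at the measured rate.
full_window = estimated_seconds(512, ftl_s=0.722, tps=9.566)
print(f"about {full_window:.1f} s for 512 tokens")
```

This ignores the prompt's share of the window, so a real answer that hits the length limit would take somewhat less time than that.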


The AI box generates 9.566 token/s. If we want to reproduce the Gradio demo from CasaOS, we can start the web_demo.py Python script instead:

(.venv) linaro@Airbox:/data/temp/LLM-TPU/models/Llama3/python_demo$ python3 web_demo.py -m ./llama3-8b_int4_1dev_512/llama3-8b_int4_1dev_512.bmodel -t ../token_config/

/data/temp/LLM-TPU/models/Llama3/python_demo/.venv/lib/python3.8/site-packages/gradio_client/documentation.py:104: UserWarning: Could not get documentation group for <class 'gradio.mix.Parallel'>: No known documentation group for module 'gradio.mix'

warnings.warn(f"Could not get documentation group for {cls}: {exc}")

/data/temp/LLM-TPU/models/Llama3/python_demo/.venv/lib/python3.8/site-packages/gradio_client/documentation.py:104: UserWarning: Could not get documentation group for <class 'gradio.mix.Series'>: No known documentation group for module 'gradio.mix'

warnings.warn(f"Could not get documentation group for {cls}: {exc}")

None of PyTorch, TensorFlow >= 2.0, or Flax have been found. Models won’t be available and only tokenizers, configuration and file/data utilities can be used.

Load ../token_config/ …

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Device [ 0 ] loading ….

[BMRT][bmcpu_setup:498] INFO:cpu_lib ‘libcpuop.so’ is loaded.

[BMRT][bmcpu_setup:521] INFO:Not able to open libcustomcpuop.so

bmcpu init: skip cpu_user_defined

open usercpu.so, init user_cpu_init

[BMRT][BMProfileDeviceBase:190] INFO:gdma=0, tiu=0, mcu=0

Model[./llama3-8b_int4_1dev_512/llama3-8b_int4_1dev_512.bmodel] loading ….

[BMRT][load_bmodel:1939] INFO:Loading bmodel from [./llama3-8b_int4_1dev_512/llama3-8b_int4_1dev_512.bmodel]. Thanks for your patience…

[BMRT][load_bmodel:1704] INFO:Bmodel loaded, version 2.2+v1.7-20240419

[BMRT][load_bmodel:1706] INFO:pre net num: 0, load net num: 69

[BMRT][load_tpu_module:1802] INFO:loading firmare in bmodel

[BMRT][preload_funcs:2121] INFO: core_id=0, multi_fullnet_func_id=30

[BMRT][preload_funcs:2124] INFO: core_id=0, dynamic_fullnet_func_id=31

Done!

Running on local URL: http://0.0.0.0:8003

To create a public link, set `share=True` in `launch()`.

IMPORTANT: You are using gradio version 3.39.0, however version 4.29.0 is available, please upgrade.

All good. I still have a 512-token limit with either method:

Overall, CNX Software is a valuable resource for anyone interested in embedded systems, IoT, and related technologies.

FTL: 0.721 s

TPS: 9.569 token/s

Question: How do you say hello in Thailand language?

Answer: In Thailand, the official language is Thai, and the way to say “hello” is ” Sawatdee” (สวัสดี). This is a common greeting used during the day, and it’s suitable for most situations.

However, there are a few other ways to greet someone in Thai, depending on the time of day and the level of formality:

* “Sawatdi krap” (สวัสดีค่ะ) – This is a more formal way to say “hello” during the day, and it’s often used when speaking to someone you don’t know well or when you want to show respect.

* “Sawatdi ka” (สวัสดีคะ) – This

FTL: 0.724 s

TPS: 9.540 token/s

… (reach the maximal length)
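To put the FTL and TPS figures in perspective, here is a minimal Python sketch that estimates how long an answer of a given length takes. It assumes FTL is the first-token latency and TPS the steady rate for the following tokens, and it treats the whole 512 limit as generated tokens, which is an upper bound since the prompt also counts. The helper names `parse_metrics` and `estimate_response_time` are mine and are not part of the LLM-TPU demo.

```python
import re
from typing import Tuple

# Metrics copied from the first answer above.
LOG = """FTL: 0.721 s
TPS: 9.569 token/s"""


def parse_metrics(log: str) -> Tuple[float, float]:
    """Return (FTL in seconds, TPS in tokens per second) found in the log text."""
    ftl = float(re.search(r"FTL:\s*([\d.]+)\s*s", log).group(1))
    tps = float(re.search(r"TPS:\s*([\d.]+)\s*token/s", log).group(1))
    return ftl, tps


def estimate_response_time(ftl: float, tps: float, tokens: int) -> float:
    """Assumed model: the first token arrives after `ftl` seconds,
    and every following token is produced at a steady `tps` rate."""
    return ftl + max(tokens - 1, 0) / tps


if __name__ == "__main__":
    ftl, tps = parse_metrics(LOG)
    for n in (128, 512):
        print(f"{n} tokens: about {estimate_response_time(ftl, tps, n):.1f} s")
```

Under these assumptions, an answer that fills the window takes a little under a minute, which matches the feel of the web demo.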


Attempting to increase Llama3 answer limit

Let’s see if we can increase the limit to 1024 and to what extent it impacts performance. I first started following the instructions on my Ubuntu 22.04 laptop with an Intel Core i5-13500H, 16GB RAM, and about 26GB of free space, until I reached the note at the end of the instructions:

Generating the bmodel takes about 2 hours or more. It is recommended to have 64G memory and over 200G of disk space, otherwise OOM or no space left errors are likely.

That was not going to work on my laptop. If only I had a machine with 64GB RAM. Oh! Wait… I do! So I installed Ubuntu 24.04 on the Cincoze DS-1402 embedded computer with an Intel Core i9-12900E processor, 64GB DDR5, and a 300GB Ubuntu partition. That should do. All instructions below are run on the x86 host unless otherwise stated.
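Before spending hours on a build, the recommended resources can be checked up front. The following is a small sketch using only the Python standard library; the thresholds come from the note quoted above, while the function names and the 60 GiB tolerance are my own assumptions.

```python
import os
import shutil
from typing import List

GIB = 1024 ** 3


def total_ram_gib() -> float:
    """Total physical memory in GiB, read through sysconf (Linux and most Unix systems)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / GIB


def free_disk_gib(path: str = ".") -> float:
    """Free space in GiB on the filesystem that holds `path`."""
    return shutil.disk_usage(path).free / GIB


def check_host(ram_gib: float, disk_gib: float,
               min_ram_gib: float = 60.0, min_disk_gib: float = 200.0) -> List[str]:
    """Return a list of human-readable problems; an empty list means the host looks fine.

    The 60 GiB default is an assumption: a machine sold with 64GB of RAM
    reports slightly less than 64 GiB to the operating system.
    """
    problems = []
    if ram_gib < min_ram_gib:
        problems.append(f"RAM: {ram_gib:.1f} GiB installed, {min_ram_gib:.0f} GiB recommended")
    if disk_gib < min_disk_gib:
        problems.append(f"Disk: {disk_gib:.1f} GiB free, {min_disk_gib:.0f} GiB recommended")
    return problems


if __name__ == "__main__":
    issues = check_host(total_ram_gib(), free_disk_gib("."))
    print("\n".join(issues) if issues else "Host meets the recommended resources")
```

Run from the directory where the model will be compiled, so the disk check covers the right partition.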

We’ll first need to install the compiler:

sudo apt install git build-essential cmake python3-virtualenv

git clone https://github.com/sophgo/tpu-mlir

sudo docker pull sophgo/tpuc_dev:latest

sudo docker run --privileged --name myname -v $PWD:/workspace -it sophgo/tpuc_dev:latest

root@e7ce11913e8d:/workspace# cd tpu-mlir/

root@e7ce11913e8d:/workspace/tpu-mlir# source ./envsetup.sh

root@e7ce11913e8d:/workspace/tpu-mlir# ./build.sh

exit

We’ll now need to ask permission to download the Llama3 model by filling out the access form on the model’s Hugging Face page. Note that access is granted manually: I asked on Saturday, and I could download it on Sunday. We need to install git-lfs (Large File Storage) first:

sudo apt install curl

curl -s https://packagecloud.io/install/repositories/github/git-lfs/script.deb.sh | sudo bash

sudo apt install git-lfs

git lfs install

Then we have to generate a token to download the model and make sure to select “Read access to contents of all public gated repos you can access”. Let’s run the following command:

git clone https://huggingface.co/meta-llama/Meta-Llama-Guard-2-8B

and enter your username and token when prompted to retrieve the model. I did that in the home directory of the current user.

Time to create a virtual environment in the LLM-TPU/models/Llama3 directory:

cd LLM-TPU/models/Llama3

python3 -m virtualenv .venv

source .venv/bin/activate

pip3 install --upgrade pip

pip3 install -r requirements.txt

Now copy modeling_llama.py to the transformers library in the virtual environment, install a few extra dependencies (apparently missing from the requirements.txt file), and start the compilation using the downloaded Llama3 model and a sequence length of 1024 tokens:

cp ./compile/files/Meta-Llama-3-8B-Instruct/modeling_llama.py .venv/lib/python3.12/site-packages/transformers/models/llama/

pip3 install torch onnx

cd compile

(.venv) jaufranc@DS-1402-CNX:~/LLM-TPU/models/Llama3/compile$ python export_onnx.py --model_path ~/Meta-Llama-Guard-2-8B/ --seq_length 1024

Sadly, it didn’t quite work as expected:

$ python export_onnx.py --model_path ~/Meta-Llama-Guard-2-8B/ --seq_length 1024

Loading checkpoint shards: 100%|██████████████████| 4/4 [00:05<00:00, 1.36s/it]

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Layers: 32

Hidden size: 4096

Convert block & block_cache

100%|███████████████████████████████████████████| 32/32 [06:56<00:00, 13.03s/it]

Convert embedding

Traceback (most recent call last):

File "/home/jaufranc/LLM-TPU/models/Llama3/compile/export_onnx.py", line 256, in <module>

convert_embedding()

File "/home/jaufranc/LLM-TPU/models/Llama3/compile/export_onnx.py", line 190, in convert_embedding

torch.onnx.export(model, (input_ids),

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/utils.py", line 516, in export

_export(

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/utils.py", line 1612, in _export

graph, params_dict, torch_out = _model_to_graph(

^^^^^^^^^^^^^^^^

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/utils.py", line 1138, in _model_to_graph

graph = _optimize_graph(

^^^^^^^^^^^^^^^^

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/utils.py", line 677, in _optimize_graph

graph = _C._jit_pass_onnx(graph, operator_export_type)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/utils.py", line 1956, in _run_symbolic_function

return symbolic_fn(graph_context, *inputs, **attrs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/symbolic_opset9.py", line 7107, in prim_constant

return g.op("Constant", value_t=symbolic_helper._node_get(node, "value"))

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/_internal/jit_utils.py", line 87, in op

return _add_op(self, opname, *raw_args, outputs=outputs, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/_internal/jit_utils.py", line 246, in _add_op

node = _create_node(

^^^^^^^^^^^^^

File "/home/jaufranc/LLM-TPU/models/Llama3/.venv/lib/python3.12/site-packages/torch/onnx/_internal/jit_utils.py", line 307, in _create_node

_C._jit_pass_onnx_node_shape_type_inference(node, params_dict, opset_version)

RuntimeError: The serialized model is larger than the 2GiB limit imposed by the protobuf library. Therefore the output file must be a file path, so that the ONNX external data can be written to the same directory. Please specify the output file name.

It’s a known limitation of protobuf as documented in ONNX, but I’m unable to understand what I need to do to fix it, and despite various attempts, I failed to find a solution within the time I reserved for the review. Exporting the model with a sequence length of 512 ends the same way. I’ll update this post once I get an answer from Radxa.

Radxa Model Zoo – Resnet50 and YOLOv8

I decided to do one last test by following the instructions to install the Radxa Model Zoo and run the 8-bit integer (INT8) Resnet50 model (INT16 and FP32 are also available). We are back in the Fogwise Airbox terminal:

git clone https://github.com/zifeng-radxa/Radxa-Model-Zoo.git

cd Radxa-Model-Zoo/sample/ResNet

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo/resnet50_int8_1b.bmodel

mkdir images && cd images

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo/grace_hopper.bmp

Grace Hopper


Again we need to set up a Python virtual environment and install dependencies:

cd ..

python3 -m virtualenv .venv

source .venv/bin/activate

pip3 install --upgrade pip

pip3 install numpy

pip3 install https://github.com/radxa-edge/TPU-Edge-AI/releases/download/v0.1.0/sophon_arm-3.7.0-py3-none-any.whl

Two samples are provided: one using OpenCV and the SAIL API, and the other using SAIL and BMCV processing. Let’s run the OpenCV demo:

export LD_LIBRARY_PATH=/opt/sophon/libsophon-current/lib:$LD_LIBRARY_PATH

export PYTHONPATH=$PYTHONPATH:/opt/sophon/sophon-opencv-latest/opencv-python/

python3 python/resnet_opencv.py --input ./images --bmodel ./resnet50_int8_1b.bmodel

Output:

[BMRT][bmcpu_setup:406] INFO:cpu_lib 'libcpuop.so' is loaded.

bmcpu init: skip cpu_user_defined

open usercpu.so, init user_cpu_init

[BMRT][load_bmodel:1084] INFO:Loading bmodel from [./resnet50_int8_1b.bmodel]. Thanks for your patience...

[BMRT][load_bmodel:1023] INFO:pre net num: 0, load net num: 1

INFO:root:filename: ./images/grace_hopper.bmp, res: (457, 0.12794505059719086)

INFO:root:result saved in ./results/resnet50_int8_1b.bmodel_images_opencv_python_result.json

INFO:root:------------------ Inference Time Info ----------------------

INFO:root:decode_time(ms): 32.21

INFO:root:preprocess_time(ms): 38.65

INFO:root:inference_time(ms): 4.23

INFO:root:postprocess_time(ms): 0.35

The inference time was 4.23ms (236 FPS), and the total time was 75.44ms. Results in the JSON file:

(.venv) linaro@Airbox:~/Radxa-Model-Zoo/sample/ResNet$ cat ./results/resnet50_int8_1b.bmodel_images_opencv_python_result.json

[

{

"filename": "./images/grace_hopper.bmp",

"prediction": 457,

"score": 0.12794505059719086

}

]
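The `prediction` field is a class index rather than a name. Here is a minimal sketch for turning it into a label, assuming you have a text file with one class name per line in the same order the model was trained with. The file name `imagenet_classes.txt` and the `describe` helper are hypothetical and not part of the Radxa Model Zoo; only the JSON keys come from the output above.

```python
import json
from typing import List

# Output of resnet_opencv.py as shown above.
RESULT_JSON = """
[
  {
    "filename": "./images/grace_hopper.bmp",
    "prediction": 457,
    "score": 0.12794505059719086
  }
]
"""


def describe(predictions: List[dict], labels: List[str]) -> List[str]:
    """Turn each prediction into 'file: label (score%)'; fall back to the raw index."""
    lines = []
    for item in predictions:
        idx = item["prediction"]
        name = labels[idx] if 0 <= idx < len(labels) else f"class {idx}"
        lines.append(f"{item['filename']}: {name} ({item['score']:.1%})")
    return lines


if __name__ == "__main__":
    predictions = json.loads(RESULT_JSON)
    # Hypothetical label file: one class name per line, in the model's class order.
    try:
        with open("imagenet_classes.txt", encoding="utf-8") as f:
            labels = [line.strip() for line in f]
    except FileNotFoundError:
        labels = []  # no label file: the class index is printed instead
    print("\n".join(describe(predictions, labels)))
```

Without a label file the script still runs and simply prints the class index with the score as a percentage.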

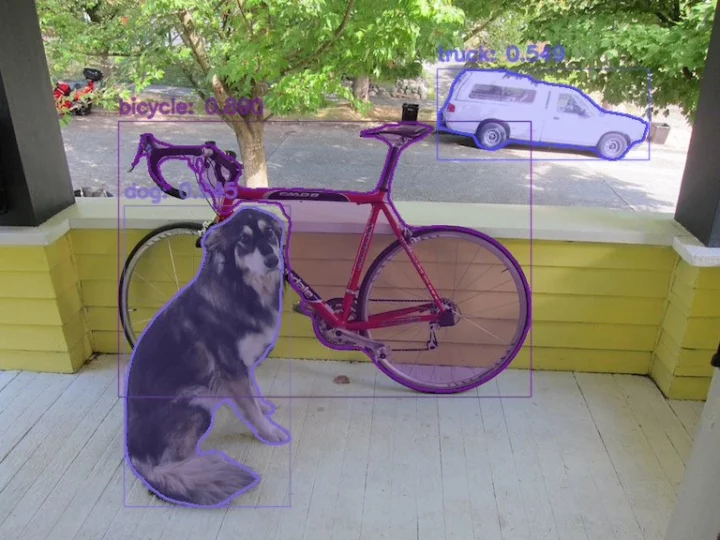

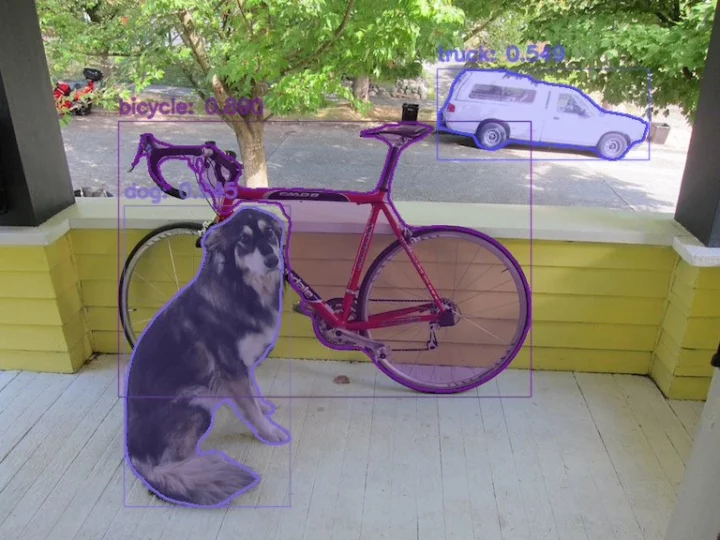

It seems to work, but the output is not exactly human-readable… So let’s try YOLOv8 object segmentation instead, using the same Python virtual environment:

cd ../YOLOv8_seg/

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo/yolov8s_int8_1b.bmodel

mkdir images && cd images

wget https://github.com/radxa-edge/TPU-Edge-AI/releases/download/model-zoo/dog_bike_car.jpg

cd ..

pip3 install pycocotools

There are also two samples, but let’s keep using OpenCV:

python3 python/yolov8_opencv.py --input ./images --bmodel ./yolov8s_int8_1b.bmodel

Output:

[BMRT][bmcpu_setup:406] INFO:cpu_lib 'libcpuop.so' is loaded.

bmcpu init: skip cpu_user_defined

open usercpu.so, init user_cpu_init

[BMRT][load_bmodel:1084] INFO:Loading bmodel from [./yolov8s_int8_1b.bmodel]. Thanks for your patience...

[BMRT][load_bmodel:1023] INFO:pre net num: 0, load net num: 1

INFO:root:load ./yolov8s_int8_1b.bmodel success!

INFO:root:1, img_file: ./images/dog_bike_car.jpg

sampleFactor=6, cinfo->num_components=3 (1x2, 1x1, 1x1)

Open /dev/jpu successfully, device index = 0, jpu fd = 23, vpp fd = 24

INFO:root:result saved in ./results/yolov8s_int8_1b.bmodel_images_opencv_python_result.json

INFO:root:------------------ Predict Time Info ----------------------

INFO:root:decode_time(ms): 29.25

INFO:root:preprocess_time(ms): 33.29

INFO:root:inference_time(ms): 16.18

INFO:root:postprocess_time(ms): 164.70

all done.

The inference time was 16.18ms (61.8 FPS), and the total time was 243.42ms.
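The FPS and total time figures are simple arithmetic on the four timings each demo prints: the frame rate is 1000 divided by the inference time in milliseconds, and the total is the sum of all stages. Here is a short sketch that parses the log lines and does the sums; the helper names are mine, and the log text is copied from the two runs above.

```python
import re
from typing import Dict, Tuple

RESNET_LOG = """INFO:root:decode_time(ms): 32.21
INFO:root:preprocess_time(ms): 38.65
INFO:root:inference_time(ms): 4.23
INFO:root:postprocess_time(ms): 0.35"""

YOLOV8_LOG = """INFO:root:decode_time(ms): 29.25
INFO:root:preprocess_time(ms): 33.29
INFO:root:inference_time(ms): 16.18
INFO:root:postprocess_time(ms): 164.70"""


def parse_times(log: str) -> Dict[str, float]:
    """Map each stage name (decode, preprocess, ...) to its time in milliseconds."""
    return {name: float(ms) for name, ms in re.findall(r"(\w+)_time\(ms\):\s*([\d.]+)", log)}


def summarize(times: Dict[str, float]) -> Tuple[float, float]:
    """Return (total time in ms, inference-only frames per second)."""
    total_ms = sum(times.values())
    fps = 1000.0 / times["inference"]
    return round(total_ms, 2), round(fps, 1)


if __name__ == "__main__":
    for label, log in (("ResNet50", RESNET_LOG), ("YOLOv8", YOLOV8_LOG)):
        total_ms, fps = summarize(parse_times(log))
        print(f"{label}: total {total_ms} ms, inference-only {fps} FPS")
```

Note that the FPS figure only reflects the time spent on the TPU; the end-to-end rate is bounded by the total, which is dominated by decoding, preprocessing, and postprocessing.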

The JSON file is still not human-readable, but the demo also generates an image (or more if there are more input images) with descriptions and outlines for each object.

(.venv) linaro@Airbox:~/Radxa-Model-Zoo/sample/YOLOv8_seg$ ls -l results/images/

total 108

-rw-rw-r-- 1 linaro linaro 110168 Jun 23 17:16 res_bmcv_dog_bike_car.jpg

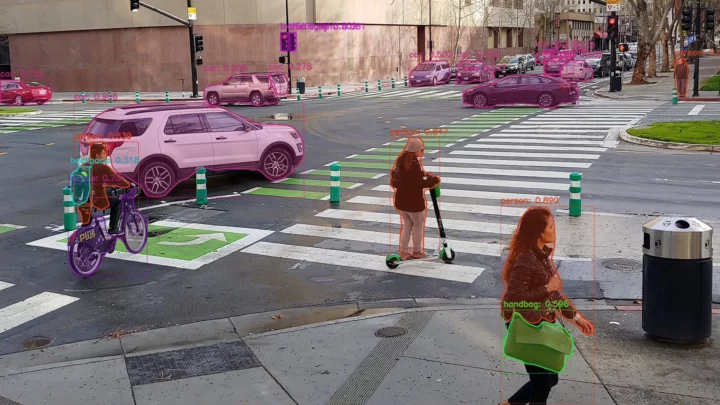

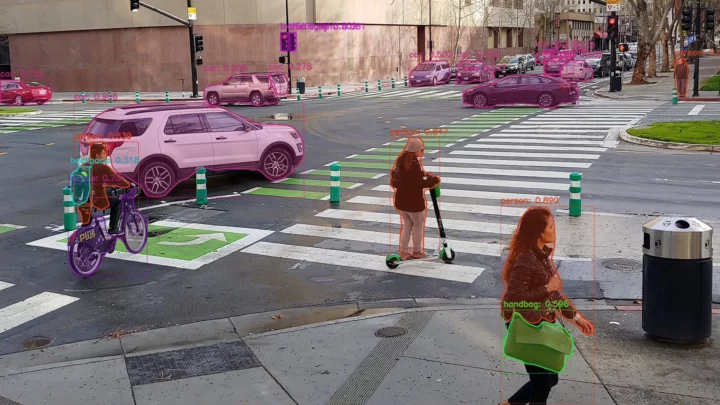

I added another larger image (1920×1080) with more objects to the test:

(.venv) linaro@Airbox:~/Radxa-Model-Zoo/sample/YOLOv8_seg$ python3 python/yolov8_opencv.py --input ./images --bmodel ./yolov8s_int8_1b.bmodel

[BMRT][bmcpu_setup:406] INFO:cpu_lib 'libcpuop.so' is loaded.

bmcpu init: skip cpu_user_defined

open usercpu.so, init user_cpu_init

[BMRT][load_bmodel:1084] INFO:Loading bmodel from [./yolov8s_int8_1b.bmodel]. Thanks for your patience...

[BMRT][load_bmodel:1023] INFO:pre net num: 0, load net num: 1

INFO:root:load ./yolov8s_int8_1b.bmodel success!

INFO:root:1, img_file: ./images/dog_bike_car.jpg

sampleFactor=6, cinfo->num_components=3 (1x2, 1x1, 1x1)

Open /dev/jpu successfully, device index = 0, jpu fd = 23, vpp fd = 24

INFO:root:2, img_file: ./images/people-street.jpg

sampleFactor=10, cinfo->num_components=3 (2x2, 1x1, 1x1)

INFO:root:result saved in ./results/yolov8s_int8_1b.bmodel_images_opencv_python_result.json

INFO:root:------------------ Predict Time Info ----------------------

INFO:root:decode_time(ms): 54.78

INFO:root:preprocess_time(ms): 28.08

INFO:root:inference_time(ms): 14.53

INFO:root:postprocess_time(ms): 3634.29

all done.

Inference is still fast, but the postprocessing time jumps to 3634.29ms. The resulting image is pretty good.

Let’s try the BMCV sample to see if the speed is better:

(.venv) linaro@Airbox:~/Radxa-Model-Zoo/sample/YOLOv8_seg$ python3 python/yolov8_bmcv.py --input ./images --bmodel ./yolov8s_int8_1b.bmodel

[BMRT][bmcpu_setup:406] INFO:cpu_lib 'libcpuop.so' is loaded.

bmcpu init: skip cpu_user_defined

open usercpu.so, init user_cpu_init

[BMRT][load_bmodel:1084] INFO:Loading bmodel from [./yolov8s_int8_1b.bmodel]. Thanks for your patience...

[BMRT][load_bmodel:1023] INFO:pre net num: 0, load net num: 1

INFO:root:1, img_file: ./images/dog_bike_car.jpg

sampleFactor=6, cinfo->num_components=3 (1x2, 1x1, 1x1)

Open /dev/jpu successfully, device index = 0, jpu fd = 42, vpp fd = 43

INFO:root:2, img_file: ./images/people-street.jpg

sampleFactor=10, cinfo->num_components=3 (2x2, 1x1, 1x1)

INFO:root:result saved in ./results/yolov8s_int8_1b.bmodel_images_bmcv_python_result.json

INFO:root:------------------ Predict Time Info ----------------------

INFO:root:decode_time(ms): 57.77

INFO:root:preprocess_time(ms): 4.90

INFO:root:inference_time(ms): 11.61

INFO:root:postprocess_time(ms): 3439.02

all done.

Preprocessing with BMCV is much faster than with OpenCV (4.90ms vs 28.08ms). That sample decodes with SAIL, but decoding is about as fast as with OpenCV. In both cases SAIL handles inference, so the difference in inference time (11.61ms vs 14.53ms) is probably just variability between the tests.
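To quantify the comparison, here is a small sketch that computes the per-stage speedup of the BMCV sample over the OpenCV one, and the share of the total time spent in postprocessing. The numbers are copied from the two YOLOv8 runs with both images; the function names are mine, and I am assuming the reported times are directly comparable between the two samples.

```python
from typing import Dict

# Stage timings in milliseconds from the two runs above.
OPENCV = {"decode": 54.78, "preprocess": 28.08, "inference": 14.53, "postprocess": 3634.29}
BMCV = {"decode": 57.77, "preprocess": 4.90, "inference": 11.61, "postprocess": 3439.02}


def speedups(baseline: Dict[str, float], candidate: Dict[str, float]) -> Dict[str, float]:
    """Ratio baseline/candidate per stage; values above 1 mean the candidate is faster."""
    return {stage: baseline[stage] / candidate[stage] for stage in baseline}


def share(times: Dict[str, float], stage: str) -> float:
    """Fraction of the total time spent in one stage."""
    return times[stage] / sum(times.values())


if __name__ == "__main__":
    for stage, ratio in speedups(OPENCV, BMCV).items():
        print(f"{stage}: BMCV is {ratio:.2f}x the speed of OpenCV")
    print(f"postprocess share (OpenCV sample): {share(OPENCV, 'postprocess'):.1%}")
    print(f"postprocess share (BMCV sample): {share(BMCV, 'postprocess'):.1%}")
```

The preprocessing gain is real, but since postprocessing accounts for nearly all of the total time in both samples, the end-to-end improvement is small.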

Radxa Fogwise Airbox’s power consumption and fan noise

When I first tested the Fogwise Airbox with a 100W GaN power supply, I noted idle power consumption was about 30 Watts. Since then, I’ve received a power adapter from Radxa, and the idle power consumption is around 28 Watts. That’s still high. It goes up to 39W while Llama3 provides an answer, and jumps to about 49W when generating an image with Stable Diffusion. The power consumption varies depending on the image generated.
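As a rough back-of-the-envelope figure, the power readings can be combined with the Llama3 token rate measured earlier to estimate the energy spent per generated token. This sketch assumes the 39W reading and the 9.569 token/s rate apply to the same workload, which was not measured simultaneously, so treat the result as an order of magnitude only.

```python
IDLE_W = 28.0     # idle power with the Radxa adapter
LLAMA3_W = 39.0   # power while Llama3 generates an answer
TPS = 9.569       # tokens per second reported by the Llama3 demo


def joules_per_token(power_w: float, tokens_per_s: float) -> float:
    """Energy per token in joules: watts are joules per second, so divide by tokens per second."""
    return power_w / tokens_per_s


if __name__ == "__main__":
    gross = joules_per_token(LLAMA3_W, TPS)
    above_idle = joules_per_token(LLAMA3_W - IDLE_W, TPS)
    print(f"Whole system: {gross:.2f} J/token")
    print(f"Above idle:   {above_idle:.2f} J/token")
```

The gap between the two numbers shows how much of the energy budget goes to keeping the box powered on rather than to the inference itself.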

The fan runs all the time and is quite noisy. For a device close to the user such as a mini PC that would be an issue, but considering it’s a headless system, it can always be placed in a room with Ethernet connectivity and adequate ventilation far from users.

Conclusion

Radxa Fogwise Airbox is a great little headless box for offline/onsite AI processing that works with generative AI such as LLMs and text-to-image generators, as well as computer vision models like Resnet50 or YOLOv8. It’s very easy to get started thanks to the Ubuntu + CasaOS image preloaded with Stable Diffusion and Llama3 containers making a plug-and-play AI box. There’s also a Python SDK to customize models or create your own.

The documentation is pretty good, although I often had to run extra commands to succeed, and in one case (recompiling Llama3), it did not work for me despite my best efforts. I still think that overall Radxa Fogwise Airbox is an interesting device for people wanting to experiment with on-device generative AI and computer vision, or even integrate it into a project. Power consumption may be an issue, but the 32 TOPS AI box should be compared to similar solutions such as NVIDIA Jetson modules.

I’d like to thank Radxa for sending the Fogwise Airbox AI box for review. The model reviewed here, with 16GB RAM and 64GB eMMC flash but no SSD and no WiFi, can be purchased on Aliexpress for $331 plus shipping.

Jean-Luc started CNX Software in 2010 as a part-time endeavor, before quitting his job as a software engineering manager, and starting to write daily news, and reviews full time later in 2011.
