In this image, the person on the left (Scarlett Johansson) is real, while the person on the right is AI-generated. Close-ups of each person's eyes are shown beneath their faces. The reflections in the eyeballs are consistent for the real person, but incorrect (from a physics point of view) for the fake person. Adejumoke Owolabi (CC BY 4.0)

HULL, England — In a world where AI-generated images are becoming increasingly common, telling real from fake is more crucial than ever. Now, a new study suggests that spotting deepfakes may be as simple as looking someone right in the eye.

A study presented at the Royal Astronomical Society’s National Astronomy Meeting suggests that we might be able to unmask AI-generated fakes by closely examining human eyes. Surprisingly, the technique borrows from methods astronomers use to study galaxies.

The eyes are windows to… authenticity?

At the heart of this research is a simple yet powerful observation: the reflections in our eyes. Because both eyes see the same light sources, the reflections in a photo of a real person should match from one eye to the other. In AI-generated images, however, they often don't.

“The reflections in the eyeballs are consistent for the real person, but incorrect (from a physics point of view) for the fake person,” explains Kevin Pimbblet, professor of astrophysics and director of the Centre of Excellence for Data Science, Artificial Intelligence, and Modeling at the University of Hull, in a media release.

This discrepancy occurs because AI, despite its impressive capabilities, doesn’t always get the physics of light reflection quite right when creating fake images.

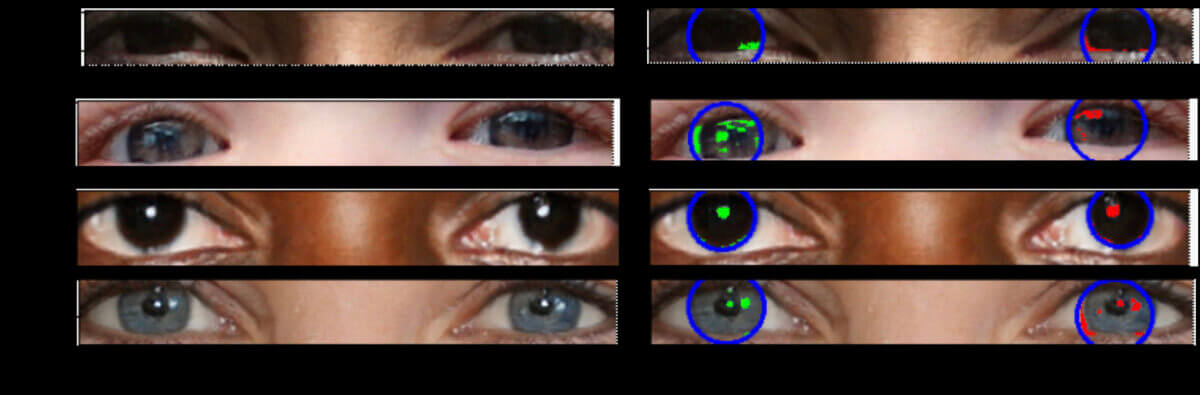

A series of deepfake eyes showing inconsistent reflections in each eye. Adejumoke Owolabi (CC BY 4.0)

Celestial connection

So, how did astronomers end up studying fake photos? It turns out that the tools used to analyze galaxies can be repurposed for spotting deepfakes.

The research team, led by University of Hull MSc student Adejumoke Owolabi, analyzed the light reflections in the eyes of people in both real and AI-generated images. They then applied techniques typically used in astronomy to measure these reflections and check whether the left and right eyes were consistent with each other.

“To measure the shapes of galaxies, we analyze whether they’re centrally compact, whether they’re symmetric, and how smooth they are. We analyze the light distribution,” Pimbblet explains. “We detect the reflections in an automated way and run their morphological features through the CAS [concentration, asymmetry, smoothness] and Gini indices to compare similarity between left and right eyeballs.”
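The article does not describe how the reflections are detected automatically. As a purely illustrative sketch, assuming a grayscale crop of a single eye stored as a list of rows of pixel brightness values, one crude approach is to keep only the pixels brighter than a fixed fraction of the brightest pixel, since specular highlights are usually the brightest part of an eye. The function name `extract_reflection` and the default `fraction` of 0.8 are hypothetical choices, not the team's pipeline.

```python
from typing import List

Image = List[List[float]]


def extract_reflection(eye: Image, fraction: float = 0.8) -> Image:
    """Hypothetical helper: keep only the brightest pixels of an eye crop.

    Pixels dimmer than `fraction` times the brightest pixel are set to 0.0,
    on the assumption that specular highlights are the brightest features.
    This is an illustrative stand-in, not the study's detection method.
    """
    peak = max(max(row) for row in eye)
    cutoff = fraction * peak
    return [[p if p >= cutoff else 0.0 for p in row] for row in eye]


if __name__ == "__main__":
    eye = [
        [0.1, 0.2, 0.1],
        [0.2, 1.0, 0.9],
        [0.1, 0.3, 0.2],
    ]
    for row in extract_reflection(eye):
        print(row)
    # [0.0, 0.0, 0.0]
    # [0.0, 1.0, 0.9]
    # [0.0, 0.0, 0.0]
```

A fixed brightness cut-off is the simplest possible choice; real photos vary in exposure and lighting, so a practical detector would need something more adaptive.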

The Gini index: From economics to deepfake detection

One of the key tools in this study is the Gini index. Originally developed to measure income inequality in economics, it has since found a new purpose in astronomy, where it is used to analyze the distribution of light in galaxy images. Now, it's being used to spot fake eyes.

In astronomy, the Gini index measures how evenly light is distributed among the pixels of a galaxy image. A value of 0 means the light is spread perfectly evenly across all pixels, while a value of 1 means all the light is concentrated in a single pixel.

When applied to eye reflections in images, this index can help reveal inconsistencies that might indicate an AI-generated fake.
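To make the 0-to-1 scale concrete, here is a minimal sketch of one form of the Gini index used for galaxy images: sort the n absolute pixel values x_1 ≤ x_2 ≤ … ≤ x_n and compute G = Σ (2i − n − 1) x_i / (x̄ · n · (n − 1)), where x̄ is their mean. The left/right comparison in `reflections_consistent` and its `tolerance` of 0.1 are hypothetical illustrations of "comparing similarity between left and right eyeballs", not values or code from the study.

```python
from typing import Sequence


def gini(values: Sequence[float]) -> float:
    """Gini index of pixel brightness values (absolute values are used).

    G = sum((2i - n - 1) * x_i) / (mean * n * (n - 1)), with x sorted
    ascending and i counted from 1. Returns 0.0 when light is spread
    evenly over all pixels and 1.0 when it sits in a single pixel.
    """
    xs = sorted(abs(v) for v in values)
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two pixels")
    mean = sum(xs) / n
    if mean == 0:
        raise ValueError("no light in the region")
    weighted = sum((2 * i - n - 1) * x for i, x in enumerate(xs, start=1))
    return weighted / (mean * n * (n - 1))


def reflections_consistent(left: Sequence[float], right: Sequence[float],
                           tolerance: float = 0.1) -> bool:
    """Hypothetical check: do both eyes' reflections have similar Gini values?

    `tolerance` is an illustrative cut-off, not a value from the study.
    """
    return abs(gini(left) - gini(right)) <= tolerance


if __name__ == "__main__":
    print(gini([1.0, 1.0, 1.0, 1.0]))  # 0.0 (perfectly even)
    print(gini([0.0, 0.0, 0.0, 5.0]))  # 1.0 (all light in one pixel)
    # A compact highlight in one eye vs. a smeared one in the other.
    print(reflections_consistent([0, 0, 0, 5], [1, 1, 2, 1]))  # False
```

In this toy comparison the compact highlight scores 1.0 and the smeared one 0.2, so the pair is flagged. Any fixed tolerance trades false positives against false negatives.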

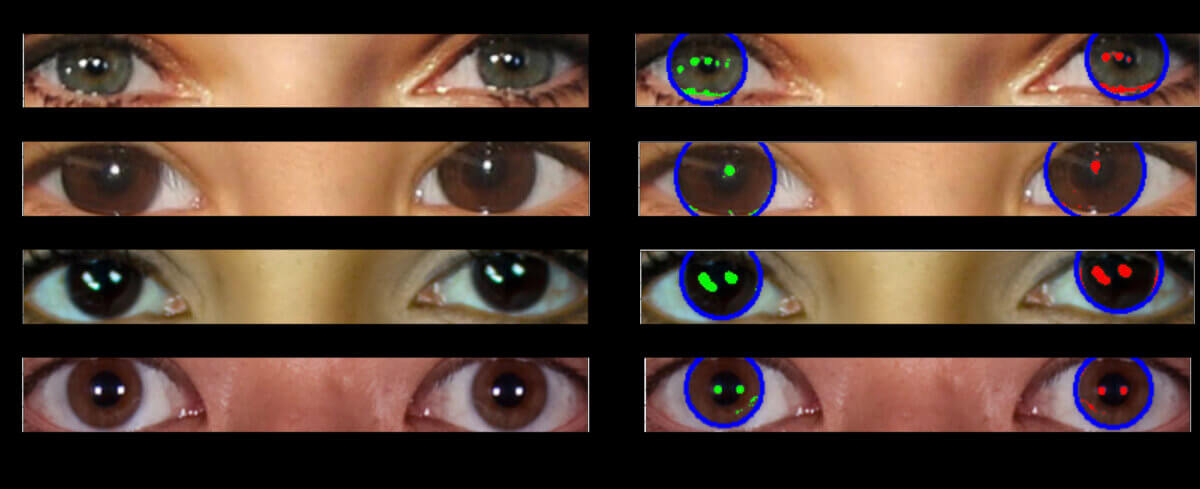

A series of real eyes showing largely consistent reflections in both eyes. Adejumoke Owolabi (CC BY 4.0)

The team also experimented with the CAS (concentration, asymmetry, smoothness) system, another tool borrowed from astronomy. Typically used to classify galaxy shapes, it did not prove as effective as the Gini index at spotting fake eyes. This underscores the complexity of the challenge and the need for multiple approaches.
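CAS boils an image down to three numbers. As a hedged illustration of just one of them, here is a simplified asymmetry term: rotate the image by 180 degrees and measure how much it differs from the original, relative to its total brightness. This sketch assumes rotation about the array centre and skips the centre optimisation and background correction that full CAS pipelines apply; the function names are hypothetical, and the article does not say how the team computed CAS.

```python
from typing import List

Image = List[List[float]]


def rotate_180(img: Image) -> Image:
    """Rotate a 2-D image by 180 degrees about its array centre."""
    return [list(reversed(row)) for row in reversed(img)]


def asymmetry(img: Image) -> float:
    """Simplified CAS asymmetry: sum|I - I_180| / sum|I|.

    Assumes rotation about the array centre and no background correction.
    Returns 0.0 for an image that looks identical after a half-turn.
    """
    rotated = rotate_180(img)
    diff = sum(abs(a - b)
               for row, rot_row in zip(img, rotated)
               for a, b in zip(row, rot_row))
    total = sum(abs(a) for row in img for a in row)
    if total == 0:
        raise ValueError("empty image")
    return diff / total


if __name__ == "__main__":
    round_highlight = [[0, 1, 0], [1, 4, 1], [0, 1, 0]]
    lopsided_highlight = [[0, 0, 0], [0, 4, 3], [0, 0, 0]]
    print(asymmetry(round_highlight))     # 0.0
    print(asymmetry(lopsided_highlight))  # 6/7, roughly 0.857
```

A single asymmetry value describes the shape of one reflection; to compare eyes, one would compute it for each eye's reflection and look at the difference, as with the Gini index.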

Not a silver bullet, but a step forward

While this method shows promise, Prof. Pimbblet cautions that it’s not foolproof.

“It’s important to note that this is not a silver bullet for detecting fake images,” Pimbblet says. “There are false positives and false negatives; it’s not going to get everything. But this method provides us with a basis, a plan of attack, in the arms race to detect deepfakes.”

As AI technology continues to advance, so too must our methods for detecting its creations. This research represents an innovative step in that ongoing battle, bringing together the unlikely worlds of astronomy and image forensics.

Next frontier in deepfake detection

As we move forward, this eye-reflection technique could become part of a larger toolkit for identifying AI-generated images. Combined with other methods, it could help social media platforms, news organizations, and even everyday users better navigate the increasingly blurry line between real and fake in our digital world.

So, the next time you’re unsure about an image you see online, remember: the truth might be in the eyes. Just don’t forget to blink!