In context: Creating differentiation in a saturated smartphone market filled with countless “me-too” products is no easy task. To their credit, Google managed to pull that off at this week’s Made by Google event by focusing on AI software capabilities in their latest Pixel 9 lineup.

The deep integration of Google’s Gemini AI models gave the company a way to demonstrate a variety of unique experiences that are now available on the full range of new Pixel 9 phones.

From Gemini Live’s surprisingly fluid conversational assistant, to the transcription and summarization of voice calls in Call Notes, to the image creation capabilities of Pixel Studio, Google highlighted several practical examples of AI-powered features that regular people are actually going to want to use.

On the hardware side of things, Google managed to bring some differentiation as well – at least for their latest foldable. The Pixel 9, Pixel 9 Pro and Pixel 9 Pro XL all keep the traditional flat-slab smartphone shape (in 6.3″ and 6.8″ screen sizes), with a new camera design on the back providing a modest visual change.

However, the updated 9 Pro Fold stands out with a shorter, wider, and thinner shape, clearly distinguishing it from foldable competitors like Samsung’s Galaxy Z Fold. Interestingly, the Pixel 9 Pro Fold’s design is actually thinner and taller than the original Pixel Fold.

The aspect ratio that Google has chosen for the outside screen of the 9 Pro Fold makes it look and feel nearly identical to a regular Pixel 9, while still offering the advantage of a foldable screen that opens up to a massive 8″.

For someone who has used foldable phones for several years, this similarity to a traditional phone when folded is a much more significant change than it may first appear, and something I’m eager to try.

Inside the Pixel 9 lineup is Google’s own Tensor G4 SoC, the latest version of its mobile processor line. Built in collaboration with Samsung’s chip division, the Tensor G4 features standard Arm CPU and GPU cores but also incorporates Google’s proprietary TPU AI accelerator.

The whole line of phones incorporates upgraded camera modules, with the 9 Pro and Pro XL, in particular, sporting new 50MP main, 48MP ultrawide and 42MP selfie sensors. The phones also offer higher amounts of standard memory with 16 GB the new default on all but the $799 Pixel 9 (which features 12 GB standard). This will be critical because AI models like Gemini Nano require extra memory to run at their best.

In truth, though, it isn’t the hardware or the tech specs where Google made its most compelling case for switching to a new Pixel 9 – it was clearly in the software. In fact, the Gemini aspect is so important that on Google’s own site, the official product names of the phones are listed as Pixel 9 (or 9 Pro, etc.) with Gemini.

As Google announced at its I/O event this spring, Gemini is the replacement for Google Assistant, and it’s available on the Pixel 9 lineup now, with plans to roll it out to other Android phones (including those from Samsung, Motorola, and others) later this month. While Google has a reputation for frequently swapping names and changing critical functionality on their devices (or services), it seems they’ve made this shift from Google Assistant to Gemini in a more comprehensive and thoughtful manner.

Gemini appears in several different but related ways. For the kind of “smart assistant” features that integrate knowledge of an individual’s preferences, contacts, calendar, messages, etc., the “regular” version of Gemini provides GenAI-powered capabilities that leverage the Gemini Nano model on-device. Gemini Nano also powers the new Pixel Weather app and the new Pixel Screenshots app, which can be used to manually track and recall activities and information that appear on your phone’s screen. (In a way, Screenshots is a manual version of Microsoft’s Recall function for Windows: rather than capturing your screen automatically as Recall does, it works from the screenshots you choose to take.)

Gemini Live offers a voice-based conversational assistant with which you can hold full, back-and-forth conversations. In its first iteration, it functions entirely in the cloud and requires a subscription to Gemini Advanced (the first year of which is included with all but the basic Pixel 9).

In multiple demos of Gemini Live, I was impressed with how quickly and intelligently the assistant (which can use any one of 10 different voices) responds – it’s by far the closest thing to talking with an AI-powered digital persona I’ve ever experienced. Unfortunately, Gemini Live doesn’t run on-device just yet, which means it can’t access the personalized information that the “regular” on-device Gemini model can, but combining these two types of assistant experiences is clearly where Google is headed.

Even so, the kinds of tasks you can use Gemini Live for, such as brainstorming sessions, researching topics, prepping for interviews, and more, seem very useful on their own.

Another intriguing implementation of Gemini Live is seen through Google’s new Pixel Buds Pro 2, which were also introduced at the event. By simply tapping on one earbud and saying “Let’s talk live,” you can initiate a conversation with the AI assistant – one that can go on for an hour or more if you choose.

What’s interesting about using earbuds instead of the phone is that it will likely prompt different kinds of conversations, since an audio-only exchange through earbuds feels more natural than holding a phone and talking into its screen.

In addition to the Gemini-powered features, Google also debuted other AI-enabled software that’s unique to the Pixel, including an updated Magic Editor for photos that takes Google’s already impressive image editing capabilities to yet another reality-bending level. It really is getting harder to tell what’s real and what’s not when it comes to phone-based photography.


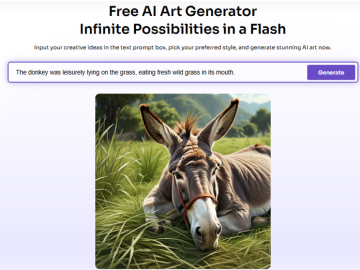

For image generation, Google showed Pixel Studio, which leverages both an on-device diffusion model and a cloud-based version of the company’s Imagen 3 GenAI foundation model. Finally, the clever new Add Me feature lets you get a group shot by merging two different photos (one you take of your subject(s), and one that someone from the group takes of you in the same spot), using augmented reality to create a shot of everyone there – without having to ask a stranger to take it for you!

Ultimately, the most important aspect of Google’s launch is that they managed to provide practical uses for the AI experiences that are likely to appeal to a broad range of people. At a time when some have started to voice concerns about an AI hype cycle and a lack of capabilities that really matter, Google hammered home that the impact of GenAI is not only real, but compellingly so.

Plus, they dropped hints of even more interesting capabilities yet to come. I have little doubt some speed bumps will accompany these launches – as they always seem to do with AI-related capabilities – but I’m now even more convinced that we’re just at the start of what promises to be a very exciting new era.

Bob O’Donnell is the president and chief analyst of TECHnalysis Research, LLC, a market research firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow Bob on Twitter @bobodtech