7/8/2024

Since the release of ChatGPT in 2022, generative artificial intelligence chatbots have been heralded as the next big technology that will move society forward, promising increased efficiency and productivity. In medicine, particularly in specialty areas like infectious diseases, there have been many proposed uses, from augmenting clinical care and documentation to advancing medical education and facilitating research. (1)

Despite these opportunities, the use of these chatbots remains limited, with only 20% of employed American adults using them for work-related tasks. Additionally, usage tends to decrease with age, with only 10% of adults over 50 engaging with these tools. (2)

One potential reason is the sometimes generic and uninspiring responses these chatbots generate. However, the quality of a chatbot’s output is directly tied to the quality of the input it receives, making effective prompts particularly crucial in medical tasks. (3)

For users of large language models, there’s no need for advanced coding skills; natural language is sufficient to interact with these machines. This natural language-based input, also called a prompt, is the way to communicate with a chatbot and obtain a response. Prompts can range from simple instructions or questions to complex multistep instructions involving more than one type of input. The art of crafting prompts is called prompt design. The process of refining and testing a prompt over repeated iterations until you attain the desired output is called prompt engineering. (4,5)

Prompt design is an excellent way to introduce AI to newcomers, while prompt engineering helps transform casual users into savvy ones. As AI continues to spread to various fields, including medicine, mastering these skills becomes increasingly valuable, offering a universal tool set applicable across all large language models.

The CO-STAR framework

There are numerous strategies for skillfully crafting prompts, but one particularly simple and effective method is the CO-STAR framework: context, output, specificity, tone, audience and response. (6) These prompt elements are designed to help generate the most specific and personalized output for your needs.

For context, set the scene by indicating your role (e.g., ID provider, pharmacist), your focus (clinician, researcher, educator) and/or the setting (clinic, inpatient, community) where you work. For output, clearly define what you expect the chatbot to produce, e.g., a letter, blog article, list, 500-word essay, visual image, handout or quiz. Be as specific about the topic as possible.

For instance, if you need a handout on latent tuberculosis, specify whether the focus should be latent TB testing, treatment options or a general overview of latent TB. Use tone descriptors like “empathetic,” “easy-to-understand,” “professional” or “scholarly,” and indicate the target audience in the prompt to ensure that the output matches your intended communication style and audience.

Often, the first version of your prompt will not produce the output you want. Iterative refinement, in which you repeatedly tweak elements of the CO-STAR framework until you arrive at the best version of the prompt, is considered good practice.
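The CO-STAR elements and the refinement loop described above can be sketched in a few lines of Python. This is an illustration only, not part of the framework itself: the class name `CoStarPrompt`, its `render` method and the sample wording are hypothetical, and the "response" element is read here as the desired response format, which is an assumption.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CoStarPrompt:
    """Hypothetical container with one field per CO-STAR element."""
    context: str
    output: str
    specificity: str
    tone: str
    audience: str
    response: str  # assumption: interpreted as the desired response format

    def render(self) -> str:
        # Label each element on its own line so the parts stay distinct.
        parts = [
            ("Context", self.context),
            ("Output", self.output),
            ("Specificity", self.specificity),
            ("Tone", self.tone),
            ("Audience", self.audience),
            ("Response", self.response),
        ]
        return "\n".join(f"{label}: {text}" for label, text in parts)


draft = CoStarPrompt(
    context="I am an ID provider working in an outpatient clinic.",
    output="Write a one-page handout.",
    specificity="Focus on treatment options for latent tuberculosis.",
    tone="Empathetic and easy to understand.",
    audience="Adult patients newly diagnosed with latent TB.",
    response="Short paragraphs followed by a bulleted summary.",
)

# Iterative refinement: change one element at a time, then compare the outputs.
revised = replace(draft, specificity="Focus on latent TB testing.")

print(draft.render())
print()
print(revised.render())
```

Keeping each element in its own field makes it easy to change one thing per iteration and see which change actually improved the chatbot's response.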

Other strategies

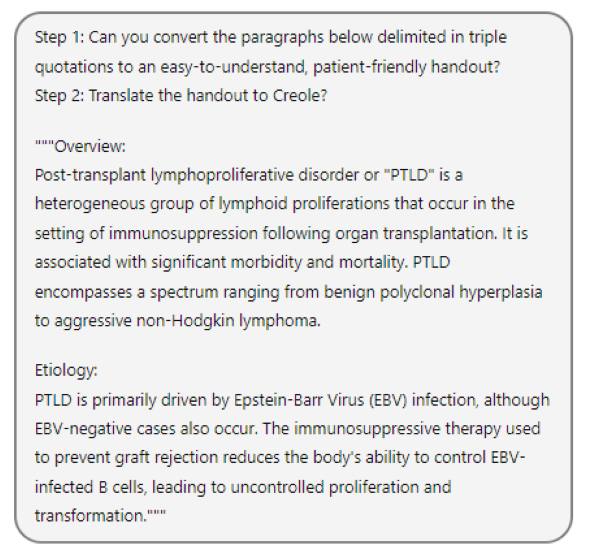

Delimiters such as colons, semicolons, line breaks, quotation marks and triple quotation marks (""") are useful for delineating the different parts of a prompt, particularly when the prompt involves multiple parts or steps. Additionally, if your prompt does involve multiple steps, break it down into individual steps so you can examine the intermediate output before the final output is generated. (7)
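As a rough illustration of delimiters and multistep prompting, the sketch below numbers each step and encloses the source text in triple quotation marks. The function name `build_multistep_prompt`, the step wording and the placeholder text are all hypothetical; any chatbot that accepts plain text could receive the resulting string.

```python
def build_multistep_prompt(instructions, steps, source_text):
    """Assemble a prompt with numbered steps and a delimited source text."""
    numbered = "\n".join(
        f"Step {i}: {step}" for i, step in enumerate(steps, start=1)
    )
    delimiter = '"""'
    return (
        f"{instructions}\n\n"
        f"{numbered}\n\n"
        # Asking for intermediate results lets you check each step's output.
        "Show the result of each step before moving to the next.\n\n"
        f"Text:\n{delimiter}\n{source_text}\n{delimiter}"
    )


prompt = build_multistep_prompt(
    instructions="You will be given a text enclosed in triple quotation marks.",
    steps=[
        "Summarize the text in three sentences.",
        "Rewrite the summary at a sixth-grade reading level.",
        "Turn the rewritten summary into a patient handout.",
    ],
    source_text="(paste the source text here)",
)
print(prompt)
```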

Prompting for multimodal chatbots and retrieval-augmented generation

One feature that was recently added to commercial AI chatbots is multimodality of input and output. This means that beyond text prompts, various file types such as images, documents, audio recordings and web links can be uploaded as part of your input, allowing for a similarly diverse range of outputs. When prompting these multimodal chatbots, the CO-STAR framework remains a robust method for structuring prompts. However, it’s important to indicate any uploaded file in your prompt and specify how you want the chatbot to handle it. If you need to generate any other output beyond text (e.g., an image file or an Excel or .csv file), it is also necessary to specify this in your prompt.
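One way to make sure a multimodal prompt names every uploaded file and the desired output type is to build the text from a small template. The sketch below is an assumption-laden illustration: the function `describe_attachments`, the file name `antibiotics.xlsx` and the column names are hypothetical, and the files themselves would still be uploaded through the chatbot's own interface.

```python
def describe_attachments(task, attachments, output_format):
    """Build a text prompt that names each uploaded file and the output type."""
    lines = [task, "", "Attached files:"]
    for filename, handling in attachments.items():
        # State how the chatbot should use each file, not just that it exists.
        lines.append(f"- {filename}: {handling}")
    lines += ["", f"Output format: {output_format}"]
    return "\n".join(lines)


prompt = describe_attachments(
    task="Create a flash card-type quiz for pharmacy residents.",
    attachments={
        "antibiotics.xlsx": "use each row as the source for one flash card",
    },
    output_format="a .csv file with the columns question and answer",
)
print(prompt)
```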

Additionally, the integration of retrieval-augmented generation, or RAG, into chatbots like ChatGPT has further enhanced their accuracy and functionality. RAG is a natural language processing technique that combines generative AI with targeted information retrieval to improve the accuracy and relevance of the output. For example, if you would like to generate test questions on antibiotics, you can upload a reference document and prompt the chatbot to retrieve information from this file before generating output. Doing so helps keep the content of your output consistent with your reference document and makes it less prone to errors.
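The retrieve-then-generate idea can be shown with a toy sketch. It is not how any particular chatbot implements RAG: production systems typically rank passages with learned embeddings, whereas this example swaps in simple word overlap so that it runs with the standard library alone. The function names and the placeholder passages are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, passages, top_k=1):
    """Rank passages by how many words they share with the question."""
    query = tokenize(question)
    ranked = sorted(
        passages, key=lambda p: len(query & tokenize(p)), reverse=True
    )
    return ranked[:top_k]


def build_grounded_prompt(question, passages, top_k=1):
    """Place retrieved passages ahead of the task and restrict the answer to them."""
    context = "\n".join(retrieve(question, passages, top_k))
    return (
        "Use only the reference text between the triple quotation marks.\n"
        f'"""\n{context}\n"""\n'
        f"Task: {question}"
    )


# Placeholder passages standing in for chunks of an uploaded reference document.
reference = [
    "Section A: placeholder notes on vancomycin dosing and monitoring.",
    "Section B: placeholder notes on hand hygiene audits.",
]
prompt = build_grounded_prompt(
    "Write one quiz question on vancomycin dosing.", reference
)
print(prompt)
```

Only the passage that best matches the task reaches the prompt, which is the step that ties the generated content to the reference document.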

Examples of prompt design and engineering in ID

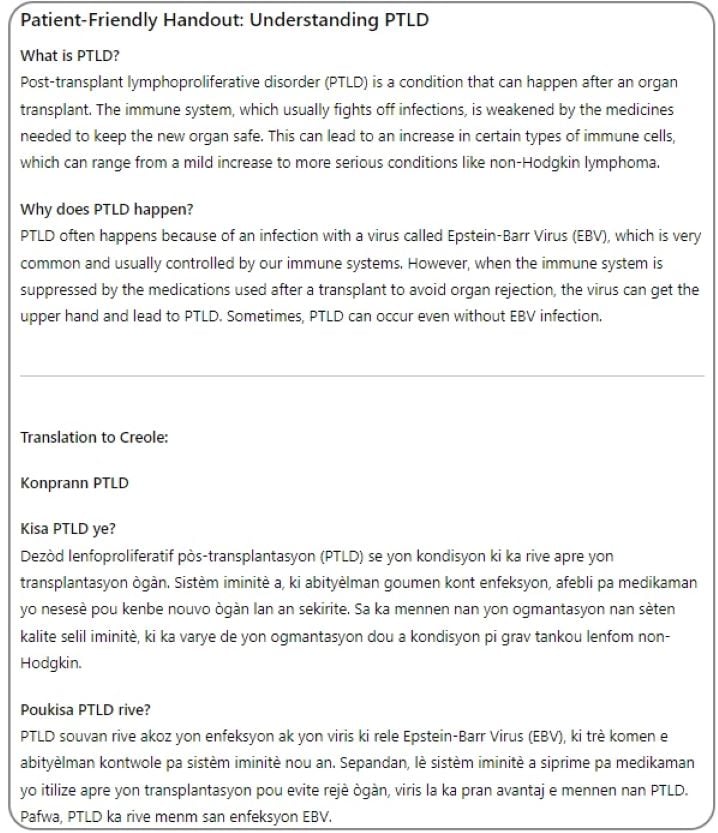

Example #1: Generating a customized handout for a patient in Creole showing the use of some elements of the CO-STAR framework, multistep prompting and the use of delimiters.

Prompt:

Chatbot response:

Example #2: Prompt design using the CO-STAR framework and prompt engineering to create a case-based quiz.

Prompt:

Chatbot response:

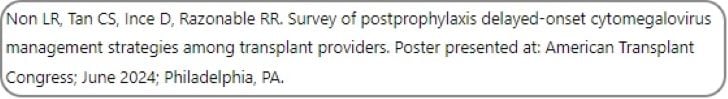

Example #3: Multimodal input, adding specificity and customization in a text prompt, and the use of delimiters.

Prompt:

Chatbot response:

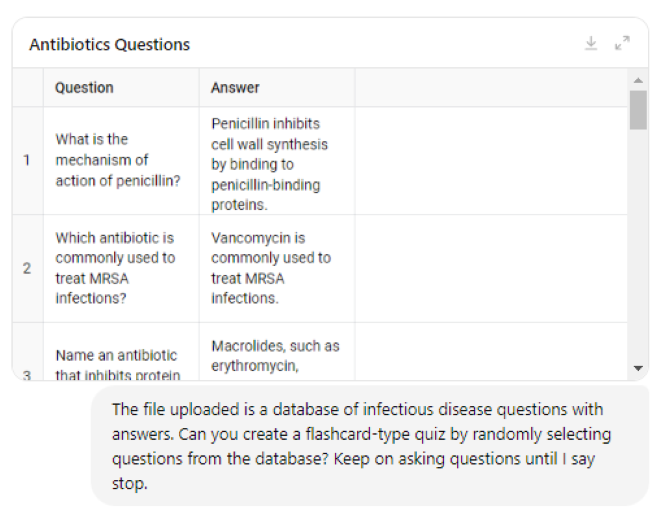

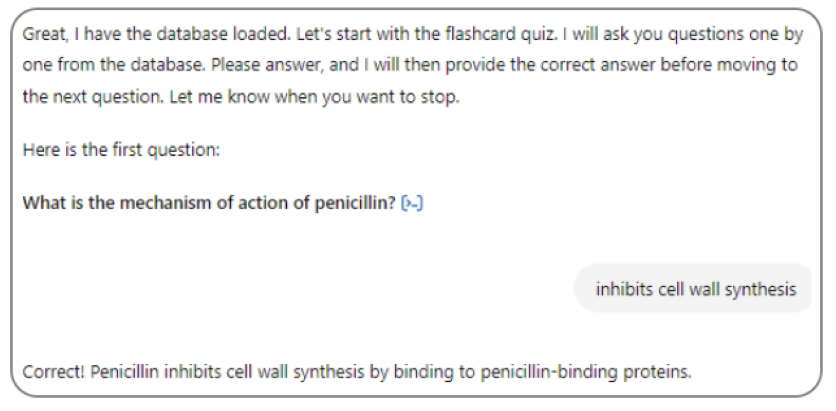

Example #4: Another example using multimodal input, this time using an Excel file to create a flash card-type quiz.

Prompt:

Chatbot response:

Other considerations

One significant limitation of large language models, particularly for specialized topics like ID, is the occurrence of “hallucinations,” the generation of incorrect or misleading information that appears to be correct. This issue is intrinsic to generative AI technologies. However, the frequency of such errors is expected to decrease as newer LLMs are trained on increasingly comprehensive datasets. Several strategies demonstrated in the examples above can also mitigate this issue: prompt engineering techniques (e.g., “generate a response only if 100% certain”) and reference file uploads that take advantage of RAG can significantly enhance the accuracy of outputs.

Ultimately, the output from any AI chatbot should always be checked and confirmed for accuracy. Relying solely on AI chatbots for creating content in specialty fields like ID is not advisable without the capability to confirm the information independently.

In a specialty field like ID, human-AI interaction should follow the co-pilot model, where both human and machine are needed to steer this technology to the right place. To be a co-pilot, however, one needs to know how to talk to the machine, and a key first step is learning how to prompt it effectively and understanding its limitations.

References

1. Non LR. All aboard the ChatGPT steamroller: Top 10 ways to make artificial intelligence work for healthcare professionals. Antimicrob Steward Healthc Epidemiol. 2023;3(1):e243. doi:10.1017/ash.2023.512

2. McClain C. Americans’ use of ChatGPT is ticking up, but few trust its election information. Pew Research Center. March 26, 2024.

3. Wang L, Chen X, Deng X, et al. Prompt engineering in consistency and reliability with the evidence-based guideline for LLMs. NPJ Digit Med. Feb. 20, 2024;7(1):41. doi:10.1038/s41746-024-01029-4

4. Mesko B. Prompt Engineering as an Important Emerging Skill for Medical Professionals: Tutorial. J Med Internet Res. Oct. 4, 2023;25:e50638. doi:10.2196/50638

5. Introduction to prompting. Generative AI on Vertex AI. Updated June 6, 2024.

6. Non LR. Prompt Engineering: The Basics. Medium and the Machine Blog. Jan. 15, 2024.

7. Prompt Engineering. OpenAI Platform. Accessed June 7, 2024.