A few months ago, a young Black gay patient in a southern state tapped out a message on his phone that he might not have been comfortable raising in person at his local sexual health clinic. “I’m struggling with my relationship with sex,” he wrote, knowing there’d be an immediate response, and no judgment. “I feel like sometimes it’s an impulse action and I end up doing sexual things that I don’t really want to do.”

“Oh, honey,” came a swift response, capped with a pink heart and a sparkle emoji. “You’re not alone in feeling this way, and it takes courage to speak up about it.” After directing him to a professional, it added, “while I’m here to strut the runway of health information and support, I’m not equipped to deep dive into the emotional oceans.”

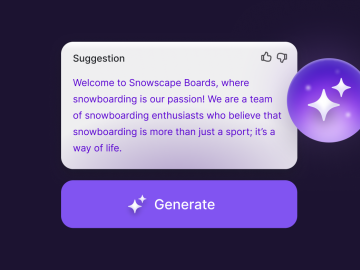

That’s among thousands of delicate issues patients have shared confidentially with an artificial intelligence-powered chatbot from AIDS Healthcare Foundation, a nonprofit offering sexual health and HIV care at clinics in 15 states. The tool can dispense educational information about sexually transmitted infections, or STIs, in real time, but is also designed to manage appointments, deliver test results and support patients, especially those who are vulnerable to infections like HIV but have historically been underserved. And as part of a provocative new patient engagement strategy, the foundation chose to deliver those services with the voice of a drag queen.

“Drag queens are about acceptance and taking you as you are,” said the foundation’s vice president for public health, Whitney Engeran-Cordova, who told STAT that he came up with the drag queen persona concept. “You’re getting the unvarnished, non-judgmental, empathetic truth.”

For several months, the foundation has offered the “conversational AI” care navigation service based on OpenAI’s GPT-4 large language model, and thousands of patients across the country have opted in to using it to supplement their in-person care. While they can select a “standard” AI that’s more direct, early data from a pilot at seven clinics in four states suggests the majority — almost 80 percent — prefer the drag queen persona.

The model, developers told STAT, was fine-tuned using vocabulary from interviews with drag performers, popular shows like RuPaul’s Drag Race, and input from staff familiar with drag culture. Health care workers, patients and performers are regularly invited to test it out and improve it.

It’s an early case study on the vast potential, and limitations, of AI tools that reach patients directly without immediate human oversight. Fearing hallucination, bias or errors, health care providers have so far largely avoided deploying AI-powered tools directly to patients until their risks are better understood. Instead, they mostly use AI to draft emails or help with back-office workflow.

But the foundation and Healthvana, the Los Angeles-based tech company that built the tool, said they are comfortable with the technology. They say they adhere to rigorous testing, strictly avoid giving medical advice, and constantly calibrate the model to help clinics safely field more patients than in-person staff could otherwise manage. By the time patients get to their appointments, they often have already received basic sexual health information through the chatbot, which can serve as a friendly, conversational source for potentially life-saving tips, like using condoms or PrEP.

Patients can consult the bot at any time, meaning they can also ask about precautions right before having sex, Engeran-Cordova added. “We’ve never been able to get this close to the moment of action, as a prevention provider” in a way that’s “comfortable, not stalker-y.”

It’s not perfect — sometimes the chatbot uses the wrong word or tone — but its creators assert that the benefits of reaching more patients outweigh the risks. Its missteps have also been minor, they say: An early version of the bot was overzealous, and congratulated a patient when she disclosed a pregnancy that might have been unwanted, for instance.

“What is [the] cost of a wrong answer here, versus what is the benefit of tens of thousands of people who suddenly have access to care? It’s a no-brainer,” said Nirav Shah, a senior scholar within Stanford Medicine’s Clinical Excellence Research Center and an advisor to Healthvana.

Engeran-Cordova said he also has pressure-tested the technology to ensure it doesn’t repeat or reflect back harmful or offensive questions about sexual health or sexuality.

“We would intentionally have yucky conversations that somebody might say, and I even felt creepy typing them in,” he said. The tool, he recalled, “would come back around and say, ‘We don’t talk about people that way, that’s not really a productive way for us to discuss your health care.’”

Having staff scrutinize the bot and routinely evaluate its responses after they’re sent helps it come across as human and empathetic, Engeran-Cordova said. “There’s an emotional intelligence that you can’t program.”

The chatbot also saves clinical staff time, according to Engeran-Cordova. “Our interactions with people get fairly limited in time — we’re talking about a 10-minute, a 15-minute amount,” he said. If a patient has already asked the tool basic questions, it “moves the conversation down the road” during the appointment, allowing the provider to focus on more immediately pressing issues.

Some outside experts agree that humans don’t necessarily need to review each message before it’s sent. If the tool isn’t giving medical advice, but rather information about scheduling or STIs, “the fact that they are overseeing the interaction [at all] is a lot more than what consumers get when they use Dr. Google (Internet search),” Isaac Kohane, a Harvard biomedical informaticist and editor-in-chief of the New England Journal of Medicine’s AI publication NEJM AI, said in an email. “At least in this instance which information they see first will not be influenced by paid sponsorships and other intentional search engine optimizations.”

Healthvana has an agreement with OpenAI to protect patients’ health information under the federal privacy rule HIPAA. Any identifiable information was stripped from conversations shared with STAT.

The creators of the drag queen model and its evaluation process hope to detail their findings in an upcoming research paper, which hasn’t yet been accepted by a medical journal. Part of the goal is to demonstrate to other public health groups that rigorously tested AI tools might not always need the so-called “human-in-the-loop” in real time — as long as they’re evaluating the communication after the fact, Healthvana founder Ramin Bastani said.

The health industry hasn’t yet established standards for benchmarking patient-facing tools; one of Healthvana’s executives sits on a generative AI workgroup within the Coalition for Health AI — the industry body working with federal regulators to set evaluation and safety standards — to begin tackling these questions.

While deploying the technology too early could certainly harm patients, “if there’s no access for communities of color…then we’re going to be left behind,” said Harold Phillips, former director of the White House Office of National AIDS Policy, who co-authored the unpublished paper on the pilot.

Seventy percent of messages sent to the chatbot during the pilot were from people of color. If tested carefully, chatbots won’t necessarily propagate medical discrimination, stigma and bias, Phillips said, adding, “many health care providers today are still really uncomfortable talking about sexual health or answering questions, and some patients are uncomfortable asking questions.”

It’s too early to tell whether the bot has actually made people measurably healthier. But Engeran-Cordova said he plans to work with an epidemiologist and research staff to monitor how interacting with the bot impacts patients’ STI testing patterns and their adherence to medication, for instance.

While the tool is meant to be lighthearted, it has the potential to draw the ire of conservative politicians at a time when sexual and reproductive rights have been increasingly restricted.

But it’s designed to foster especially difficult conversations — about, say, a positive STI result — with sensitivity and empathy, Engeran-Cordova said. And if a patient feels playful, like the millennial male who asked if he “should be worried about it raining men,” it can match their tone. “Honey, if it’s raining men, grab your most fabulous umbrella and let the blessings shower over you!” it said, before reminding him, “in the world of health and wellness, always make informed choices that keep you sparkling like the star you are!”

And what’s the bot called? During an evaluation session, Engeran-Cordova said, it named itself. “I asked her what her drag name would be, and she said ‘Glitter Byte.’”