The Brandtech Group, a digital-only marketing company and global generative AI (Gen AI) marketing firm, has unveiled proprietary technology called “Bias Breaker” that tackles bias in foundation models.

This technology is part of Brandtech’s raft of initiatives to promote and set boundaries for the ethical use of generative AI, including a blueprint to create an ethical Gen AI policy.

Rebecca Sykes, Partner and Head of Emerging Technology, The Brandtech Group, said: “As very early adopters, we have a responsibility to lead. We believe all companies that use Gen AI should commit to what they will and won’t use it for and be transparent about this. We are committed to being an active participant in shaping a more responsible and ethical future of AI in marketing and advertising.”

The package represents Brandtech’s commitment to delivering Gen AI responsibly and ethically across three key areas: the teams it hires, promotes and trains; the tech it builds; and the services it offers.

After more than a year of development, Brandtech’s Bias Breaker is now live and integrated with Pencil, which has already created more than 1 million ads for 5,000+ brands. The $1bn in media spend that has passed through Pencil also enables it to make performance predictions.

Breaking the bias

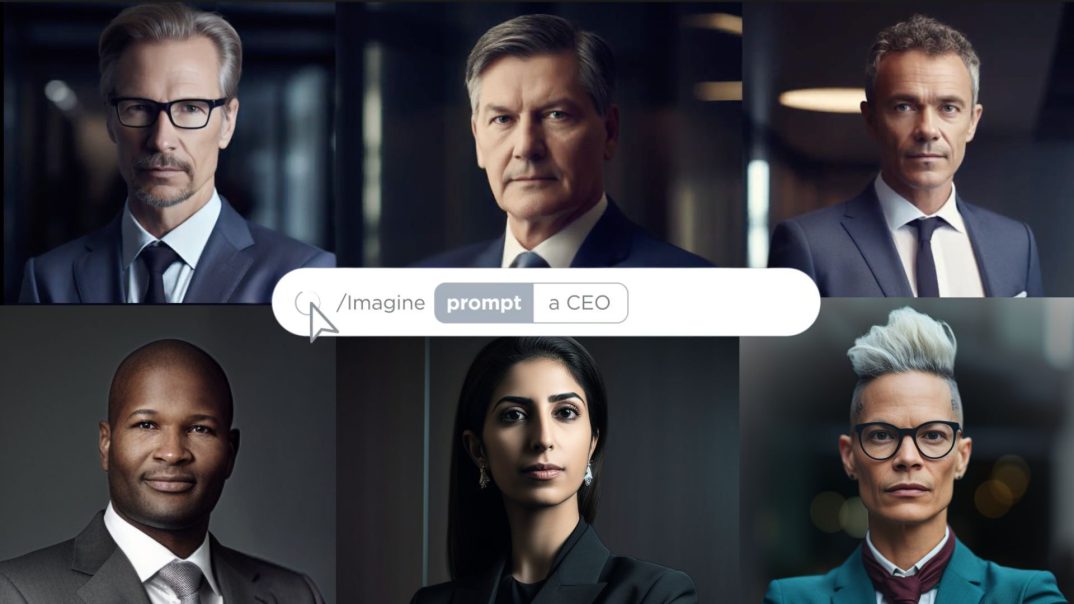

Brandtech’s own research, which examined just one of the many types of bias, found that several major foundation models continue to generate images of males 98 per cent to 100 per cent of the time when prompted for “a CEO”, with the majority over-indexing for male (or male-appearing) CEOs versus reality.

The research showed significant bias. Two of the models produced male-appearing images in all 100 of 100 generations when prompted for an image of a CEO. Another was 98 per cent male, and two others showed male CEOs 86 per cent and 85 per cent of the time. The real-world figure is better, although far from equal.

McKinsey’s Women in the Workplace study last year identified that 28 per cent of C-suite roles were held by women and 10.4 per cent of CEOs of Fortune 500 companies were female.

The proprietary Bias Breaker technology adds a layer of probability-backed inclusivity to prompts. Brandtech configured several of the most common elements of diversity, which, in this iteration of Bias Breaker, include age, race, ability, gender identity, and religion.

So, when a user enters a simple prompt, e.g. “a CEO”, the tool adds a number of types of inclusivity, which vary each time, creating a more sophisticated prompt to use in any image generation model. This produces images which, instead of reflecting bias in the training data, add positive bias towards a wide spectrum of diversity and intersectionality that current models simply do not provide for.
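The article does not disclose how Bias Breaker is implemented, so the following is only a minimal sketch of what probability-weighted prompt augmentation could look like. The five dimensions are those named above; the function name `augment_prompt`, the descriptor values, the weights and the inclusion probability are all hypothetical placeholders, not Brandtech’s configuration.

```python
import random

# Hypothetical attribute tables. The five dimensions come from the article;
# every descriptor and weight below is an illustrative placeholder.
ATTRIBUTES = {
    "age": (["in their 30s", "in their 50s", "in their 70s"], [0.4, 0.4, 0.2]),
    "race": (["Black", "East Asian", "South Asian", "white"], [0.25, 0.25, 0.25, 0.25]),
    "ability": (["using a wheelchair", "wearing hearing aids"], [0.5, 0.5]),
    "gender identity": (["woman", "man", "non-binary person"], [0.45, 0.45, 0.10]),
    "religion": (["wearing a hijab", "wearing a turban", "wearing a kippah"], [0.34, 0.33, 0.33]),
}

# Assumed chance that any one dimension is added to a given prompt.
INCLUDE_PROBABILITY = 0.5


def augment_prompt(prompt, rng, include_probability=INCLUDE_PROBABILITY):
    """Return the prompt with a randomly chosen set of descriptors appended."""
    descriptors = []
    for values, weights in ATTRIBUTES.values():
        # Decide independently whether this dimension appears at all.
        if rng.random() < include_probability:
            # Draw one descriptor according to the configured weights.
            descriptors.append(rng.choices(values, weights=weights, k=1)[0])
    if not descriptors:
        return prompt
    return f"{prompt}, {', '.join(descriptors)}"


if __name__ == "__main__":
    rng = random.Random()
    for _ in range(3):
        # Each call may yield a different combination of descriptors.
        print(augment_prompt("a CEO", rng))
```

Because each dimension is sampled independently, repeated calls with the same input produce different combinations, which is one way to get the varying, intersectional prompts described above. The enriched string can then be passed to any image generation model.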

Tyra Jones-Hurst, the Managing Partner at Oliver US, and Founder of InKroud, said, “The answer to this problem of bias in Gen AI is far from set in stone but one thing’s for certain – brands and advertisers cannot simply accept bias as the status quo. One way to address it is to prioritise inclusion through creating automated features where they don’t already exist, and integrate them to build on top of foundation model use. This is what we have done with Bias Breaker.”

Rebecca Sykes added, “Looking at CEO examples is just one way bias shows up, and the simple use case we have used to demonstrate the challenge and implications of not addressing bias. There are many other examples, ranging from the obvious – nurses and carers are mostly female, there is a stark lack of disability etc – to much more nuanced instances. Over time, we hope to address all of this.”

The Brandtech Group Ethical Gen AI package

Brandtech’s Ethical Gen AI package also includes a number of practical measures. Among them is a blueprint for creating an ethical Gen AI policy, which incorporates the group’s extensive learnings across this discipline and is available free to anyone. The group aims to go beyond compliance with any statutory regulations.

All Brandtech client-facing teams have been given training and a discussion guide on how to assist a client through an ethical decision-making process for Gen AI. Additionally, Brandtech Consulting, the group’s strategic offer, and InKroud, the multi-cultural consultancy that is part of Oliver, can offer six-week Gen AI Ethical Sprints to clients.

Brandtech has found that CMOs are increasingly asking questions about the ethical use of Gen AI as they move from pilots to scaled deployment, and the Ethical Gen AI package, including Bias Breaker, directly answers those questions.

David Jones, founder and CEO of Brandtech, said: “When social media exploded onto the scene no-one saw the negative issues coming. With Gen AI, we have the ability to get ahead, instead of simply being reactive after the event. Bekki, Tyra and the Gen AI teams have worked extensively with our clients to create best practices and lead the market.”