The participants in this session were:

- Chase Conching, Principal and Creative Director of Library Creative

- Ryley Higa, Machine Learning Engineer at Sumo Logic

- Yolanda Lau, Consultant, Educator and Co-Founder of Hawai‘i Center for AI

- Liya Safina, Digital Design and Innovation Contractor for Google and other companies

- Moderator: Ryan Ozawa, Emerging Tech Editor for Decrypt and Founder of Hawaii Hui

Ozawa: This session is practical and hands-on. We’re going to open up that AI toolbox. Raise your hand if you’ve fired up ChatGPT. (scans audience) Almost everybody. Who has an AI app on their smartphone? (scans audience) About half. So rather than getting into basics, we’ll go deeper. Yolanda, what are the innovative platforms you use?

Lau: ChatGPT 4 – and I think you should start there. Before that launched I told people to use Meta AI as their introduction. I think each of the large language models has its own strengths and weaknesses. Meta AI is generally better for short-form content. I prefer Claude for longer-form content. If I’m coding, I prefer ChatGPT. But the truth is I have a browser open with all of them in separate tabs. I will try prompts in all of them, then keep iterating with a model or two before I finally pick the one for that specific task.

Ozawa: We’ll start with the text generators most people are familiar with. We’ll go through text, image, video and specialized business applications. Chase, as a branding strategist, you do a lot of writing. You want to represent the client’s voice and certainly the spirit of Hawai‘i, and we’ve learned that can be a challenge given what the broad internet has taught people about Hawai‘i. How do you use the text tools?

Conching: There was a good question from the audience in a previous session about the lack of information about Indigenous people in these large language models. What’s really cool is that we now have access to a hui of people with Hawai‘i photos and offline text who are using that material to inform these language models, and they plan to open source these Indigenous datasets.

Ozawa: A group called Indigenous AI is focused on improving that representation around the world – the availability of the datasets and the quality of the outputs. Are you using ChatGPT or another text tool?

Conching: Like Yolanda, I go back and forth between a few different large language models. ChatGPT is one; its multimodality is a game changer for me. I also use Claude. I’m on the go quite a bit, so I use mobile apps for ChatGPT, Pi and Claude for different reasons.

Ozawa: Ryley, as a software developer, what does your toolbox look like?

Higa: For generative text, I mainly use ChatGPT and Claude. I use GitHub Copilot for personal projects, and I find it a very useful tool for programming.

Safina: If you’re trying to generate imagery that’s very specific to a culture, the large language models cannot get that specific. One workaround is to leverage either OpenAI or Adobe Firefly within Photoshop to generate a piece of an image that I want, rather than trying to have it get everything right all at once. It’s like a puzzle: You get each piece individually correct first, then work on the whole.

For image generation, Midjourney of course. Midjourney will also analyze imagery you send it. For instance, if you are working with a particular photographer, style or artist, you can send Midjourney a reference image and ask it to analyze the image, so Midjourney tells you how it would describe it. Then you can use that description in your prompts, describing what you want the way Midjourney describes it rather than the way humans describe it.

I also use AI because I’m an immigrant; English is my second language and the metric system is my first way of measuring everything. I needed work done in my backyard and I couldn’t estimate the area’s size in square feet. So I sent photos taken from different angles to OpenAI and asked: Can you estimate it in square feet?
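For readers doing the same conversion by hand, the arithmetic behind Safina’s question is short: a foot is defined as exactly 0.3048 m, so one square meter is about 10.76 square feet. The 5 m × 4 m yard below is a made-up example, not her actual backyard.

$$
1\ \text{m}^2 = \frac{1}{0.3048^2}\ \text{ft}^2 \approx 10.764\ \text{ft}^2,
\qquad
5\ \text{m} \times 4\ \text{m} = 20\ \text{m}^2 \approx 20 \times 10.764\ \text{ft}^2 \approx 215.3\ \text{ft}^2
$$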

Ozawa: Yolanda, why is Claude your preference for long-form content versus ChatGPT?

Lau: I think it’s more about the style. I find ChatGPT too stiff, while Gemini is too informal. Claude strikes a nice middle ground. I like what Liya said about starting in the corner of an image. I feel the same way about writing. If you ask ChatGPT or any LLM to provide generic content – an article about whatever – you’re going to get something generic and terrible. But if you start by asking, “Can you talk about this one idea?” and then build off that, that’s how you get the results you want.

Left: Yolanda Lau, Right: Ryan Ozawa

Ozawa: Chase, how do you use tools so you don’t get a generic response?

Conching: Provide as much context as possible. Mike Trinh in the opening session said he uses five or six different prompts to get the output as refined as possible. That is important. So is building the expertise to then say, “This is incorrect” or “This is not my style, please correct it.” Nowadays, a lot of the large language models will remember that you like to write in one particular style, or you don’t like to use this particular language. Sometimes people feel they’re bugging the program if they prompt it over and over. But it’s really helping it help you.

Safina: With text, I find what works is reverse engineering: find a particular writer you like, feed the model that content and ask what’s so distinctive about it. What is different about the way this author structures sentences or uses descriptors? Tell me the formula in bullet points. My most common request is TLDR – too long, didn’t read – so it gives me concise answers. I learn what makes this text different and then apply that formula to the prompt I give the AI.

Ozawa: Here’s an example of a prompt I refine over multiple iterations: “You are an expert in agriculture and have a technical understanding of this and that, and are speaking to someone with a 10th grade education. How would you articulate this information?”

Higa: One technique I use when prompting ChatGPT is called in-context learning: giving it examples of how to do the task. Another technique is retrieval-augmented generation. That means you’re providing facts and knowledge inside the prompt so ChatGPT has the information to answer your question. Another simple technique is chain-of-thought prompting: asking ChatGPT to explain its reasoning.
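All three of Higa’s techniques show up in how a prompt is assembled. The sketch below is a minimal illustration under stated assumptions, not his actual workflow: it builds one prompt string that (1) includes facts chosen by a toy retriever, (2) shows worked examples, and (3) asks for step-by-step reasoning. The word-overlap `retrieve` function, the helper names and the sample data are hypothetical stand-ins; a real retrieval system would typically use embeddings, and no model is called here.

```python
from __future__ import annotations

import re


def tokenize(text: str) -> set[str]:
    """Lowercased set of words, used by the toy retriever below."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Hypothetical retrieval step: rank documents by how many words they
    share with the question and keep the top k (ties keep original order)."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, examples: list[tuple[str, str]],
                 documents: list[str], k: int = 2) -> str:
    sections = []
    # Retrieval-augmented generation: put the relevant facts inside the prompt.
    facts = retrieve(question, documents, k)
    sections.append("Answer using these facts:\n" + "\n".join(f"- {f}" for f in facts))
    # In-context learning: show worked examples of the task.
    for q, a in examples:
        sections.append(f"Q: {q}\nA: {a}")
    # Chain-of-thought prompting: ask the model to explain its reasoning.
    sections.append(f"Q: {question}\nA: Explain your reasoning step by step, then answer.")
    return "\n\n".join(sections)


if __name__ == "__main__":
    docs = [
        "Fathom does not use recorded meetings to train its models.",
        "Ideogram gives 100 free images each day.",
    ]
    examples = [("What does Otter do?", "It records and transcribes meetings.")]
    print(build_prompt("How many free images does Ideogram give each day?", examples, docs, k=1))
```

The resulting string would be pasted into (or sent to) whichever chat model you use; the order of sections (facts, then examples, then the question) is one reasonable choice, not a requirement.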

Lau: I use Otter to record meetings when I think taking notes will be impractical or I’m likely to miss stuff, and I always ask permission before recording. Then I can go over the transcript for details I missed.

Conching: Instead of Otter, I use Fathom for transcription because it is HIPAA compliant and SOC 2 certified (good for financial data). Also, they have a transparent policy on what data they use. They don’t use any recorded data or chat data to train their models. Before you commit to a tool, it’s important to read its policy on data retention and usage.

Ozawa: I am a fan of Otter. There’s also Fireflies.ai and other tools. Transcripts are useful if you’re looking for the actual words. Otter makes it easy to edit and correct, which you still have to do. But summary tools within Otter and Zoom are useful because you can come out of a meeting with the action items or a checklist like, “Yolanda will bring the chicken and Ryley will make rice.”

Let’s move to images. Liya got us started with Midjourney. Chase, what’s your image creation tool?

Conching: My work is mainly creative, and by extension marketing, so we do a lot of creative execution. We use image generation tools for early ideation, but there’s still absolutely a need for a human. Midjourney is one of the tools we use quite a bit.

We are developing our own models using Indigenous faces. That’s something we’re eventually hoping to open source.

An industry standard is still Adobe. I was fortunate to work with Adobe back in 2018 on their Sensei project, their early generative AI model, and that turned into Firefly. What I like about Adobe is that they are one of the only major players in the space, if not the only one, that sources training data exclusively from licensed or open source material. They pay artists for images they use in their training data. So even though they might be a little behind the curve in quality of output, they are the most ethical, in my opinion, when it comes to input.

Ozawa: Yolanda, is ChatGPT your go-to for image work?

Lau: I prefer Firefly, for the same reason as Chase: They’re not using data they’ve sourced illegally. You feel safer using content created by Firefly versus ChatGPT or Dall-E.

Higa: I usually use Firefly and Dall-E, but mainly for personal flyers for meetings and such. I often use something like Magneto, which is free and casual.

Ozawa: Canva has options like that.

Safina: Yes, Canva. For each industry, there’s one tool that tries to be everything, your Swiss Army knife, and in marketing Canva is that, allowing you to generate your own images. For presentations, you can simply drop in three photos of your team members and it will give you four options of well-designed slides with biographies and names.

I’m a designer, so I will never advocate for “Let’s replace all designers with Canva.” But there’s a time and place for AI. You will never find a designer who says, “My joy in life is creating presentations.” We want to free designers to do higher-level work. But for something as simple as a social media flyer or a presentation, use Canva. Canva is the number one tool that I would encourage all businesses to try. Tell your marketing department: “See how much time you can save to actually be more creative.”

Conching: For entrepreneurs and businesspeople who don’t have full marketing departments, Canva will save time and money. I recommend it.

Lau: I agree on Canva. Another one I use is Ideogram, which gives 100 free images each day. These image generation tools help anyone become an entrepreneur. You used to need a designer to make a starter logo for you. Now you can have AI do it. Anyone can use AI to start their own business pretty much overnight, something that would have taken months before.

Safina: One more tool specifically for presentations: Gamma AI. I used it for a conference and it took me half the time it normally takes me to prepare a conference presentation. Gamma was easy to use. And there are Beautiful.ai and 10 others that cut your time in half while creating presentations that are better than templates.

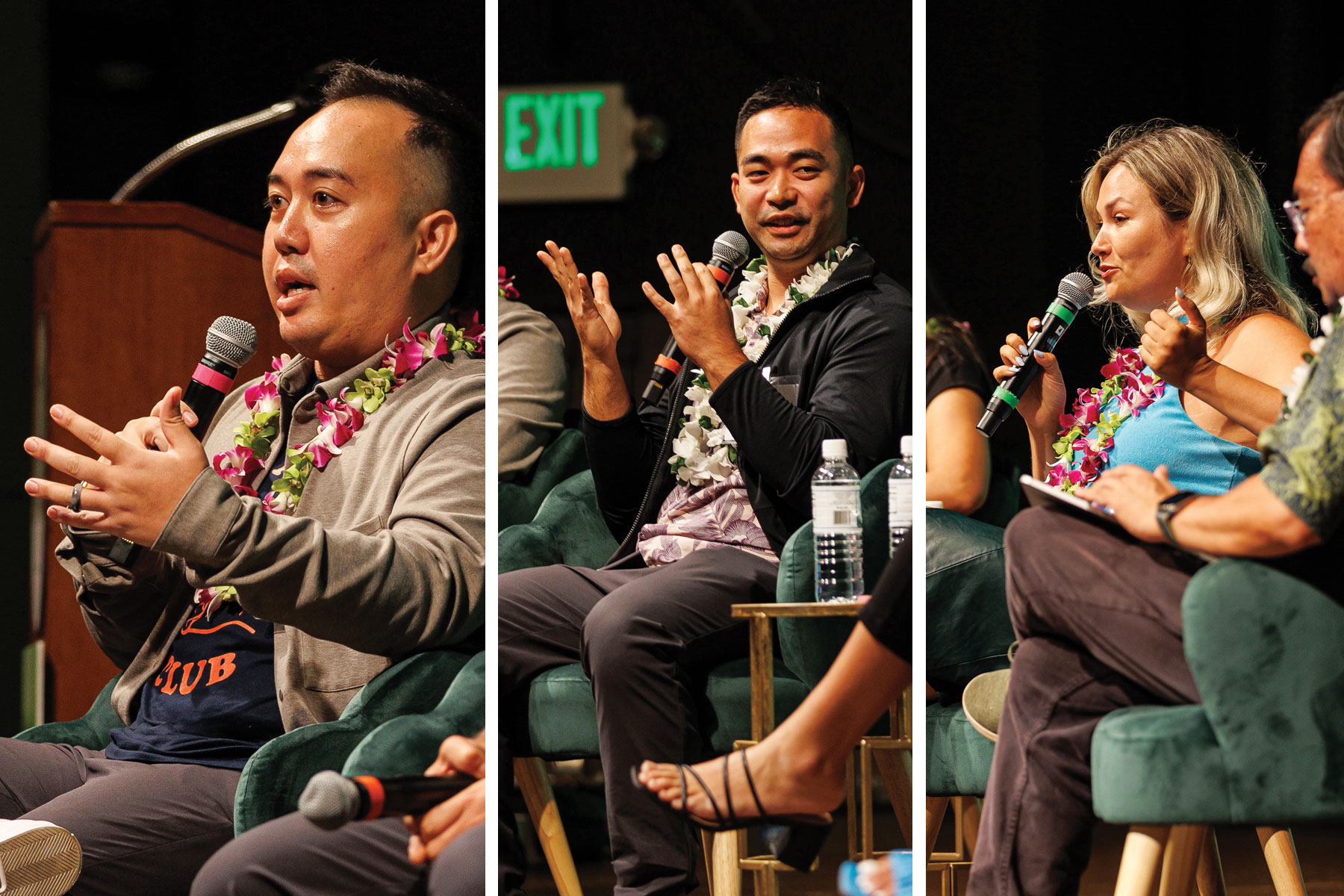

Left: Chase Conching, Middle: Ryley Higa, Right: Liya Safina

Ozawa: Let’s move to video generation. Ryley, what’s your favorite?

Higa: I used Pika, which turns text into video, but the quality was poor. For text to video, we’re not there yet.

Conching: But there are more limited tools that save tons of time for video editors. Premiere now can automatically remove objects from moving scenes so you don’t have to do it manually.

Lau: We can’t access Sora as everyday people, but you can use tools like Canva or Synthesia to make training videos. Take your pages and pages of written training content and turn them into a talking head that people can learn from. It’s easier for employees than reading and they’re likely to retain more of the information.

Ozawa: Let’s talk next about business applications. What about Zapier?

Lau: (turns to the audience) Who has used Zapier? (only about half a dozen raised hands) That’s surprising to me. Zapier is free (you can pay to get more), requires no coding, and allows anyone to automate almost anything. You don’t need to write the code to call an API (an application programming interface, which lets two applications talk to each other); you just use Zapier to make the call, and you can hook up Airtable to literally anything. There are so many uses. Everyone should have a free account.

Ozawa: Basically a translation tool between different platforms. What common applications do you see for Zapier?

Lau: Use it for anything repetitive and time-consuming that you don’t want to do yourself. I use it to pull data from standard-format emails into spreadsheets, which is the format I want. Magical.
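Zapier does this without any code, but it can help to see what such an automation actually does. The sketch below assumes a hypothetical templated email whose body contains `Key: value` lines (the field names `Name`, `Email` and `Order` are made up) and turns a batch of those emails into CSV text that a spreadsheet can import. It does not use Zapier’s API or read a real inbox.

```python
from __future__ import annotations

import csv
import io

FIELDS = ["Name", "Email", "Order"]  # hypothetical field names from an email template


def parse_email(body: str) -> dict[str, str]:
    """Pull 'Key: value' lines out of a templated email body, ignoring other text."""
    row = {}
    for line in body.splitlines():
        key, sep, value = line.partition(":")  # split at the first colon only
        if sep and key.strip() in FIELDS:
            row[key.strip()] = value.strip()
    return row


def emails_to_csv(bodies: list[str]) -> str:
    """Turn many email bodies into CSV text, one row per email."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS)  # missing fields become empty cells
    writer.writeheader()
    for body in bodies:
        writer.writerow(parse_email(body))
    return out.getvalue()


if __name__ == "__main__":
    print(emails_to_csv(["Name: Kimo\nEmail: kimo@example.com\nOrder: 2 plate lunches"]))
```

Relying on a fixed email template is what makes this kind of automation reliable; free-form emails would need a smarter parser (or an AI step) to extract the fields.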

Safina: If there’s one takeaway from this panel, I highly encourage anybody who’s dealing with marketing, sales, customer relationships or automation to check out Zapier. A feature called Zaps lets you build automated workflows with their own logic. If my company gets an inbound email or a form submission, we can segment who submitted the form. Is this an existing customer or a new one? If existing, do we want to send them an email or a Slack message, or notify our rep to call them? It talks to Slack, Salesforce, Intercom and text messaging. You don’t need to add new tools; you can link your existing tools to automatically perform actions that a human would normally handle. It’s an amazing tool to experiment with.
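In Zapier, this branching is configured in its web interface rather than written as code. The Python sketch below only mirrors the decision Safina describes (existing customer versus new contact) so the logic is easy to see. The `FormSubmission` type, the `route_submission` function and the action strings are hypothetical placeholders; nothing here calls Slack, Salesforce or any real service.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FormSubmission:
    email: str
    message: str


def route_submission(sub: FormSubmission, existing_customers: set[str]) -> list[str]:
    """Decide follow-up actions for an inbound form submission.

    Returns placeholder action strings; a real workflow would hand each one
    to Slack, a CRM or an email tool instead.
    """
    known = {e.lower() for e in existing_customers}  # compare emails case-insensitively
    if sub.email.lower() in known:
        # Existing customer: alert the team and have their rep follow up.
        return [
            f"slack: existing customer {sub.email} wrote: {sub.message}",
            f"task: rep to call {sub.email}",
        ]
    # New contact: record the lead and send a first reply.
    return [
        f"crm: create lead for {sub.email}",
        f"email: send welcome reply to {sub.email}",
    ]


if __name__ == "__main__":
    customers = {"alice@example.com"}
    print(route_submission(FormSubmission("Alice@Example.com", "Need a quote"), customers))
    print(route_submission(FormSubmission("new@example.com", "Hello"), customers))
```

Keeping the "who is this?" check separate from the actions makes it easy to add more branches (for example, routing by product or region) without touching the notification steps.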

Ozawa: I want to mention a couple of AI companies with Hawai‘i ties. Legislature.ai started here about a year ago and allows you to track legislation that might impact you or your company. A company called Sudowrite has a writing tool focused on creative writing. I know a group that won a grant with an application AI wrote. And finally Segment X, if you’re looking for marketing and business development help.

Lau: And Reef.ai for understanding your customers. And I just want to mention the founder of Sudowrite, Amit Gupta, lives in Honolulu.

Audience question: The website theneuron.ai ranks AI tools, but is there a platform that replicates this panel and tells me, “You should be using this and that”?

Safina: There’s a newsletter I love called “You probably need a robot.” Every day or every other day, it sends you the latest business tools to use.

Higa: I find it useful to see if there is an AI integration for apps I already use and then test it. There’s good and bad AI integration, so test first.

Audience question: How do we learn to trust that these products are not uploading our data?

Safina: Every time I accept a privacy policy, I copy the whole policy and throw it in ChatGPT and ask, “Summarize this in 10 bullets,” so I know what I’m agreeing to.

Ozawa: All of these AI tools have a switch where you can tell them, “I don’t want you to use what I’m submitting to further train your bot.” They hide the switch, but you can find it and turn it on.