Illustration: Zohar Lazar

Slop started seeping into Neil Clarke’s life in late 2022. Something strange was happening at Clarkesworld, the magazine Clarke had founded in 2006 and built into a pillar of the world of speculative fiction. Submissions were increasing rapidly, but “there was something off about them,” he told me recently. He summarized a typical example: “Usually, it begins with the phrase ‘In the year 2250-something’ and then it goes on to say the Earth’s environment is in collapse and there are only three scientists who can save us. Then it describes them in great detail, each one with its own paragraph. And then — they’ve solved it! You know, it skips a major plot element, and the final scene is a celebration out of the ending of Star Wars.” Clarke said he had received “dozens of this story in various incarnations.”

These are prime examples of what is now known as slop: a term of art, akin to spam, for low-rent, scammy garbage generated by artificial intelligence and increasingly prevalent across the internet — and beyond. From their weird narrative instincts and inert prose, Clarke realized the stories came straight from ChatGPT. Sometimes they would arrive with the original prompt included, which was often as simple as “Write a 1,000-word science-fiction story.”

It was relatively easy to identify an AI-generated submission, but that required reading thousands (a “wall of noise”) and manually sorting them. Clarke compared the problem to turning off the spam filter and trying to read your email: “Okay, now multiply that by ten because that’s the ratio that we were getting.” Within weeks, the problem became unmanageable. “We had reached the point where we were on track to receive as many generated submissions as legitimate ones,” Clarke told me. Eventually, on February 20, 2023, he decided to close submissions temporarily. Clarkesworld had become one of the first victims of AI slop.

In the nearly two years since, a rising tide of slop has begun to swamp most of what we think of as the internet, overrunning the biggest platforms with cheap fakes and drivel, seeming to crowd out human creativity and intentionality with weird AI crap. On Facebook, enigmatic pages post disturbing images of maimed children and alien Jesuses; on Twitter, bots cluster by the thousands, chipperly and supportively tweeting incoherent banalities at one another; on Spotify, networks of eerily similar and wholly imaginary country and electronic artists glut playlists with bizarre and lifeless songs; on Kindle, shoddy books with stilted, error-ridden titles (The Spellbound Quest: Students Perilous Journey to Correct Their Mistake) are advertised on idle lock screens with blandly uncanny illustrations.

If it were all just a slightly more efficient form of spam, distracting and deceiving Facebook-addled grandparents, that would be one thing. But the slop tide threatens some of the key functions of the web, clogging search results with nonsense, overwhelming small institutions like Clarkesworld, and generally polluting the already fragile information ecosystem of the internet. Last week, Robyn Speer, the creator of WordFreq, a database that tracks word frequency online, announced that she would no longer be updating it owing to the torrent of slop. “I don’t think anyone has reliable information about post-2021 language usage by humans,” Speer wrote. There is a fear that as slop takes over, the large language models, or LLMs, that train on internet text will “collapse” into ineffectiveness — garbage in, garbage out. But even this horror story is a kind of wishful thinking: Recent research suggests that as long as an LLM’s training corpus contains at least 10 percent non-synthetic — that is, human — output, it can continue producing slop forever.

Worse than the havoc it wreaks on the internet, slop easily escapes the confines of the computer and enters off-screen systems in exasperating, troubling, and dangerous ways. In June, researchers published a study that concluded that one-tenth of the academic papers they examined “were processed with LLMs,” calling into question not just those individual papers but whole networks of citation and reference on which scientific knowledge relies. Derek Sullivan, a cataloguer at a public-library system in Pennsylvania, told me that AI-generated books had begun to cross his desk regularly. Though he first noticed the problem thanks to a recipe book by a nonexistent author that featured “a meal plan that told you to eat straight marinara sauce for lunch,” the slop books he sees often cover highly consequential subjects like living with fibromyalgia or raising children with ADHD. In the worst version of the slop future, your overwhelmed and underfunded local library is half-filled with these unchecked, unreviewed, unedited AI-generated artifacts, dispensing hallucinated facts and inhuman advice and distinguishable from their human-authored competition only through ceaseless effort.

Clarkesworld was, luckily, only temporarily disabled by the slop deluge; over the course of March 2023, with the help of volunteers, Clarke built a “very rudimentary spam filter,” and by the end of the month the magazine was able to reopen submissions. Clarke doesn’t like to describe how the filter works for fear of giving too much away to the spammers, but “it’s holding things at bay,” he said. Still, “it’s clear that business as usual won’t be sustainable,” he wrote in a blog post describing the problem. “If the field can’t find a way to address this situation, things will begin to break.”

“The information superhighway”: That’s what the internet was supposed to be. And while it’s hard to regard the internet we have now as a wholly beneficent advance in collective wisdom — the commercial opportunities afforded by connecting billions of people sit uncomfortably with the civic aspirations of some of the web’s pioneers — it’s hard to deny that an information superhighway, with some tolls and billboards and potholes, is more or less what we’ve got. It’s still the first place most of us go to answer questions, to find out what’s happening, and to learn new things.

Since the arrival of widespread consumer-grade generative AI, these tasks have become progressively more difficult. Answering questions via Google now requires contending with AI-authored “Overview” modules at the top of some search pages, which offer incorrect summaries — “None of Africa’s 54 recognized countries start with the letter ‘K,’” one Overview claimed — just often enough to render them untrustworthy. Attempting to read news online is now fraught with the possibility that you’re consuming unedited AI-generated tattle: CNET, BuzzFeed, USA Today, and Sports Illustrated have published stilted and often incorrect AI-generated articles or used phony images and biographies for “authors.”

Imagine you are going foraging and want to download to your Kindle a guide to distinguish between edible and toxic mushrooms. If you look on Amazon, you’ll turn up some obviously legitimate books. But early on in the search results, you’ll find some seemingly AI-generated guides as well — for example, Forager’s Harvest 101: A Comprehensive Guide to Identifying, Preserving, and Preparing Wild Edible Plants, Mushrooms, Berries, and Fruits, by “Diane Wells.” Elan Trybuch, the secretary of the New York Mycological Society, recently wrote a blog post warning mushroom foragers about these dangerously inadequate guides: It’s possible that Forager’s Harvest 101 is fully accurate and safe to use, but it’s almost certainly unreviewed and unchecked and “written” by an AI that, as Trybuch described the technology, “does not know the subtle differences between a mushroom that is poisonous … vs one that is not.”

It’s not particularly easy to tell the difference between the AI-generated guides and those written by experts. Forager’s Harvest 101 has an intelligibly (if cheaply) designed cover and legible (if smooth and voiceless) prose as well as an author biography featuring a photo of a smiling middle-aged woman. Is this a completely AI-generated object, a self-published pamphlet, or a book from a publishing house that recently slashed its marketing and editing budgets? Indeed, I feel comfortable saying it’s AI-generated only because a watermark on Diane’s author photo credits it to the AI that powers the fake-portrait website ThisPersonDoesNotExist.com.

Experiences like this — staring at a collection of books written by AI with computer-generated author photos and dozens of reviews written and posted by bots — have become for many people evidence for the “dead-internet theory,” the only slightly tongue-in-cheek idea, inspired by the increasing amount of fake, suspicious, and just plain weird content, that humans are a tiny minority online and the bulk of the internet is made by and for AI bots, creating bot content for bot followers, who comment and argue with other bots. The rise of slop has, appropriately, the shape of a good science-fiction yarn: a mysterious wave of noise emerging from nowhere, an alien invasion of semi-coherent computers babbling in humanlike voices from some vast electronic beyond.

But the idea that AI has quietly crowded out humans is not exactly right. Slop requires human intervention or it wouldn’t exist. Beneath the strange and alienating flood of machine-generated content slop, behind the nonhuman fable of dead-internet theory, is something resolutely, distinctly human: a thriving, global gray-market economy of spammers and entrepreneurs, searching out and selling get-rich-quick schemes and arbitrage opportunities, supercharged by generative AI.

“We know that the original source of these things was side-hustle scams,” Clarke told me. “People waving a bunch of money on YouTube or TikTok videos and saying, ‘Oh, you can make money with ChatGPT by doing this.’” Clarke could even trace spikes in submissions to specific videos: It’s not some burgeoning artificial super-intelligence or even a particularly sophisticated crew of scammers that has waylaid Clarkesworld; rather, it’s the audiences of influencers like Hanna Getachew, an accountant and technology-procurement manager who runs an Amharic-language YouTube account dedicated to “teaching side hustles and online jobs” — and who recently posted a video called “Get Paid With Clarkes World Magazine.” (Clarkesworld pays 12 cents per word for submissions of 1,000 to 22,000 words. Getachew claims viewers can “earn between $250 and $2,460.”)

The economics involved are simple. On one end, the demand: the effectively infinite, indiscriminate appetite for content of websites like Facebook and TikTok, which need enticements for users and real estate for advertisers. On the other, the supply: the astonishingly adequate, inexhaustible output of generative-AI apps like ChatGPT, Midjourney, or Microsoft’s Image Creator, heavily subsidized by investors and provided to consumers at low or no cost.

Billions of dollars are flowing among the many companies on either side of this dynamic, and the question for any would-be AI hustler is how to get in the middle, find an angle, and take a cut. The simplest, most straightforward option is to be a “slopper”: someone who generates content at scale using AI and manipulates or leverages a platform to make money from it. Sloppers may try to sell their content directly to people on a major marketplace — by, say, automating the production of recipe books to sell to unsuspecting (and maybe undiscriminating) customers on Amazon. Or they may build a website filled with articles generated by an LLM, festoon them with advertisements, and try to get them highly ranked on Google News. Maybe, and most straightforwardly of all, many simply vie for direct payments from platforms for AI-generated text, images, and videos: Facebook, TikTok, and Twitter all offer bonus payments for “engaging” content. (In a sense, so does Spotify, though we call those payments “royalties.”)

Take, as a case study in the slop economy, Facebook. Since the beginning of this year, obviously AI-generated images from anonymously administered pages have become inescapable. What began as riffs on already viral images have evolved into bizarre, sui generis dreamscapes through which inexplicable and unrelated themes and topics emerge: multiheaded, enormously breasted “farmer girls”; stewardesses wading in muddy rivers; amputee beggars carrying signs reading TODAY IS MY BIRTHDAY. One of the most famous of these images is “Shrimp Jesus,” a statuelike Jesus figure bobbing just underwater, his limbs and torso made entirely of the bristling chitinous bodies of shrimp. For the majority of these pages, there is no obvious scam at play, no ads or external links — no business model at all, just eerily contextless pages publishing demented nonsense into a void.

Where were these images coming from? The answer, at least in part, is a guy in Kenya named Stephen Mwangi. (At least, I think that’s his name and where he lives.) Stevo, as he introduced himself to me over WhatsApp, is the moderator of five YouTube channels and “about 170 Facebook pages” that deal heavily in AI-generated images, the largest of which has 4 million followers. He agreed to show me his methods, for a price. “If you need my information pay me,” he wrote. “No free information.” For a total of $105, I enrolled in a crash course in becoming a slopper.

His process for creating posts, he told me, is pretty simple and AI intensive: “I use ChatGPT to ask for the best images that can generate a lot of popularity and engagement on Facebook,” focusing on topics like the Bible, God, the U.S. Army, wildlife, and Manchester United. “WRITE ME 10 PROMPT picture OF JESUS WHICH WILLING BRING HIGH ENGAGEMENT ON FACEBOOK,” read the ChatGPT prompt in one screenshot he shared with me. You then take the prompts to the image-generation programs Leonardo.ai and Midjourney. Voilà: slop.

These pages make money through Facebook’s Performance bonus program, which, per the social network’s description, “gives creators the opportunity to earn money” based on “the amount of reach, reactions, shares and comments” on their posts. It is, in effect, a slop subsidy. The AI images produced on Stevo’s pages — rococo pictures of Jesus; muscular police officers standing on the beach holding large Bibles; grotesquely armored gargantuan helicopters — are neither scams nor enticements nor even, as far as Facebook is concerned, junk. They are precisely what the company wants: highly engaging content.

On a website like Facebook, the more strikingly weird an image is, the more likely it is to attract attention and engagement; the more attention and engagement, the more Facebook’s sorting mechanisms will promote and recirculate the image. Another AI content creator, a French financial auditor named Charles who makes bizarre pictorial stories about cats for TikTok, told me he always makes his content “a bit WTF” as “a way to make the content more viral, or at least to maximize the chances of it becoming viral.” Or as Stevo put it, “You add some exaggeration to make it engagementing.”

Stevo, who insisted he doesn’t “use bots” to juice his follower numbers or “pay for engagement,” shared a screenshot that showed a $500 “bonus earnings” payout for activity from mid-May to mid-June this year. (Minimum wage in Kenya ranges from about $120 to $270 a month.) It’s not really passive income, either. He said he spends about six hours a day administering his Facebook pages, but he works at the mercy of the website’s opaque moderation and decision-making processes. When I spoke with him, “God Lovers” had been placed under some kind of restrictions and wasn’t earning him money. He wasn’t sure what the problem was, but it wasn’t that the images were fake. “I have other pages which have over 100,000 followers which use AI images,” he said. Facebook doesn’t reveal how it calculates the value of the bonuses, and only creators in certain countries — the U.S., the U.K., and India among them — are eligible for the bonus program, which helps explain why, several times during our interview, Stephen insisted he was actually a British cybersecurity student named Jacob.

There’s a Stephen (and often a “Jacob”) behind all of this slop: an actual person uploading, say, identical Viking “novels” with seemingly AI-generated covers, all called Wrath of the Northmen: A Gripping Viking Tale of Revenge and Honor (that one has been published variously by authors named Sula Urbant, Sula Urbanz, and Sula Urbanr). The sloppers gather on message boards and chat apps and social media to swap tips and tricks. On Facebook, a 130,000-member group of Vietnamese sloppers called “Twitter Academy — Make Money on X” discusses methods of prompting ChatGPT to write X threads: “You are a Twitter influencer with a large following. You have a Funny tone of voice. You have a Creative writing style. Do not self-reference. Do not explain what you are doing.”

There are also hundreds of thousands of videos across the internet giving detailed instructions similar to the ones Stephen gave me. Jason Koebler, co-founder of 404 Media, an independent tech-news collective that acts as the publication of record for the world of slop, watched dozens of Hindi-language slop seminars on YouTube, many of them offering example prompts: “american soldier veteran holding cardboard sign that says ‘today’s my birthday, please like’ injured in battle veteran war american flag,” “A old American women is making forest lion out of cauliflower and her neighbors looking at it. keep it detailed.”

The creators of these kinds of seminars, equipped with the “sell shovels in a gold rush” playbook, often have a more reliable income than the sloppers themselves. They sell lesson plans and memberships to private Discord and Telegram chat rooms and act as middlemen who help set up U.S.-based accounts for international sloppers. If sloppers are the manufacturing sector of the slop economy, these gurus, vouchers, and toolmakers represent the service sector.

This ecosystem is not new. Influencers have been hawking platform-dependent “internet marketing” schemes for decades. What has changed is the level of work and investment involved. For a while now, it has been common for entrepreneurs to outsource the actual production of content: “I have two people in the Philippines who post for me,” one American Facebook-page operator told The New York Times Magazine in 2016. But when you’ve got an automated post-creating machine, who needs two people in the Philippines? For that matter, given the sophistication of the AI, why would the Filipinos need an American?

There’s no definitive way to tell how much slop has already been produced in the few short years generative-AI apps have been widely available, but there are ways to get a glimpse. Guillaume Cabanac, a professor of computer science at Université Toulouse III–Paul Sabatier, has spent the past several years attempting to ferret out instances of fraud, plagiarism, and the use of computer-generated text in major scientific journals. One of his methods is to focus on what he calls “smoking guns” — phrases that show unambiguously the use of an AI text generator like ChatGPT. One of these is “regenerate response,” which appears at the end of ChatGPT’s answers. “The persons did all that copy-paste and didn’t even care to remove” the telltale phrase, Cabanac said. Others are “as an AI language model,” “as of my knowledge cutoff,” and “I cannot fulfill that request,” phrases that ChatGPT and other chatbots use frequently.
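A phrase check of this kind is simple enough to sketch in a few lines of Python. What follows is only an illustration, not Cabanac’s actual tooling: the function name `find_smoking_guns` and the sample passage are hypothetical, and the phrase list contains nothing beyond the four examples quoted above.

```python
import re

# The four telltale phrases quoted in the article. A real screening list
# would presumably be longer; this one is an assumption for illustration.
SMOKING_GUNS = [
    "regenerate response",
    "as an AI language model",
    "as of my knowledge cutoff",
    "I cannot fulfill that request",
]


def find_smoking_guns(text: str) -> list[str]:
    """Return the telltale phrases found in text, ignoring case and line breaks."""
    # Collapse runs of whitespace so a phrase split across lines still matches.
    normalized = re.sub(r"\s+", " ", text).lower()
    return [phrase for phrase in SMOKING_GUNS if phrase.lower() in normalized]


# Hypothetical pasted passage, invented for this example.
sample = (
    "As of my knowledge\ncutoff, no studies had examined this effect. "
    "Regenerate response"
)
print(find_smoking_guns(sample))
```

A plain substring match like this catches only the laziest copy-paste jobs, which fits Cabanac’s own description of such finds as unambiguous but rare.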

Cabanac has found almost 100 cases of obviously AI-generated scientific papers, which he called “only the tiny tip of the iceberg.” A recent study by the librarian Andrew Gray used words that appear disproportionately often in text generated by ChatGPT — among them commendable, intricate, and meticulously — to estimate that 60,000 scholarly papers were at least partially generated by AI in 2023.
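The idea behind a word-frequency estimate can be sketched roughly as follows. This is not Gray’s methodology, which is not detailed here: the function name `share_with_markers` and both sets of sample abstracts are invented, and only the three marker words come from the study as described above. The sketch measures what fraction of a batch of texts contains at least one marker word; a jump in that share from one period to the next is the sort of signal an estimate like this might build on.

```python
import re

# Marker words named in the article; everything else here is hypothetical.
MARKER_WORDS = {"commendable", "intricate", "meticulously"}


def share_with_markers(documents: list[str]) -> float:
    """Return the fraction of documents containing at least one marker word."""
    if not documents:
        return 0.0
    hits = 0
    for doc in documents:
        # Split into lowercase alphabetic words so punctuation doesn't block a match.
        words = set(re.findall(r"[a-z]+", doc.lower()))
        if words & MARKER_WORDS:
            hits += 1
    return hits / len(documents)


# Invented abstracts standing in for an earlier and a later batch of papers.
earlier = [
    "We measure the decay rate.",
    "The intricate structure is mapped.",
    "Results are reported.",
    "A new method is proposed.",
]
later = [
    "We meticulously examine the commendable results.",
    "The intricate interplay is explored.",
    "Results are reported.",
    "A new method is proposed.",
]
print(share_with_markers(earlier), share_with_markers(later))
```

Because human writers use these words too, a count like this can suggest a trend across many papers but cannot show that any single paper was machine-written.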

You can do your own versions of these experiments at home. Searching “as of my knowledge cutoff” or “as an AI language model” in Google Books turns up hundreds of AI-generated “books” with titles like Hollywood’s 100 Leading Actors and Summary If the Woman in Me: A Guide to Britney Spears Memoir. On Amazon, a quick search found a listing for some (presumably real) underwear with the description “As of My Knowledge Cutoff in Early 2023, Providing Specific Purchasing Options for ‘Women’s Stylish Sexy Casual Independence Day Printed Panties’ Would Be My Capabilities As I Cannot Browse or Access Live Data From the Internet, Including Current Inventory From or Private Sellers.”

Twitter — Elon Musk’s X — may be the most fruitful platform for this kind of search thanks to its sub-competent moderation services. In January, Chris Mohney, a writer and an editor, spotted a tweet that appeared to be an AI-generated description of an image with no image attached: “The photo captures a couple exchanging vows at sunset. The emotions that it evokes are love, happiness, and the memory of a special day filled with promises.” Hundreds of verified accounts swarmed in the replies to praise the missing image: “This picture exudes pure love and joy, a magical moment indeed!,” “This photo truly encapsulates the beauty and magic of true love,” “Such a beautiful moment captured in time, filled with love and joy.”

Cabanac believes “LLMs can be a magnificent tool, a very effective tool” for scientists if properly acknowledged. Some researchers, especially those for whom English is not a first language, use ChatGPT and other AI programs to help with translation and editing. But many people “are using the LLMs to produce more, which lowers the quality of the science that is published and made.” Even innocuous misuse has a cascading effect on the entire scientific enterprise, as retracted papers cast doubt on other papers that cite them. “The error propagates, right?” he said. “It’s like a virus.”

AI-generated papers, Cabanac argued, are often used to pad an academic’s résumé with more publications and citations: “You buy a paper on a topic of your choice, and you buy a set of 500 citations. Then you go to your faculty and you say, ‘Look, I’m a genius, and I deserve this position as a full professor.’” In other words, like Facebook slop, the content of the content isn’t really as important as its presence — or, more accurately, its measurability.

This is the most widespread use yet found for generative-AI apps: creating stuff that can take up space and be counted. When you look through the reams of slop across the internet, AI seems less like a terrifying apocalyptic machine-god, ready to drag us into a new era of tech, and more like the apotheosis of the smartphone age — the perfect internet marketer’s tool, precision-built to serve the disposable, lowest-common-denominator demands of the infinite scroll.

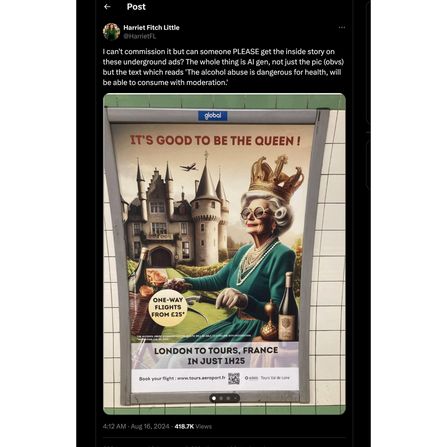

It’s nice to think that if you could simply turn off your phone and computer, you could avoid all these ugly creations. But slop has a way of leaking out. In the most recent season of True Detective, a heavy-metal poster in the background of one scene was obviously and cheaply AI-generated. (The showrunner insisted the poster was diegetically AI-generated.) On the subway, ads for the secondhand-furniture website Kaiyo feature images with oddly levitating pedestrians and signs written in the dream glyphs typical of image generators’ attempts at text.

Outsourcing design work to generative-AI apps may be an effective cost-cutting and productivity measure for some businesses, but in practice it just off-loads work elsewhere. The cost of slop to libraries is serious, Sullivan said, “not just the cost of the books” but the cost of labor: It takes cataloguers longer to do their jobs when they’re wading through “an outpouring of valueless product.” Human artists, writers, journalists, musicians, and even TikTokers have more work to do too, competing not just with other humans but with the passable products of automated systems.

There’s more work for us readers and watchers and content consumers as well. The future portended by the past two years is one in which we all become cataloguers, Neil Clarkes sorting through the noise for a little bit of signal. Even the stuff that passes through is a burden; unrefined, unedited slop is by definition more work to read, watch, interpret, and understand.

But it’s also, it seems, what we want. The other important participants in the slop economy, besides the sloppers and the influencers and the platforms, are all of us. Everyone who idly scrolls through Facebook or TikTok or Twitter on their phone, who puts Spotify on autoplay, or who buys the cheapest recipe book on Amazon is creating the demand.

Fifteen years ago, Wired magazine heralded the “good-enough revolution” in low-cost technology: “Cheap, fast, simple tools are suddenly everywhere … We now favor flexibility over high fidelity, convenience over features, quick and dirty over slow and polished.” Generative AI as a technology exists in this lineage. That it can create adequate texts and images is an astonishing leap forward in machine learning, but the texts and images are still only adequate, “good enough” and cheap enough for people to thumb past on their phones. Slop is the most appropriate word for what it produces because, as disgusting and unappetizing as it may seem, we still eat it. It’s what’s right there in the trough.

If you prefer to read in print, you can also find this article in the September 23, 2024, issue of New York Magazine.
