A FLOOD of deepfakes and “disinformation” threatens to disrupt the 2024 U.S. presidential election.

That’s the warning from experts who say it’s now “easier than ever” to create fake videos, images, and audio recordings.

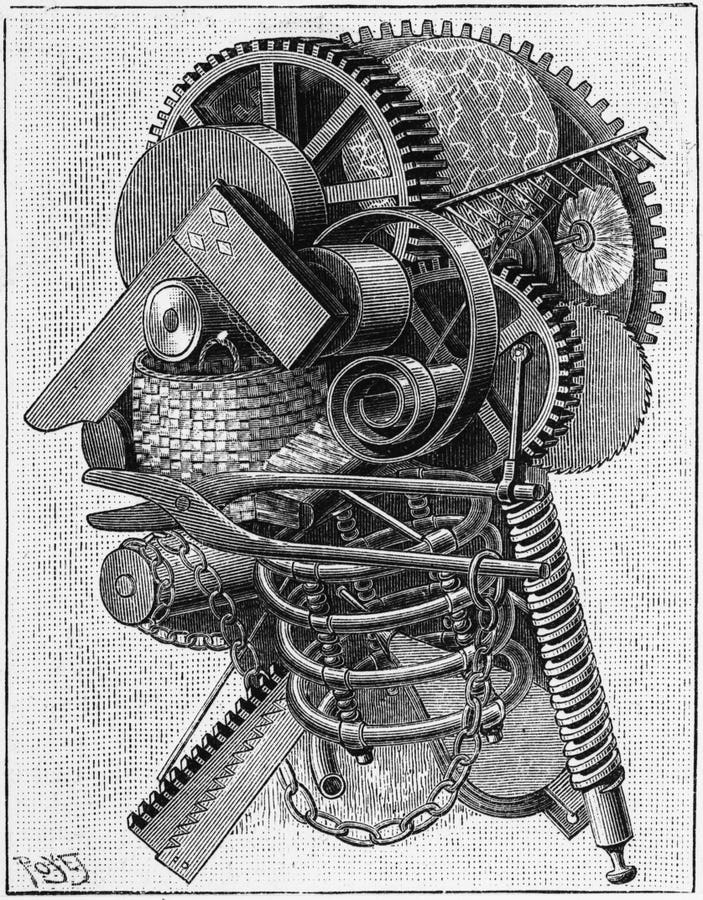

Fake images can be incredibly convincing – so treat what you see online with caution. Credit: Generated with AI by USC Price School staff

It’s thanks to artificial intelligence apps that allow the faces and voices of people to be digitally recreated.

And this can be used to make it seem like a person is doing or saying something they never did.

Earlier in the year, New Hampshire residents received phone messages from an AI voice “cloning” President Joe Biden that discouraged them from voting.

Now experts say the risk of fake information reaching voters is at its greatest.

“The rise of AI has made it easier than ever to create fake images, phony videos and doctored audio recordings that look and sound real,” said Christian Hetrick, of the University of Southern California.

“With an election fast approaching, the emerging technology threatens to flood the internet with disinformation, potentially shaping public opinion, trust and behavior in our democracy.”

The problem is that AI apps make it extremely simple to create fake content.

For instance, a voice can be cloned in a matter of seconds.

And an expert recently told The U.S. Sun that they could make a person appear to say anything just from a single photo.

HOW TO STAY SAFE

The University of Southern California released some official guidance on how to spot deepfakes.

One key tip is to confirm what you see with multiple sources.

This matters most when a video or image makes an especially bold claim.

You should also be skeptical of any political news that is highly emotional – and be sure to analyze it in case it’s false.

Deepfakes – what are they, and how do they work?

Here’s what you need to know…

- Deepfakes are phoney videos of people that look perfectly real

- They’re made using computers to generate convincing representations of events that never happened

- Often, this involves swapping the face of one person onto another, or making them say whatever you want

- The process begins by feeding an AI hundreds or even thousands of photos of the victim

- A machine learning algorithm swaps out certain parts frame-by-frame until it spits out a realistic, but fake, photo or video

- In one famous deepfake clip, comedian Jordan Peele created a realistic video of Barack Obama in which the former President called Donald Trump a “dipsh*t”

- In another, the face of Will Smith is pasted onto the character of Neo in the action flick The Matrix. Smith famously turned down the role to star in flop movie Wild Wild West, while the Matrix role went to Keanu Reeves

And be sure to double-check what you’re reading before you share it, otherwise you can make the problem worse.

“Democracies depend on informed citizens and residents who participate as fully as possible and express their opinions and their needs through the ballot box,” said Mindy Romero, of the University of Southern California.

“The concern is that decreasing trust levels in democratic institutions can interfere with electoral processes, foster instability, polarization, and can be a tool for foreign interference in politics.”

She added: “It can be hard for people to protect themselves against disinformation.”

DEFENCE AGAINST THE DEEPFAKES

Here’s what Sean Keach, Head of Technology and Science at The Sun and The U.S. Sun, has to say…

The rise of deepfakes is one of the most worrying trends in online security.

Deepfake technology can create videos of you even from a single photo – so almost no one is safe.

But although it seems a bit hopeless, the rapid rise of deepfakes has some upsides.

For a start, there’s much greater awareness about deepfakes now.

So people will be looking for the signs that a video might be faked.

Similarly, tech companies are investing time and money in software that can detect faked AI content.

This means social media will be able to flag faked content to you with increased confidence – and more often.

As the quality of deepfakes grows, you’ll likely struggle to spot visual mistakes – especially in a few years.

So your best defence is your own common sense: apply scrutiny to everything you watch online.

Ask whether the video is something it would make sense for someone to fake – and who benefits from you seeing the clip.

If you’re being told something alarming, a person is saying something that seems out of character, or you’re being rushed into an action, there’s a chance you’re watching a fraudulent clip.

Just last month, experts told The U.S. Sun that even “living offline” isn’t enough to fight deepfakes – because people could still find photos, videos, or audio clips of you.

And scammers are even using AI for a variety of cons, including romance scams.

Experts say being able to spot mistakes in deepfakes is no longer enough – you’ll need to find other ways to deal with the threat.

This includes setting up “safe words” with friends and family, and scrutinizing what you see online.