By Lottie Gibson

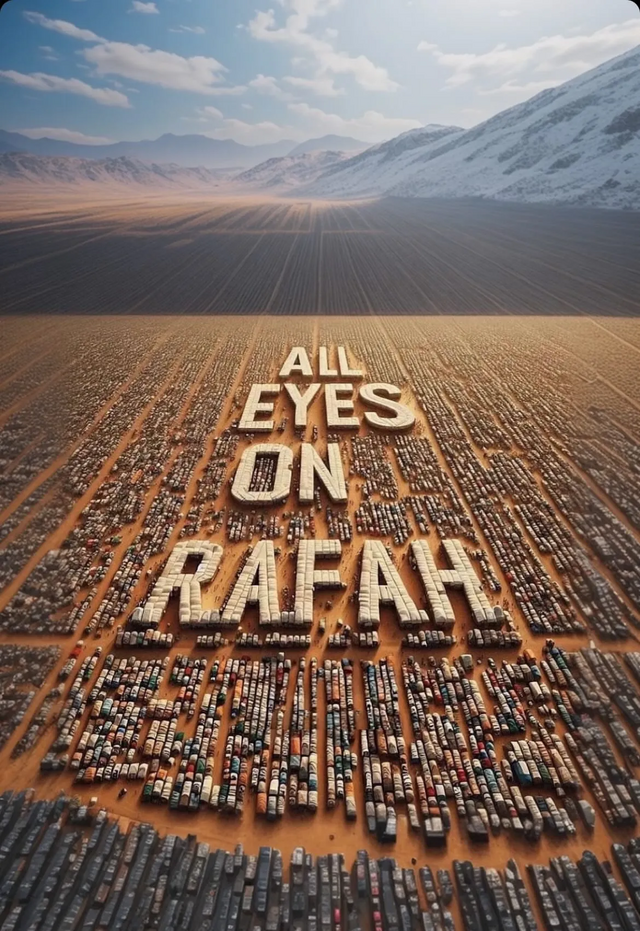

Nobody can deny that artificial intelligence and false information go hand-in-hand and can have a real impact on society. Images and videos spread across people’s feeds, most of the time without context. This has been seen recently with the ‘All Eyes on Rafah’ image, which in the past few weeks alone was shared over 47 million times on Instagram. The image, which was generated by artificial intelligence, depicts a refugee camp in Gaza, with the tents spelling out the title ‘All Eyes on Rafah’. The missing context is that the image does not accurately depict the situation on the ground in Gaza, and that it risks obscuring genuine photojournalism. Indeed, the reality in Rafah is the opposite of this glossy, sanitised image. As suggested by Angela Watercutter in Wired Magazine, the irony of this image cannot be denied: despite being asked to look towards Gaza, we are not really seeing Rafah at all.

This is far from the only example of artificial intelligence and deepfakes affecting our perception of global events. In the US, for example, some voters in New Hampshire received an automated phone call, apparently from Joe Biden, telling them not to go and vote in the presidential primary. The call turned out to be a deepfake. Deepfakes are not just a political problem, but also a personal one. As we have seen recently with pornographic deepfakes of Taylor Swift, AI is just as effective at exacerbating prejudices and enabling abuse. The use of deepfakes to manipulate, and even abuse, is therefore a global issue which must be dealt with urgently. Nor is it limited to celebrities: it extends to the general public at large.

So why have false information and deepfakes become so prevalent? Firstly, deepfake technology has become more accessible. Software which creates basic deepfakes is relatively affordable, if not completely free, and easily downloadable. This means that anyone can create deepfake images or videos by following a basic tutorial. With this power so widely available, the sheer quantity of AI-generated images online has increased vastly. Whilst not all of these apps are able to create “believable” content, technology is advancing at such a speed that we should expect to see more intelligent, high-performing software emerging in the future.

Secondly, because of social media it has never been easier to spread false information. All social media users have themselves become ‘broadcasters’, each with the capacity to share images online, even if they have not been fact-checked. This leads to a huge amount of exaggerated, edited, or false information being spread quickly and effectively. As a consequence, the lines between true and false have become blurred, and it is hard for us as individuals to distinguish between genuine and false information.

Obviously, the future of media in an AI world is worrying, but there is still some hope if we strive to build a media-literate and alert society. One way to do this is through education. Whilst this education could be considered solely a governmental responsibility, media outlets themselves would also benefit from offering outreach programmes to teach citizens about the risks of false information. Through such initiatives, media outlets could explain to audiences the value of receiving information from reliable sources rather than social media, which, in turn, would help those outlets to gain younger, more engaged audiences.

Social media channels themselves also have a crucial role to play. All images generated by AI and posted online should be marked as such, in order to help people to differentiate between real and false images. Whilst labelling is improving, more needs to be done. With visible markers on AI images, people would better understand what a deepfake or manipulated image looks like, so that they can more easily identify them in the future. This would allow for a generation of critical, independent thinkers, who have a clear understanding of the threats to our media.

It is crucial that we recognise our role in the sharing of false information. Social media is, of course, a positive tool in terms of learning about people’s personal experiences. However, as media users we must think critically, become sensitive to the false information and images in our social media feeds, and improve our media literacy in the face of massive swathes of information, both true and false.

Image: Public Domain