In a world increasingly shaped by artificial intelligence, a question arises: can AI chatbots like ChatGPT detect AI-generated deepfakes?

Can AI Chatbots Like ChatGPT and Gemini Detect Deepfakes?

A new University at Buffalo-led study aims to answer this question, focusing on large language models (LLMs) like OpenAI’s ChatGPT and Google’s Gemini.

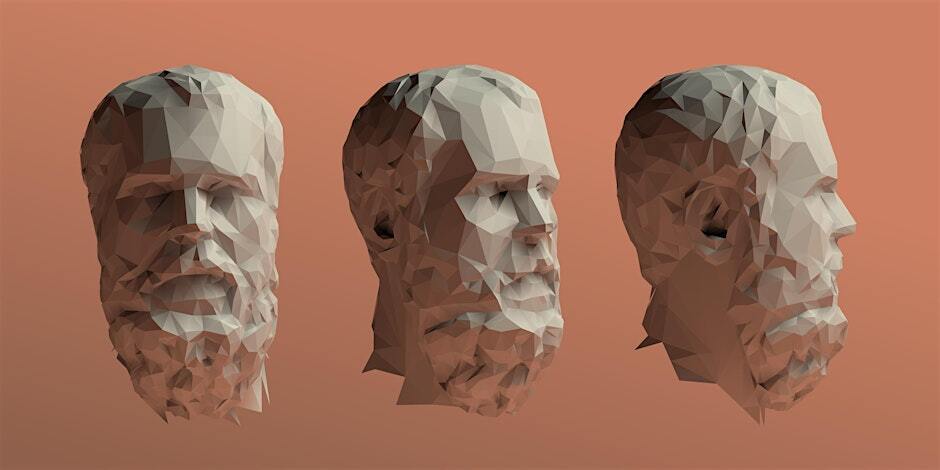

The study explored the potential of LLMs for spotting deepfakes of human faces, a challenge that has grown more pressing as AI-generated content spreads across social media and other platforms.

The team found that although LLMs did not match the performance of state-of-the-art deepfake detection algorithms, their natural language processing capabilities could make them valuable tools for this task in the future.

Recent versions of models like ChatGPT can analyze images. They are trained on large databases of captioned photos, which lets them learn the relationships between words and visual content.

The researchers gave the models thousands of real and AI-generated images and asked them to identify signs of manipulation. ChatGPT was 79.5% accurate at flagging synthetic artifacts in images generated by latent diffusion models and 77.2% accurate on images generated by StyleGAN.

A key advantage highlighted in the study was ChatGPT's ability to explain its decisions in terms humans can understand. For instance, when analyzing an AI-generated photo of a man wearing glasses, the model pointed to blurred hair on one side of the image and an abrupt transition between the person and the background.

According to the researchers, these human-readable explanations add a layer of transparency not typically found in traditional deepfake detection algorithms, which often output only a probability score without any context.


(Photo: Gerd Altmann from Pixabay)

Drawbacks of ChatGPT and Gemini

However, the study also identified several drawbacks. ChatGPT's accuracy still lagged behind that of the latest deepfake detection algorithms, which achieve accuracy rates in the mid-to-high 90s (percent).

According to the team, this gap is partly due to LLMs' inability to detect signal-level statistical differences that are invisible to the human eye but that specialized algorithms can pick up.

Moreover, the semantic knowledge that makes ChatGPT's explanations intuitive can also limit its effectiveness. Because the model focuses on semantic-level abnormalities, such as odd-looking hair or backgrounds, it may miss subtler traces of deepfake manipulation.

Another challenge was that ChatGPT often refused to analyze photos when asked directly whether they were AI-generated. According to the researchers, it usually replied that it was unable to assist with the request, a refusal they attributed to the model's confidence thresholds.

Gemini, the other model tested in the study, performed comparably to ChatGPT at identifying synthetic artifacts but struggled to provide coherent explanations. According to the study, its supporting evidence often included nonsensical observations, such as pointing to moles that did not exist.

The researchers concluded that although LLMs like ChatGPT show promise as deepfake detection tools, they are not yet ready to replace specialized algorithms. The findings were presented at the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), and the paper is also available on arXiv.


ⓒ 2024 TECHTIMES.com All rights reserved. Do not reproduce without permission.