The AI race is heating up, and Meta just made a bold move with its latest release: Llama 4. Designed to challenge the industry’s top players, like OpenAI’s GPT-4.5 and Google’s Gemini, Meta’s Llama 4 models push the boundaries of AI performance, efficiency, and accessibility.

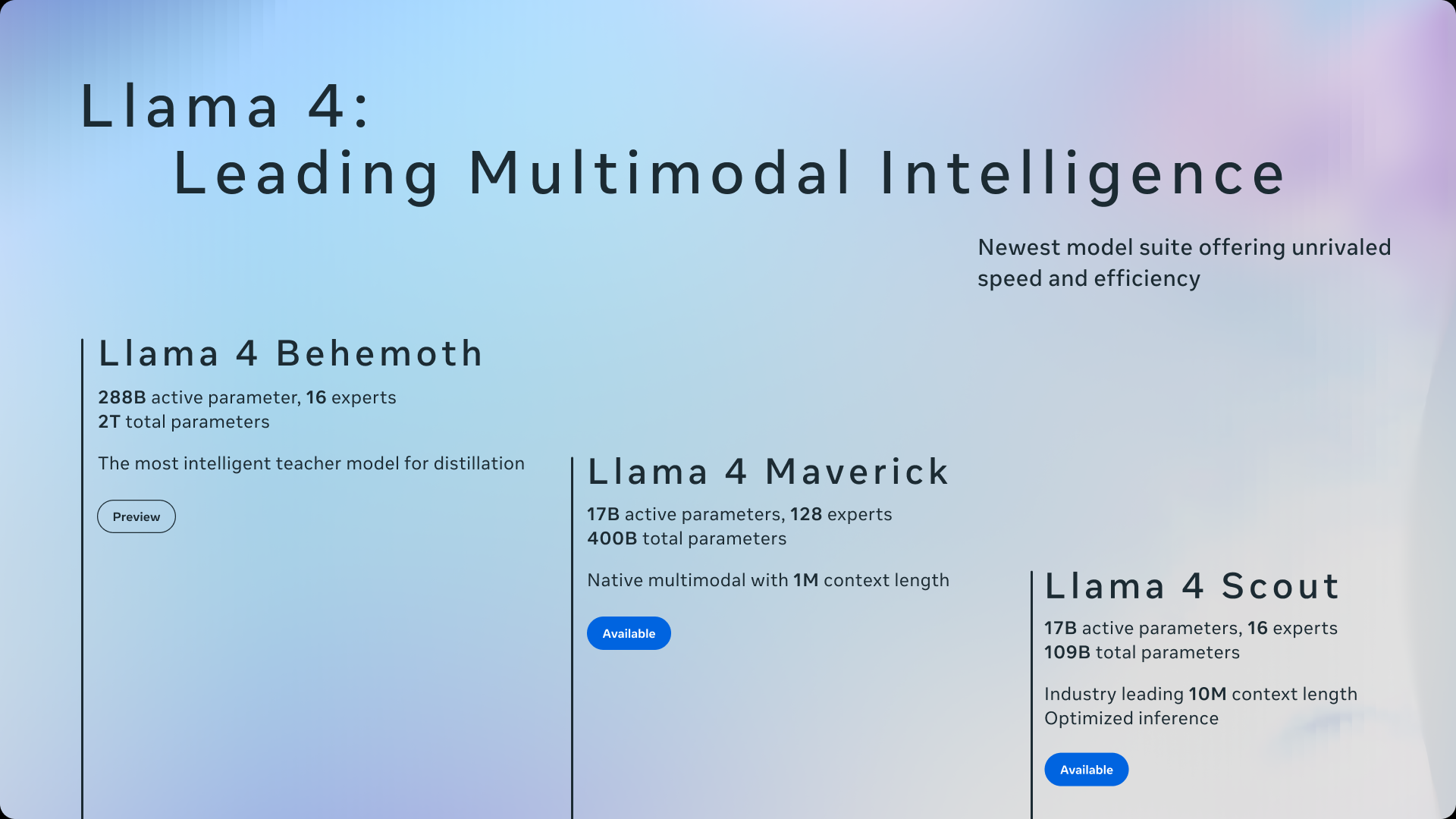

Meta’s Llama models have evolved significantly. While Llama 2 and 3 topped out at 7 and 8 billion parameters, Llama 4 pushes the limit with up to 17 billion parameters, and Llama 4 Behemoth takes it further with 2 trillion, solidifying its place in the AI race.

Open-source AI gets a big boost with Meta’s latest Llama models

The goal is to accelerate AI innovation and democratize access to this cutting-edge technology.

So far, Meta has released two versions: Scout and Maverick. Scout, built for speed, features a 10-million-token context window, which, according to Meta, allows it to handle more complex tasks than models like Google’s Gemini 7B or Mistral 7B, all while running on a single Nvidia H100 GPU. This makes high-performance AI far more accessible to developers without massive cloud budgets.

Then there’s Maverick, which uses a mixture-of-experts (MoE) system with 128 experts. Instead of running the entire model for every task, Maverick activates only the necessary parts, making it much more efficient. Despite its smaller compute footprint, it rivals the performance of GPT-4 and Gemini 2.0 Flash.

Image Credit: Meta

Image Credit: Meta

Next up is Llama 4 Behemoth, still in development, which promises to be an absolute powerhouse with 2 trillion parameters. Early reports suggest it could outperform models like GPT-4.5 and Claude Sonnet 3.7, especially in STEM tasks, but we’ll have to wait for official benchmarks to confirm.

What makes Llama 4 especially significant isn’t just the sheer size or speed of its models — it’s Meta’s approach to AI. The MoE architecture reduces compute demands by activating only the parts of the model needed for each task, which could lower costs and expand accessibility. If other companies, from open-source players like Mistral to enterprise-focused tools like Cohere, adopt this approach, we could see faster, cheaper AI spread far beyond the tech giants.

Meta is also shaking things up by offering Llama 4 models under a license to developers and businesses, making it more accessible than proprietary systems. It’s integrated into Meta’s own platforms like Facebook, Instagram, and WhatsApp, setting the stage for more efficient, modular AI systems in the future, where power doesn’t always mean size.

Llama 4 may be Meta’s ticket to reshaping the AI landscape, driving innovation and potentially shifting the economics of the industry.

Meta is developing an in-house AI chip to train its AI model. Because, why not?

It could help it reduce reliance on other chip companies like Nvidia.